System and media recovery


Revision as of 17:20, 9 March 2015

Overview

Hardware, software, or media failures can compromise database integrity. The recovery facilities of Model 204 detect and correct integrity problems that can affect some or all Model 204 files in Online or Batch updating jobs.

This page discusses individual transaction and database recovery facilities provided by Model 204 in the event of application, system, or media failure. However, the importance of a reliable, well-maintained backup plan cannot be overemphasized; see the Preparing for media recovery section.

This page describes the SOUL statements, Model 204 commands and parameters that are coordinated and used by the recovery facilities. The recovery facilities include:

For system recovery:

- Transaction Back Out

- RESTART recovery, which utilizes:

  - ROLL BACK processing

  - ROLL FORWARD processing

For media recovery:

- NonStop/204 for file backups

- Processing for REGENERATE and REGENERATE ONEPASS commands, applying updates that have occurred since the file's last backup:

  - ROLL FORWARD processing

  - UTILJ utility

  - MERGEJ utility

Other topics related to Model 204 recovery are discussed in the following places:

| Topic | Discussed in... |

|---|---|

| Keeping an audit trail by recording and printing system activity | Tracking system activity (CCAJRNL, CCAAUDIT, CCAJLOG) |

| Recovering application subsystem definitions (CCASYS) | System requirements for Application Subsystems |

| Storing preimages of changed pages and checkpoints | Performance monitoring and tuning |

| Handling CCATEMP, the system scratch file, after a system crash | Using the system scratch file (CCATEMP) |

| Using the system statistics | Using system statistics |

Consulting other Model 204 documentation

You can also consult:

- The CHECKPOINT command can request either a transaction checkpoint or a sub-transaction checkpoint. The options are TRAN, the default, for a transaction checkpoint and SUBTRAN for a sub-transaction checkpoint.

- The MONITOR command has a SUBTRAN option to display the status of sub-transaction checkpoints.

- Error diagnosis

- Methods for maintaining transaction efficiency

- Discussion of update units

- Rocket Model 204 Host Language Interface Programming Guide and Rocket Model 204 Host Language Interface Reference Manual for:

  - Details regarding Host Language Interface calls, IFAM1, IFAM2, and IFAM4 applications

  - Transaction behavior

  - Record retrievals and transaction efficiency

Preparing for media recovery

To use media recovery effectively, an installation must establish procedures for backing up files on a regular basis and for archiving and maintaining CCAJRNL files that contain a complete history of file activity.

Media recovery requires the following preparation:

- Install the MERGEJ utility.

  Installation and link-editing of MERGEJ is discussed in the installation guide for your operating system.

- Dump Model 204 files on a regular basis.

  Use the Model 204 DUMP command or any other DASD management utility.

  Note: If your Model 204 backups are not done using a Model 204 DUMP command, you must make certain that no one is updating the Model 204 file at the same time the backup is being performed. If you use a backup from a Model 204 dump file, updates made to the file since the dump are reapplied by the ROLL FORWARD facility of the REGENERATE command, using single, concatenated, or merged journals as input.

- Produce and archive journals. You must maintain a complete record of update activity in the form of archived CCAJRNLs, in all types of Model 204 runs (Online, Batch, and RESTART recovery).

  Note: In a z/VSE environment, the use of concatenated journals for regeneration is not supported.

- Run the UTILJ utility against any journal that does not have an end-of-file marker before using the MERGEJ utility. See Using the UTILJ utility.

- Merge any overlapping journals needed for a media recovery run by running the MERGEJ utility.

- If you are running media recovery, you must ensure that all files that were in deferred update mode during part or all of the time period you specify in the REGENERATE command are still in deferred update mode during the media recovery run. This might require larger deferred update data sets than those in the original run.

Recovery features

Model 204 protects the physical and logical consistency of files at all stages of processing using the following features.

Handling error diagnosis

Error diagnostic features, described in Error recovery features, ensure data integrity by command syntax checking, compiler diagnostics, error recovery routines for data transmission from user terminals, and maintaining and checking trailers on each table page.

Handling Transaction Back Out

Logical consistency of individual updates to a Model 204 file is protected by the Transaction Back Out facility, which is activated by the FRCVOPT and FOPT parameters. Transaction back out locks records until an update is completed. If the update is not completed, the effect of the incomplete transaction on file data is automatically reversed.

Handling a system recovery

Model 204 system recovery procedures involve the following functions:

| Facility | Description |

|---|---|

| Checkpoint facility | Logs preimages of pages, which are copies of pages before changes are applied, to the CHKPOINT data set. |

| Journal facility | Contains incremental changes that reflect updates to database files; see Tracking system activity (CCAJRNL, CCAAUDIT, CCAJLOG). |

| RESTART recovery | In the event of a system failure, the restart facility is invoked by the RESTART command. This invokes the ROLL BACK facility and, optionally, the ROLL FORWARD facility. |

| ROLL BACK facility | Rolls back all Model 204 files to a particular checkpoint — that is, undoes all of the changes applied to the files since the last checkpoint. |

| ROLL FORWARD facility | Rolls each file forward as far as possible — that is, reapplies all complete updates and committed transactions that were undone by the ROLL BACK facility. |

System recovery is initiated by the RESTART command, which invokes the ROLL BACK facility and, optionally, the ROLL FORWARD facility.

In the event of a system failure, you can restore all files. Any Model 204 file that was being updated at the time of a system failure is flagged. Subsequently, whenever the file is opened, the following message is issued:

M204.1221: filename IS PHYSICALLY INCONSISTENT

Once recovery procedures are executed, any discrepancies in the files are corrected and the message is no longer issued.

Handling a media recovery

In the event of media or operational failure, you can restore individual files from previously backed-up versions. Restoration of the files and reapplication of the updates are invoked by the REGENERATE and REGENERATE ONEPASS commands.

When recovery procedures are completed, status reports are produced for each file participating in the recovery.

Understanding transaction back out

Transaction back out, also called TBO, protects the logical and physical consistency of individual transactions. Individual updates performed by SOUL statements or corresponding Host Language calls can be reversed automatically or explicitly, when:

- A request is canceled

- A file problem or runtime error is detected

- A user is restarted

- Requested by a SOUL or Host Language Interface program via the BACKOUT statement or IFBOUT call, respectively

Transaction back out is enabled on a file-by-file basis by specifications made on the FRCVOPT and FOPT file parameters.

Making updates permanent

A commit is the process of ending a transaction and making the transaction updates permanent. A commit is performed by issuing:

- COMMIT statement

- COMMIT RELEASE statement

- Host Language Interface IFCMMT call

Other events that end a transaction, such as end-of-request processing, perform an implicit commit.

Transaction boundaries

For SOUL updates, a transaction begins with the first evaluation of an updating statement in a given request. One request can execute multiple transactions.

SOUL transactions are ended by any one of the following events:

- A BACKOUT statement (the transaction ends after the back out is complete)

- A COMMIT statement

- A COMMIT RELEASE statement

- End of a subsystem procedure, unless overridden in the subsystem definition

- A CLOSE FILE command or any file updating command, such as RESET or REDEFINE, following an END MORE command

- Return to terminal command level using the END command or, for nested procedures, the end of the outermost procedure

In an application subsystem environment (also called the Subsystem Management facility), you can make transactions span request boundaries by using the AUTO COMMIT=N option in the subsystem definition.

Once a transaction ends, another transaction begins with the next evaluation of an updating statement.
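The transaction boundary rules for SOUL can be illustrated with a small Python sketch. It is a simplified, hypothetical model, not Model 204 code: a request is a list of statement names, only a handful of SOUL statements are classified as updating or ending, and AUTO COMMIT=N subsystems (where transactions span requests) are not modeled.

```python
# Hypothetical, simplified classification of a few SOUL statements.
# Anything not listed (for example FIND RECORDS or PRINT) is non-updating.
UPDATING = {"STORE RECORD", "DELETE RECORD", "ADD", "CHANGE", "DELETE"}
ENDING = {"COMMIT", "COMMIT RELEASE", "BACKOUT"}


def split_transactions(statements):
    """Group a request's updating statements into transactions.

    A transaction begins at the first updating statement and ends at the
    next COMMIT, COMMIT RELEASE, or BACKOUT. A transaction still open when
    the request ends is closed by an implicit commit.
    """
    transactions = []
    current = []
    for stmt in statements:
        if stmt in UPDATING:
            current.append(stmt)
        elif stmt in ENDING and current:
            transactions.append((current, stmt))
            current = []
        # An ending statement with no open transaction has nothing to end.
    if current:
        transactions.append((current, "implicit commit"))
    return transactions
```

For example, `split_transactions(["FIND RECORDS", "STORE RECORD", "CHANGE", "COMMIT", "PRINT", "DELETE RECORD"])` yields two transactions from one request: `(["STORE RECORD", "CHANGE"], "COMMIT")` and `(["DELETE RECORD"], "implicit commit")`.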

Updates that can be backed out

For files that have transaction back out enabled, you can back out all SOUL or Host Language Interface updates that are not yet committed. You can initiate back out only on active or incomplete transactions.

Updates that cannot be backed out

The updates that you cannot back out include:

- The following commands: ASSIGN, BROADCAST FILE, CREATE, DEASSIGN, DEFINE, DELETE, DESECURE, INITIALIZE, REDEFINE, RENAME, RESET, SECURE

- The following Host Language Interface calls: IFDFLD, IFDELF, IFINIT, IFNFLD, IFRFLD, IFRPRM, IFSPRM

- Procedure definitions (PROCEDURE command)

- Procedure edits

- Any file update that has been committed

- Any update to a file that does not have transaction back out enabled

See the Rocket Model 204 Host Language Interface Reference Manual for a discussion of Host Language Interface transaction behavior.

Back out mechanism

Transaction back out logically reverses transactions by applying compensating updates to files participating in the transaction. For example, the logical reversal or back out of a STORE RECORD statement is equivalent to processing a DELETE RECORD statement.

Compensating updates for incomplete transactions are stored temporarily in a back out log in CCATEMP and then deleted when the transaction is committed or backed out.
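The compensating-update idea can be sketched as follows. This is a hypothetical in-memory model (the `BackoutLog` class and its methods are illustrative names, and a Python dict stands in for a file); it does not represent how Model 204 lays out the back out log in CCATEMP.

```python
class BackoutLog:
    """In-memory stand-in for one transaction's back out log (hypothetical)."""

    def __init__(self, file):
        self.file = file          # dict: record number -> record contents
        self.compensations = []   # compensating updates, oldest first

    def store_record(self, recno, data):
        self.file[recno] = data
        # The compensating update for STORE RECORD is DELETE RECORD.
        self.compensations.append(("DELETE RECORD", recno, None))

    def change_record(self, recno, data):
        previous = self.file[recno]
        self.file[recno] = data
        self.compensations.append(("RESTORE", recno, previous))

    def delete_record(self, recno):
        previous = self.file.pop(recno)
        # The compensating update for DELETE RECORD re-stores the old contents.
        self.compensations.append(("RESTORE", recno, previous))

    def commit(self):
        # Updates become permanent; the compensating updates are discarded.
        self.compensations.clear()

    def backout(self):
        # Apply compensating updates newest first, then discard them.
        for action, recno, previous in reversed(self.compensations):
            if action == "DELETE RECORD":
                del self.file[recno]
            else:
                self.file[recno] = previous
        self.compensations.clear()
```

Applying the compensations in reverse order matters: a record stored and then deleted in the same transaction must be re-stored before the final DELETE RECORD compensation removes it.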

Lock-pending-updates locking mechanism

Transaction back out utilizes an additional locking mechanism, called lock-pending-updates, to assure data integrity and transaction independence. A record in the process of being updated in one transaction is inaccessible by other transactions until the updating transaction commits or is backed out.

The lock-pending-updates (LPU) lock is separate from other record locks and can be enabled without transaction back out. The LPU lock is:

- Always exclusive

- Placed on each record as soon as it is updated by a transaction

- Held until the transaction commits

- Released by:

  - SOUL BACKOUT, COMMIT, and COMMIT RELEASE statements

  - Host Language Interface IFBOUT, IFCMMT, and IFCMTR calls

  - All implied commits or automatic back outs of the transaction

Note: The SOUL RELEASE RECORDS statement does not release the LPU lock.
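The LPU lock rules listed above can be modeled with a small lock table. This is a hypothetical sketch: transaction IDs are plain strings, and Model 204's handling of a lock conflict is not modeled (the sketch simply raises an error).

```python
class LpuLockTable:
    """Hypothetical model of lock-pending-updates (LPU) locking."""

    def __init__(self):
        self.holder = {}  # record number -> transaction holding its LPU lock

    def update(self, txn, recno):
        # The LPU lock is exclusive and is placed as soon as a record is updated.
        owner = self.holder.get(recno)
        if owner is not None and owner != txn:
            # Conflict handling is not modeled; this sketch just raises.
            raise RuntimeError(f"record {recno} is held by {owner}")
        self.holder[recno] = txn

    def end_transaction(self, txn):
        # Commits (explicit or implied) and back outs (explicit or automatic)
        # release every LPU lock the transaction holds.
        for recno in [r for r, t in self.holder.items() if t == txn]:
            del self.holder[recno]

    def release_records(self, txn):
        # SOUL RELEASE RECORDS does not release LPU locks: nothing changes.
        pass
```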

Back out and constraint logs

A back out log of compensating updates and a constraints log, which ensures the availability of resources freed by active transactions, are built in CCATEMP and freed when the transaction ends or is backed out. Sufficient storage space must be allocated for CCATEMP to accommodate the back out and constraints logs. Inadequate space can terminate the run.

Understanding the back out log

All pages not used by any other operation in CCATEMP can be used for back out logging. A minimum of one page is required.

The number of pages needed in CCATEMP depends on the number of concurrent transactions and the size of each transaction. More information about calculating CCATEMP space is given in Sizing CCATEMP.

Rocket Software recommends that you keep transactions short with frequent COMMIT statements. Short transactions minimize the CCATEMP space used, allow more frequent checkpoints, reduce record contention from lock-pending-updates locking, and reduce overhead for all updating users by keeping the back out and constraints logs small.

Understanding the constraints log

The constraints log is a dynamic hashed database that resides in a few virtual storage pages, with any extra space needed residing in CCATEMP. This database has primary pages and overflow pages, also called extension records. When a primary page fills up, additional records go onto overflow pages that are chained from the primary page. When a primary page becomes too full, a process is invoked that splits the page into two pages. Splitting continues up to the maximum allowed number of primary pages, at which point it stops and the overflow page chains are allowed to grow as large as required.

As the constraints database contracts, this process is reversed. Those pages with only a few records are merged together or compacted. This process continues until only one page remains.
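The split-and-merge behavior described for the constraints log resembles a dynamic hashed structure. The following toy Python sketch illustrates that general technique only; the hash function, page capacity, maximum primary page count, and merge threshold are all arbitrary assumptions, and this is not Model 204's actual constraints log algorithm.

```python
HASH_SPACE = 2 ** 32


def page_hash(key: int) -> int:
    # Knuth multiplicative hashing: an arbitrary choice for this sketch.
    return (key * 2654435761) % HASH_SPACE


class ConstraintsLogSketch:
    """Toy dynamic hashed store; not Model 204's actual constraints log."""

    def __init__(self, page_capacity=4, max_primary_pages=8):
        self.page_capacity = page_capacity
        self.max_primary_pages = max_primary_pages
        # Each primary page owns a contiguous hash range [low, high). Records
        # beyond page_capacity on a page stand in for its overflow chain.
        self.pages = [{"low": 0, "high": HASH_SPACE, "records": []}]

    def _index(self, key):
        h = page_hash(key)
        for i, page in enumerate(self.pages):
            if page["low"] <= h < page["high"]:
                return i
        raise AssertionError("hash ranges must cover the whole hash space")

    def add(self, key):
        i = self._index(key)
        page = self.pages[i]
        page["records"].append(key)
        # Split an over-full page in two until the primary page limit is hit;
        # after that, the page's overflow chain simply keeps growing.
        if (len(page["records"]) > self.page_capacity
                and len(self.pages) < self.max_primary_pages):
            mid = (page["low"] + page["high"]) // 2
            left = [k for k in page["records"] if page_hash(k) < mid]
            right = [k for k in page["records"] if page_hash(k) >= mid]
            self.pages[i:i + 1] = [
                {"low": page["low"], "high": mid, "records": left},
                {"low": mid, "high": page["high"], "records": right},
            ]

    def remove(self, key):
        self.pages[self._index(key)]["records"].remove(key)
        self._compact()

    def _compact(self):
        # Merge neighbouring pages that together hold only a few records,
        # possibly all the way back down to a single page.
        i = 0
        while i + 1 < len(self.pages):
            a, b = self.pages[i], self.pages[i + 1]
            if len(a["records"]) + len(b["records"]) <= self.page_capacity // 2:
                self.pages[i:i + 2] = [{"low": a["low"], "high": b["high"],
                                        "records": a["records"] + b["records"]}]
            else:
                i += 1
```

Adding 100 keys to this sketch with the defaults stops splitting at 8 primary pages, and removing every key merges the pages back into one, mirroring the growth and contraction described in the text.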

The resources freed by User Language statements, such as Table B space, record numbers, and Table C hash cells, cannot be reused by other active transactions until the transaction that freed the space ends. Before constrained resources can be used, any new transactions needing those resources must search the constraints log.
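A minimal sketch of the constraint rule, assuming a hypothetical `ConstrainedPool` of integer resources (standing in for record numbers or similar): a freed resource is marked free at once but stays constrained until the freeing transaction ends. The sketch assumes that a backed-out release returns the resource to its former use, consistent with back out reversing the transaction's updates.

```python
class ConstrainedPool:
    """Hypothetical pool of reusable resources, such as record numbers."""

    def __init__(self):
        self.free = set()       # resources marked free in the file
        self.constraints = {}   # resource -> active transaction that freed it

    def release(self, txn, resource):
        # The resource is free, but constrained until `txn` ends.
        self.free.add(resource)
        self.constraints[resource] = txn

    def acquire(self):
        # Search the constraints log before handing out a free resource.
        usable = sorted(r for r in self.free if r not in self.constraints)
        if not usable:
            return None
        self.free.remove(usable[0])
        return usable[0]

    def end_transaction(self, txn, committed=True):
        for resource in [r for r, t in self.constraints.items() if t == txn]:
            del self.constraints[resource]
            if not committed:
                # Back out restores the resource to its former use.
                self.free.discard(resource)
```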

The constraints log uses specially formatted pages with approximately 300 records per page. CCATEMP space considerations for constraint logging are the same as for logged back out images, which are described in Understanding the back out log.

All CCATEMP pages not used by any other operation are available for constraints logging. A minimum of one page is required.

Optimizing the constraints log performance

The parameters CDMINP2X and CDMAXP2X optimize the constraints log performance. The constraints log is used in transaction back out. The constraints log uses several pages that are permanently allocated in storage; when those pages become full, additional CCATEMP pages are allocated.

The number of permanently allocated pages is controlled by the CDMINP2X parameter. Allocating more pages in storage saves time, because disk read-writes are not necessary and CPU time spent accessing pages is lower.

Note: Permanently allocating too many pages (thousands) can waste virtual storage, because unused in-storage pages cannot be reused, whereas unused CCATEMP pages can be reused for other purposes.

Model 204 uses the constraints log efficiently by merging or splitting pages to keep the amount of data per page within certain limits. This saves space used by the constraints log but requires additional CPU time. The CDMAXP2X parameter specifies the maximum number of pages to keep in compacted form.

Note: When the number of pages used in the constraints log exceeds the CDMAXP2X setting, additional pages are not compacted. Compaction saves space and in some cases CPU time for finding records in the constraints log, but requires additional CPU time for the compaction itself. Using too many compacted pages (thousands) may negatively affect performance.

Disabling transaction back out

By default, transaction back out is enabled for all Model 204 database files.

You can disable transaction back out and lock-pending-updates using the following file parameter settings.

| To disable... | Set... |

|---|---|

| Back out mechanism | FRCVOPT X'08' bit on |

| Lock-pending-updates | FOPT X'02' bit on |

You can specify these settings when the file is created or with the RESET command.

Because lock-pending-updates are required for transaction back out, setting the FOPT X'02' bit on automatically turns on the FRCVOPT X'08' bit.
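The bit settings in the table in this section, and the rule that turning on FOPT X'02' also turns on FRCVOPT X'08', can be expressed as bit arithmetic. This Python sketch only illustrates those two bits; the function names are hypothetical and other FOPT and FRCVOPT bits are ignored.

```python
FRCVOPT_NO_TBO = 0x08  # FRCVOPT X'08' bit on: back out mechanism disabled
FOPT_NO_LPU = 0x02     # FOPT X'02' bit on: lock-pending-updates disabled


def effective_settings(fopt, frcvopt):
    """Return (fopt, frcvopt) after applying the documented dependency."""
    if fopt & FOPT_NO_LPU:
        # Transaction back out requires LPU, so disabling LPU disables TBO too.
        frcvopt |= FRCVOPT_NO_TBO
    return fopt, frcvopt


def tbo_enabled(frcvopt):
    return not (frcvopt & FRCVOPT_NO_TBO)


def lpu_enabled(fopt):
    return not (fopt & FOPT_NO_LPU)
```

The dependency runs one way only: LPU can stay enabled while back out is disabled (`effective_settings(0x00, 0x08)` leaves FOPT unchanged), but disabling LPU always disables back out.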

Disadvantages to disabling transaction back out

The following considerations apply to transaction back out:

- If transaction back out is disabled, you cannot back out updates.

- Transaction back out provides a mechanism for recovery from file-full conditions; that mechanism is lost when back out is disabled.

- You cannot define fields with the UNIQUE and NON-UNIQUE attributes or AT MOST ONE attribute in files that do not have transaction back out enabled.

ROLL BACK facility

The ROLL BACK facility is activated by the ROLL BACK argument of the RESTART command. ROLL BACK processing removes changes applied to files by restoring copies of file pages as they existed before updates, called preimages, from the CHKPOINT data stream. All preimages logged after the last or specified checkpoint are restored.

The ROLL BACK facility, in conjunction with the ROLL FORWARD facility, is used to recover the entire database when:

- Abnormal termination occurs in Online, Batch 204, IFAM1, or IFAM4

  Note: For IFAM1, only the ROLL BACK facility is available; the ROLL FORWARD facility is not supported for IFAM1.

- The operating system or partition abends

ROLL BACK processing

During ROLL BACK processing, the CHKPOINT stream created in the failed run is read as input and is called the RESTART stream. ROLL BACK processing consists of two passes through the RESTART stream.

For maximum efficiency, CCATEMP should have at least 33 pages.

ROLL BACK processing, Pass 1

In Pass 1, ROLL BACK processing scans the RESTART stream from the beginning, looking for the last checkpoint taken in the failed Online or for the checkpoint, if specified, in the RESTART command.

For each file in deferred update mode, the deferred update stream is opened and repositioned at the appropriate checkpoint record.

- The system builds a directory of files opened in deferred update mode or changed after the specified checkpoint. IDs of gaps in recovery information of a particular file (discontinuities) that occurred after the last checkpoint are also noted in the file directory.

  A discontinuity is an event that overrides all other file update events and cannot be rolled across. For example, if you add two new records to a file, close the file, and then issue a CREATE command on that file, it does not matter that new records were added. They were destroyed when the file was created. If you run recovery across this discontinuity, recovery notices the discontinuity and does not apply the two new records to the file. Recovery ignores them as useless.

  The following processes cause discontinuities: CREATE FILE, CREATEG, INITIALIZE, a file updated by a second job, RESET FISTAT, RESTORE, RESTOREG, RESET FRCVOPT, REGENERATE, ROLL BACK, ROLL FORWARD, and system initialization.

- When the end of the checkpoint stream is reached, the files named in the file directory are opened. The NFILES and NDIR parameter settings must be large enough to accommodate these opens.

- After the files and deferred update streams are opened, a list of files that you cannot recover and a list of files that you can roll back are displayed on the operator's console.

- Files that cannot be recovered are listed after the message:

  M204.0145: THE FOLLOWING FILES CANNOT BE RECOVERED

  This table lists the possible reasons:

  | Reason for failure | Meaning |
  |---|---|
  | DEFERRED UPDATE CHKP MISSING | The scan of the deferred update data set did not locate the checkpoint used for ROLL BACK processing. |
  | DEFERRED UPDATE READ ERROR | The scan of the deferred update data set cannot be completed because of a read error. |
  | DEFERRED UPDATES MISSING | The deferred update data set cannot be opened. |
  | MISSING | The file cannot be opened. |
  | ROLL BACK INFORMATION IS OBSOLETE | The file was modified by a Model 204 job after the last update in the run being recovered. |
  | IS ON A READ-ONLY DEVICE | (CMS environment only) The file is on a device for which Model 204 has read-only access. |

- If a list of files that cannot be recovered is displayed, the ERROR clause of the ROLL BACK portion of the RESTART command is examined:

  | Error clause | Meaning |
  |---|---|
  | ERROR CONTINUE | The listed files are ignored and recovery proceeds for other files. |
  | ERROR STOP | The recovery run terminates. Errors can be corrected and recovery run again. |
  | ERROR OPERATOR, or no error clause | The message SHOULD RESTART CONTINUE? is displayed on the operator console. A Yes (Y) response has the same effect as an ERROR CONTINUE clause; a No (N) response has the same effect as an ERROR STOP clause. |

- After the list of files that you can roll back is displayed, you can open any unlisted files for retrieval.
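The ERROR clause handling at the end of Pass 1 reduces to a small decision function. This is a hypothetical sketch: `operator_reply` stands in for the console answer to SHOULD RESTART CONTINUE?, and the string values used for the clause are illustrative.

```python
def restart_decision(unrecoverable_files, error_clause=None, operator_reply=None):
    """Return "continue" or "stop" at the end of ROLL BACK Pass 1.

    error_clause is "CONTINUE", "STOP", "OPERATOR", or None (no clause).
    operator_reply stands in for the answer to SHOULD RESTART CONTINUE?.
    """
    if not unrecoverable_files:
        return "continue"  # the ERROR clause is examined only if files are listed
    if error_clause == "CONTINUE":
        return "continue"  # listed files are ignored
    if error_clause == "STOP":
        return "stop"
    # ERROR OPERATOR or no clause: the operator decides.
    reply = (operator_reply or "").upper()
    if reply == "Y":
        return "continue"
    if reply == "N":
        return "stop"
    raise ValueError("expected a Y or N reply to SHOULD RESTART CONTINUE?")
```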

ROLL BACK processing, Pass 2

In Pass 2, ROLL BACK processing reapplies page images to the files by reading the CHKPOINT stream backwards.

ROLL BACK processing stops at the specified checkpoint and notifies the operator. Files with discontinuities are not rolled back beyond the discontinuity. If ROLL FORWARD processing is not used, the files are closed and made available for general use.
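The two passes of ROLL BACK processing can be sketched in Python under a hypothetical record layout (checkpoint, preimage, and discontinuity entries as tuples). Pass 1 finds the checkpoint and notes each file's last discontinuity; Pass 2 reads the stream backwards, restoring preimages but never crossing a file's discontinuity. Deferred update streams and file opens are not modeled.

```python
def roll_back(stream, pages, checkpoint_id=None):
    """Two-pass roll back over a RESTART stream (hypothetical record layout).

    stream entries, in logging order:
      ("CHKP", checkpoint_id)
      ("PRE", file, page_number, preimage)
      ("DISC", file)
    pages maps (file, page_number) -> current page contents; updated in place.
    Returns {file: stream index at which roll back stopped for that file}.
    """
    # Pass 1: scan forward for the checkpoint (the last one unless specified).
    start = None
    for i, entry in enumerate(stream):
        if entry[0] == "CHKP" and checkpoint_id in (None, entry[1]):
            start = i
    if start is None:
        raise ValueError("checkpoint not found in RESTART stream")
    # File directory: each file stops at the checkpoint or at its last
    # discontinuity after the checkpoint.
    stop_at = {}
    for i in range(start + 1, len(stream)):
        entry = stream[i]
        if entry[0] == "PRE":
            stop_at.setdefault(entry[1], start)
        elif entry[0] == "DISC":
            stop_at[entry[1]] = i
    # Pass 2: read the stream backwards, restoring preimages.
    for i in range(len(stream) - 1, start, -1):
        entry = stream[i]
        if entry[0] == "PRE" and i > stop_at[entry[1]]:
            _, file, page_number, preimage = entry
            pages[(file, page_number)] = preimage
    return stop_at
```

With a stream shaped like the "Checkpoint data set discontinuity" example (a checkpoint, updates to files A and B, a discontinuity for A, then more updates to A), file B is rolled back to the checkpoint while file A stops at its discontinuity.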

ROLL FORWARD facility

If ROLL BACK processing was successful, the ROLL FORWARD facility is activated by the ROLL FORWARD argument of the RESTART command. The CCARF journal stream is opened, and ROLL FORWARD processing reapplies the file updates from this recovery data set to the files rolled back by ROLL BACK processing. CCARF is the journal file, CCAJRNL, from the run being recovered.

The ROLL FORWARD facility is available for Online, Batch 204, and IFAM4 jobs.

RESTART ROLL FORWARD recovery is version specific

ROLL FORWARD recovery is not compatible with Model 204 journals from previous releases. If you attempt ROLL FORWARD processing using a journal created under a previous release, processing is terminated with the following error:

M204.2501: RELEASE INCOMPATABILITY

ROLL BACK processing remains compatible with previous releases. Rocket Software recommends that you back up all files before installing the current release. Also, because the format of all update journal records is now eight bytes larger than in previous releases, the journal may need additional space.

Forcing the journal buffer out at the completion of every update unit (COMMIT) minimizes the amount of data lost in a system crash. An update unit is a sequence of operations allowed to update the database.

After the operator is notified that ROLL FORWARD processing is complete, terminal users can open the recovered files for updating.

Logging ROLL FORWARD processing

To enable ROLL FORWARD logging in the Online, the following requirements must be met:

- CCAJRNL output stream must be present

- SYSOPT setting must include the 128 option

- ROLL FORWARD logging option must be set on the RCVOPT parameter (X'08')

- CHKP and JRIO object modules must be present in the link-edit of the Model 204 program to be run

To control ROLL FORWARD logging on a file-by-file basis, use the FRCVOPT file parameter.
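The Online requirements for ROLL FORWARD logging can be checked mechanically. This hypothetical helper takes the relevant settings as plain values and returns whichever requirements are unmet; it treats SYSOPT and RCVOPT as additive bit options, and per-file FRCVOPT control is not modeled.

```python
SYSOPT_ROLL_FORWARD = 128   # SYSOPT must include the 128 option
RCVOPT_ROLL_FORWARD = 0x08  # RCVOPT X'08': ROLL FORWARD logging option
REQUIRED_MODULES = {"CHKP", "JRIO"}


def missing_rf_requirements(ccajrnl_present, sysopt, rcvopt, linked_modules):
    """List the Online ROLL FORWARD logging requirements that are not met."""
    missing = []
    if not ccajrnl_present:
        missing.append("CCAJRNL output stream")
    if not sysopt & SYSOPT_ROLL_FORWARD:
        missing.append("SYSOPT 128 option")
    if not rcvopt & RCVOPT_ROLL_FORWARD:
        missing.append("RCVOPT X'08'")
    for module in sorted(REQUIRED_MODULES - set(linked_modules)):
        missing.append(f"{module} object module")
    return missing
```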

Message for the Operator

While ROLL FORWARD is processing, the following message is issued:

M204.1992: RECOVERY: PROCESSING ROLL FORWARD BLOCK# blocknumber date-timestamp

The message gives the processing sequence number and date-time stamp. It is issued on the operator's console each time the hour changes in the date-time stamp of the CCARF update records.

You could receive a maximum of 24 such messages in a 24-hour period.
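The 24-message bound follows from counting hour changes between consecutive CCARF records. In this Python sketch, the list position stands in for the block number (a hypothetical simplification), and a message is produced only when a record's hour differs from the previous record's hour.

```python
from datetime import datetime, timedelta


def hour_change_messages(timestamps):
    """Return (block_number, timestamp) for each record that starts a new hour."""
    messages = []
    previous_hour = None
    for block_number, ts in enumerate(timestamps, start=1):
        hour = ts.replace(minute=0, second=0, microsecond=0)
        if previous_hour is not None and hour != previous_hour:
            messages.append((block_number, ts))
        previous_hour = hour
    return messages


if __name__ == "__main__":
    # One record per minute for 24 hours, starting at 00:30.
    start = datetime(2015, 3, 9, 0, 30)
    one_record_per_minute = [start + timedelta(minutes=m) for m in range(24 * 60)]
    print(len(hour_change_messages(one_record_per_minute)))  # 24
```

Even though the records in the usage example touch 25 distinct clock hours, only 24 hour changes occur, so only 24 messages are produced.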

ROLL FORWARD processing

ROLL FORWARD processing consists of one pass of CCARF and performs the following steps:

- Reads the journal stream, CCARF, starting from the ROLL BACK checkpoint. For each file you are recovering, ROLL BACK processing determines the start points for rolling the file forward.

The start point is normally the last checkpoint. However, if a discontinuity is detected for the file between the last checkpoint and the end of CCARF, the start point is just after the last discontinuity record.

- Maintains transaction and constraints logs for each transaction as it is reapplied.

- Builds a Transaction Control Block for each concurrent transaction in the original run to keep track of that transaction's back out and constraints logs. This allows ROLL FORWARD processing to back out transactions that were backed out in the original run.

- Issues messages indicating what actions are taken for all recovered files, as the CCARF data set is processed.

- Reapplies file updates from the starting point in each file.

- Closes recovered files.

- Closes CCARF.

- Takes a new checkpoint and allows new updates to begin.

- Issues the following message:

*** RECOVERY IS NOW COMPLETE ***
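The per-file start point rule (normally the last checkpoint, but just after the last discontinuity if one follows the checkpoint) can be sketched as follows, using a hypothetical journal layout in which each entry is a tuple.

```python
def roll_forward_start(journal, file, checkpoint_index):
    """Index of the first journal entry reapplied for `file`.

    journal entries: ("CHKP", id), ("UPD", file, update), ("DISC", file).
    """
    start = checkpoint_index
    for i in range(checkpoint_index + 1, len(journal)):
        if journal[i] == ("DISC", file):
            start = i  # a later discontinuity moves the start point forward
    return start + 1


def updates_to_reapply(journal, files, checkpoint_index):
    """Collect, per file, the updates reapplied from that file's start point."""
    starts = {f: roll_forward_start(journal, f, checkpoint_index) for f in files}
    reapplied = {f: [] for f in files}
    for i, entry in enumerate(journal):
        if entry[0] == "UPD" and entry[1] in starts and i >= starts[entry[1]]:
            reapplied[entry[1]].append(entry[2])
    return reapplied
```

For a journal containing a checkpoint, updates to files A and B, a discontinuity for A, and further updates to both files, only A's post-discontinuity update is reapplied, while all of B's updates after the checkpoint are reapplied.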

ROLL FORWARD file types

For system recovery purposes, Model 204 files can be categorized according to the processing algorithm applied to determine the stopping point of the ROLL FORWARD processing. Each file type is handled differently during the roll forward phase of recovery. The ROLL FORWARD algorithm is determined by the specified file recovery option, FRCVOPT:

- Roll-forward-all-the-way files (FRCVOPT=X'09')

  Recovery of roll-forward-all-the-way files ignores update unit boundaries. For these files, ROLL FORWARD processing applies all individual updates that have been logged to CCAJRNL. This minimizes the loss of work during ROLL FORWARD processing by sacrificing logical consistency.

- Transaction back out files (FRCVOPT X'08' bit off)

  For transaction back out files, ROLL FORWARD processing initially reapplies all individual updates that have been logged to the journal, just as it does for roll-forward-all-the-way files. When the end of the journal is reached, any incomplete transactions are backed out.

  Because the lock-pending-updates mechanism acquires an exclusive enqueue on records updated by a transaction until the transaction either commits or is backed out, concurrent transactions running against the same files are logically independent of one another.
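The difference between the two file types can be sketched as a replay of one file's journal. The entry layout is hypothetical, and `None` is used to mean "record absent", so the sketch cannot store `None` as a record value. Undoing each incomplete transaction's changes independently is only valid because, as noted above, lock-pending-updates keeps concurrent transactions from updating the same records.

```python
def roll_forward(journal, transaction_back_out=True):
    """Replay one file's journal; entries are ("UPD", txn, recno, value),
    ("COMMIT", txn), or ("BACKOUT", txn)."""
    data = {}
    pending = {}  # txn -> [(recno, previous value or None)], oldest first

    def undo(changes):
        for recno, previous in reversed(changes):
            if previous is None:
                data.pop(recno, None)
            else:
                data[recno] = previous

    for entry in journal:
        kind, txn = entry[0], entry[1]
        if kind == "UPD":
            _, _, recno, value = entry
            pending.setdefault(txn, []).append((recno, data.get(recno)))
            data[recno] = value
        elif kind == "COMMIT":
            pending.pop(txn, None)
        elif kind == "BACKOUT" and transaction_back_out:
            # Back out transactions that were backed out in the original run.
            undo(pending.pop(txn, []))
    if transaction_back_out:
        # End of journal: back out every transaction that never completed.
        for txn in list(pending):
            undo(pending.pop(txn))
    return data
```

With `transaction_back_out=False` (roll-forward-all-the-way), every logged update survives, including those of incomplete transactions; with the default, only committed work remains.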

Journal data set in ROLL FORWARD logging

The journal data set is used as the log for ROLL FORWARD processing. If ROLL FORWARD logging is active for a file (based on the values of the RCVOPT system parameter and the FRCVOPT file parameter), each change made to the file is logged at the time it is made. In addition to the change entries, important events such as checkpoints and discontinuities are also logged on the journal. Along with update unit boundaries, detailed in Update units and transactions, these special markers are used to determine where the process of rolling forward should begin and end for each file.

The journal buffer is written out to disk or tape at the completion of every update unit that corresponds to a User Language request or host language program. This minimizes the amount of ROLL FORWARD data that can be lost during a system crash, and ensures that the commit of a transaction is always reflected on disk, in the CCAJRNL data set, at the time of the commit.

File discontinuities

The ability to share files between two distinct copies of Model 204 can create an operational problem. If correct operating procedures are not followed, the use of checkpoint and roll forward facilities might result in a loss of file integrity under certain circumstances.

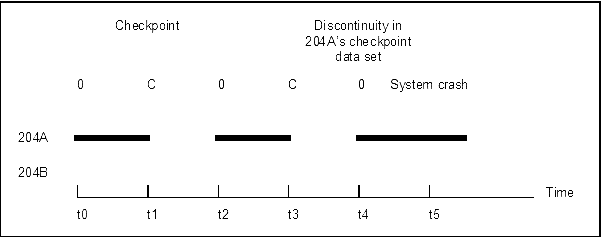

For example, suppose that one file is updated by two or more jobs. In "Checkpoint data set discontinuity," below:

- 204A is an Online job that has file A opened for update at points t0, t2, and t4 on the chart.

- 204B is a batch job submitted to update file A.

Checkpoint data set discontinuity

Suppose that a system crash occurs at t5 and the most recent checkpoint occurred at t1. File A can be rolled back to t4; at this point, physical consistency is guaranteed, because the file was just opened. File A cannot be rolled back any further than t4, because older preimages might partially, but not completely, undo updates from 204B. There is a gap, or discontinuity, in 204A's checkpoint data set that prevents rolling back file A beyond t4.

Resolving discontinuities

To resolve the problem presented by this discontinuity, Model 204 writes a discontinuity record on the checkpoint data set when OPEN processing recognizes that another job has updated the file. Model 204 does not roll back changes to a file across a discontinuity record.

In "Checkpoint data set discontinuity" above, if a crash occurs at t5, Model 204 restarts by processing job 204A's checkpoint data set from beginning to end and then reverses to restore preimages. On this reverse pass, file A is restored to its state at t4; the discontinuity record stops file A from being rolled back to the checkpoint at t1. All other files are rolled back to the checkpoint at t1.

Resolving logical inconsistencies

When files are rolled back to different points, the possibility exists for logical inconsistencies to appear in the database. Model 204 provides an option that prevents a second job (204B in the example) from updating a file that already has been updated by another job that is still running (204A). Through enqueuing, 204A retains shared control of file A even when file A is closed. 204B can open file A for retrievals but not updates. Use the FRCVOPT parameter to indicate whether the file is held or released when it is closed.

How discontinuities occur

Besides the two-job update case just described, a discontinuity is produced by any operation that does not write a complete set of preimages for the pages it updates. Several commands, including CREATE, INITIALIZE, REGENERATE, and RESTORE, cause discontinuities. Some of these commands rewrite the entire file, making preimages impractical, or, in the case of CREATE, impossible. The REGENERATE command logs a discontinuity record, because roll forward logging is automatically turned off during media recovery.

Understanding update units

An update unit is any sequence of operations that is allowed to update one or more files. See Update units and transactions for a complete description of update units. Update units include update operations that can be backed out (back out update units or transactions) and update operations that cannot be backed out. Each update unit is assigned a unique ID by the system. This update ID is written to the audit trail when the update unit starts and ends. See Reporting facilities for the text of the messages issued to the audit trail at these times.

Update unit boundaries

The boundaries of a complete update unit depend on a number of factors: whether or not the update unit can be backed out, the updating commands or statements involved, the kinds of operations and/or situations involved in the update, and special events like hard restarts or request cancellations. A detailed listing of the beginnings and ends of update units is found in Using HLI and batch configurations.

Determining the starting point for ROLL FORWARD processing

Normally, ROLL FORWARD processing begins reapplying updates at the checkpoint selected by ROLL BACK processing. However, if a discontinuity occurred for a file between the last checkpoint and the time of the crash (see File discontinuities), the file cannot be rolled back to the checkpoint. ROLL FORWARD processing cannot reapply any updates to the file between the checkpoint and the discontinuity, although updates that occurred after the discontinuity can be reapplied. Simply stated, ROLL FORWARD processing starts where ROLL BACK processing stopped for each file.
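The per-file starting rule can be sketched as a small function. This is an illustration under stated assumptions, not Model 204 code: the function name `roll_forward_start` is hypothetical, times are modeled as Python `datetime` values, and when several discontinuities follow the checkpoint we assume the most recent one is where ROLL BACK stops for that file.

```python
from datetime import datetime
from typing import Iterable


def roll_forward_start(checkpoint: datetime, discontinuities: Iterable[datetime]) -> datetime:
    """Hypothetical helper: point from which ROLL FORWARD reapplies updates to one file.

    Assumption: if one or more discontinuities occurred after the checkpoint,
    ROLL BACK can only take the file back to the most recent of them, and
    ROLL FORWARD resumes there. Otherwise both start from the checkpoint.
    """
    later = [d for d in discontinuities if d > checkpoint]
    return max(later) if later else checkpoint
```

A discontinuity that precedes the checkpoint has no effect; one between the checkpoint and the crash moves the starting point forward for that file only.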

ROLL FORWARD processing and user hard restarts

Restarts that occur during active transactions cause a request cancellation and can optionally cause a back out. A user hard restart occurs when a serious error is encountered during the processing of a user's request or command. Such errors include physical I/O errors in a file or physical inconsistencies in the database. The user is logged out and all files are closed. Those files are marked physically inconsistent. They cannot be used again until you clear the condition.

Repairing physically inconsistent files

Most of the methods for fixing a physically inconsistent, broken file, such as using the INITIALIZE command or running a batch job, produce a discontinuity. One method, resetting the physically inconsistent flag in FISTAT, actually does not fix the file but does make it available for use, presumably so that its contents can be dumped in some form for reloading. To extract the contents of a broken file successfully, you might need to make further changes to the file to avoid the broken areas.

Update units and hard restarts

A hard restart normally leaves incomplete an update unit that cannot be backed out. (A restart during a transaction can optionally cause the transaction to be backed out.) If the system crashes after a hard restart but before RESTART recovery is initiated, the restarted update unit is used as the ROLL FORWARD stopping point for the files involved. If one or more of the files is cleared by a discontinuity before the system crash, that file is no longer part of the restarted update unit, and nothing is reapplied to it until after the discontinuity. For files that are not cleared, the restarted update unit remains incomplete.

Back outs during ROLL FORWARD processing

For transaction back out files, a transaction can be backed out during ROLL FORWARD processing for two reasons:

- Transactions that are backed out in the original run are also backed out during ROLL FORWARD processing. In the original run, individual updates are logged to the journal as they occur. If the transaction is backed out instead of committed, this is reflected in the journal. During ROLL FORWARD processing, the individual updates are reapplied based on the journal entries logged for them by the original run. If ROLL FORWARD processing encounters a back out record on the journal, it proceeds to back out all the individual updates it reapplied since the transaction began.

- Transactions that are still incomplete (that is, uncommitted at the time of the crash) when the end of the journal is reached are automatically backed out by ROLL FORWARD processing.
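The two back out cases can be illustrated with a toy journal replay. This is a simplified model, not Model 204's implementation: a Python `dict` stands in for the file, the journal entry shapes (`"UPDATE"`, `"COMMIT"`, `"BACKOUT"`) and the function names are hypothetical, and concurrent transactions are assumed not to update the same key. Each update records a compensating entry in a per-transaction back out log, which is discarded on commit and applied in reverse on back out or at end of journal.

```python
_ABSENT = object()  # marks a key that did not exist before an update


def _undo(db: dict, log: list) -> None:
    # Apply compensating updates newest-first.
    for key, old in reversed(log):
        if old is _ABSENT:
            db.pop(key, None)
        else:
            db[key] = old


def replay_journal(journal: list, db: dict) -> set:
    """Reapply journaled updates to db; return IDs of transactions backed out at end of journal.

    Hypothetical entry shapes: ("UPDATE", txn, key, value), ("COMMIT", txn), ("BACKOUT", txn).
    """
    backout_logs = {}  # txn -> [(key, previous value), ...]
    for entry in journal:
        kind, txn = entry[0], entry[1]
        if kind == "UPDATE":
            _, _, key, value = entry
            backout_logs.setdefault(txn, []).append((key, db.get(key, _ABSENT)))
            db[key] = value
        elif kind == "COMMIT":
            backout_logs.pop(txn, None)  # committed: its back out log is no longer needed
        elif kind == "BACKOUT":
            _undo(db, backout_logs.pop(txn, []))  # backed out in the original run
        else:
            raise ValueError(f"unknown journal entry: {entry!r}")
    incomplete = set(backout_logs)  # still uncommitted at end of journal
    for txn in reversed(list(backout_logs)):
        _undo(db, backout_logs[txn])
    return incomplete
```

In this model, the logs held in `backout_logs` play the role that the CCATEMP back out logs play during Pass 2.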

Logging ROLL FORWARD processing

To accomplish transaction back outs during ROLL FORWARD processing, Model 204 maintains transaction back out and constraint logs for each active transaction during Pass 2 of ROLL FORWARD processing. ROLL FORWARD transaction back out and constraint logs are built in CCATEMP, just as they were in the original run. Thus, ROLL FORWARD processing requires as much CCATEMP space for transaction back out and constraint logging as the original run. Insufficient CCATEMP space terminates recovery.

In addition, ROLL FORWARD processing builds a special transaction control block for each possible concurrent transaction in the original run to keep track of each transaction's transaction back out and constraint log. This requires that additional storage be allocated during Pass 2 of ROLL FORWARD processing. Add this storage to SPCORE for recovery runs. The formula for calculating this additional storage is:

16 + NUSERS * (48 + (NFILES/8 rounded up to the nearest multiple of 4)) = bytes of additional storage required

Where:

NUSERS and NFILES are taken from the original run.

For example, recovery of a Model 204 run with NUSERS=100 and NFILES=50 requires allocation of the following additional bytes of storage during ROLL FORWARD, Pass 2:

16 + 100 * (48 + 8) = 5616, where 8 is 50/8 (6.25) rounded up to the nearest multiple of 4.
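The calculation can be checked with a short function. It is a sketch, and its name is hypothetical: it multiplies NUSERS by both the 48-byte term and the rounded NFILES/8 term, which is the reading that reproduces the 5616-byte worked example. NUSERS and NFILES must come from the original run.

```python
def rf_pass2_extra_bytes(nusers: int, nfiles: int) -> int:
    """Hypothetical helper: extra SPCORE bytes for ROLL FORWARD Pass 2 control blocks."""
    # NFILES/8 rounded up to the nearest multiple of 4.
    # For non-negative integers, ceil(ceil(n/8)/4)*4 == ceil(n/32)*4.
    file_bytes = (nfiles + 31) // 32 * 4
    return 16 + nusers * (48 + file_bytes)


print(rf_pass2_extra_bytes(100, 50))  # 5616
```

Integer arithmetic is used instead of floating-point division so the rounding step is exact.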

RESTART recovery considerations for sub-transaction checkpoints

If you are recovering a job that was using sub-transaction checkpoints, the RESTART process needs all the checkpoint information from that job. Therefore, in addition to the RESTART data set definition for the CHKPOINT data set from the failed job, you must add a RESTARTS data set definition that points to the CHKPNTS data set from the failed job.

If you run RESTART recovery as a single-user job step ahead of your Online, do not add CPTYPE=1. The presence of a RESTARTS data set indicates that a CPTYPE=1 job is being recovered.

Considering Roll Back and Roll Forward

- ROLL BACK

When RESTART rolls back to a sub-transaction checkpoint, it then backs out any updates that had not been committed when the sub-transaction checkpoint was taken.

- ROLL FORWARD

The roll forward process is the same as rolling forward from a transaction checkpoint.

- Running ROLL BACK and ROLL FORWARD as separate steps.

You can run a RESTART ROLL BACK and subsequently request just a RESTART ROLL FORWARD. However, when recovering to a sub-transaction checkpoint, the ROLL BACK process always occurs, even if you previously rolled back.

For RESTART ROLL BACK only processing

RESTART ROLL BACK processing requires RCVOPT=8, even if ROLL FORWARD is not specified. Because ROLL BACK-only processing to a sub-transaction checkpoint is available, this type of recovery requires journaling of information for subsequent secondary recovery, if needed. In addition, GDGs are supported for CHKPOINT during recovery, which allows recovery checkpoints of unlimited size.

If no CHKPOINT data set is defined for the job when RESTART ROLL BACK processing tries to open it, the job displays the following message and waits for a response from the operator:

OO /// UNABLE TO OPEN CHKPOINT, REPLY RETRY OR CANCEL

Restarting after a system failure

To restart Model 204 or an IFAM4 application after a system failure, issue a RESTART command as the first command immediately following the last user (IODEV) parameter line in the CCAIN input stream. The ROLL BACK and ROLL FORWARD arguments activate the ROLL BACK and ROLL FORWARD facilities used for file recovery.

The following considerations apply to using the RESTART command:

- The checkpoint ID of the TO clause in the RESTART command must correspond exactly to the checkpoint ID displayed in a CHECKPOINT COMPLETED message from the Model 204 run being recovered. The option to specify the checkpoint is useful in an environment in which checkpoints are coordinated with Host Language Interface program checkpoints.

- Files broken as a consequence of a user hard restart can be fixed by rolling back to a checkpoint prior to the current RESTART command.

If the ROLL FORWARD option is also requested, the recovered file contains all the updates completed before the user restart. This method of fixing files requires running RESTART recovery after the end of the run that broke the files and before the files are updated by any other run.

- To run the ROLL FORWARD facility, sufficient space must be available in spare core, SPCORE, for special control blocks that are allocated when ROLL FORWARD processing begins and freed when it is completed.

One other block is allocated, with a size given by:

16 + (36 * NUSERS)

where the value of NUSERS is from the run that crashed, not from the recovery run.
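Because the value must come from the crashed run, it can be worth computing it explicitly when sizing SPCORE for a single-user recovery step. The function name below is hypothetical; it only restates the formula above.

```python
def rf_extra_block_bytes(nusers_crashed_run: int) -> int:
    """Hypothetical helper: size of the additional block, 16 + (36 * NUSERS).

    NUSERS is taken from the run that crashed, not from the recovery run.
    """
    return 16 + 36 * nusers_crashed_run


# The Online that crashed ran with NUSERS=100; the recovery step's own NUSERS does not matter.
print(rf_extra_block_bytes(100))  # 3616
```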

Reporting recovery status

The final status of each file that participated in recovery is reported at the conclusion of recovery processing and whenever you issue the STATUS command. The effects of the most recent recovery on an individual file are reported when the file is opened with update privileges.

Reporting facilities

Update unit ID numbers and audit trail messages help you determine exactly which updates were being made when a system crash occurred and which updates were not reapplied by RESTART recovery.

UPDTID user parameter

The view-only user parameter UPDTID lets applications determine the current update unit number. The update unit number is a sequential number that uniquely identifies each update unit in a Model 204 run; it is incremented for each update unit. While an update unit is active, UPDTID contains its update ID; otherwise it contains zero. Applications can use this parameter to keep track of the most recent update unit started by each user.

Audit trail messages

At the beginning and end of each update unit, the following messages are written to the audit trail:

*** M204.0173: START OF UPDATE n AT hh:mm:ss.th

and:

*** M204.0172: END OF UPDATE n AT hh:mm:ss.th

Message M204.0173 is issued for every update unit. The default destination of both messages is the audit trail. The system manager can use the MSGCTL command to change the destination of these messages.
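Pairing these two messages shows which update units were still in progress when a run ended. The sketch below is hypothetical tooling, not part of Model 204: it assumes the audit trail has been extracted as text lines, searches rather than anchors the patterns because audit lines may carry a prefix, and keeps the time as text.

```python
import re

START_RE = re.compile(r"M204\.0173: START OF UPDATE (\d+) AT (\S+)")
END_RE = re.compile(r"M204\.0172: END OF UPDATE (\d+) AT (\S+)")


def unfinished_updates(audit_lines):
    """Map update ID -> start time for update units with a START but no END message."""
    open_units = {}
    for line in audit_lines:
        if m := START_RE.search(line):
            open_units[int(m.group(1))] = m.group(2)
        elif m := END_RE.search(line):
            open_units.pop(int(m.group(1)), None)
    return open_units
```

If MSGCTL has routed these messages away from the audit trail, the input must come from wherever they were sent instead.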

Reporting ROLL FORWARD processing

Model 204 lists each update unit that ROLL FORWARD processing automatically backed out or partially reapplied because the update unit was incomplete when the end of the journal was reached. The message has the following format:

*** M204.1200: ROLL FORWARD {BACKED OUT | PARTIALLY REAPPLIED} UPDATE n FOR THE FOLLOWING FILES:

Where:

n is the update ID.

A second message lists the files updated by the update unit:

*** M204.1214: filename

Final status of files affected by RESTART recovery

For files that are rolled back but not rolled forward, a message in the following format is issued:

*** M204.0621: ROLLED BACK TO {CHECKPOINT | DISCONTINUITY} OF day month

For each file rolled forward, either or both of the following messages are issued:

*** M204.0622: UPDATE n OF day month year hh:mm:ss.th WAS HIGHEST UPDATE FULLY REAPPLIED TO filename BY [ROLL FORWARD | REGENERATE]

*** M204.0622: UPDATE n OF day month year hh:mm:ss.th WAS LOWEST UPDATE {BACKED OUT | PARTIALLY REAPPLIED} TO filename BY [ROLL FORWARD | REGENERATE]

Where:

n is the update ID.

Note: For update units backed out or partially reapplied, only the lowest update ID is reported. A complete list of such update units for a particular file can be obtained from the M204.1200 messages described above. Those messages are available only in the CCAAUDIT of the recovery run.
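Building that per-file list from the recovery run's CCAAUDIT can be scripted. The following is a sketch under assumptions: the function name is hypothetical, and it assumes the M204.1214 file-name lines follow their M204.1200 header directly, so any other message ends the current list.

```python
import re
from collections import defaultdict

HEADER_RE = re.compile(
    r"M204\.1200: ROLL FORWARD (BACKED OUT|PARTIALLY REAPPLIED) UPDATE (\d+) FOR THE FOLLOWING FILES:"
)
FILE_RE = re.compile(r"M204\.1214: (\S+)")


def incomplete_updates_by_file(audit_lines):
    """Map file name -> [(update ID, action), ...] from M204.1200/M204.1214 messages."""
    by_file = defaultdict(list)
    current = None  # (update ID, action) from the most recent M204.1200 header
    for line in audit_lines:
        if m := HEADER_RE.search(line):
            current = (int(m.group(2)), m.group(1))
        elif current and (m := FILE_RE.search(line)):
            by_file[m.group(1)].append(current)
        else:
            current = None  # any other message ends the file list
    return dict(by_file)
```

The lowest update ID in each file's list should match the one reported for that file in the M204.0622 LOWEST UPDATE message.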

Opening files and their status reports

At the end of recovery, the X'10' bit of the FISTAT parameter is set to indicate that the file was recovered. Subsequently, when you open a file, messages report the effects of the most recent RESTART recovery, until you reset FISTAT to X'00'. The status reported during open processing is as follows:

*** M204.1203: filename WAS LAST UPDATED ON day month year hh:mm:ss.th

*** M204.1238: RECOVERY OF filename WAS LAST REQUIRED ON day month year hh:mm:ss.th

*** M204.0621: ROLL BACK TO {CHECKPOINT | DISCONTINUITY} OF day month year hh:mm:ss.th

or:

*** M204.0622: UPDATE n OF day month year hh:mm:ss.th WAS {HIGHEST | LOWEST} UPDATE {FULLY REAPPLIED | BACKED OUT | PARTIALLY REAPPLIED} TO filename BY ROLL FORWARD

Suppressing status reporting

You can suppress recovery status messages when files are opened by using the RESET command to reset the X'10' bit of the FISTAT file parameter to zero.
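The bit arithmetic behind this is shown below, modeling FISTAT as a one-byte integer. This is only an illustration with hypothetical names: in Model 204 the change itself is made with the RESET command. Clearing only X'10' leaves any other FISTAT bits as they were, whereas resetting FISTAT to X'00' clears them all.

```python
FISTAT_RECOVERED = 0x10  # X'10': set at the end of recovery


def recovery_reported_on_open(fistat: int) -> bool:
    """True if open processing will report the most recent recovery."""
    return bool(fistat & FISTAT_RECOVERED)


def without_recovered_bit(fistat: int) -> int:
    """FISTAT value with only the X'10' bit cleared; other bits are kept."""
    return fistat & ~FISTAT_RECOVERED & 0xFF
```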

Requesting status reports

Use the STATUS command to obtain reports on the recovery status of a file in the same job that executes the RESTART or REGENERATE command.

The command is issued in the following form and applies to all operating systems:

STATUS filename

where filename identifies a file opened during the current run by a user or a RESTART command.

A user with system manager or system administrator privileges can omit the file name to list status information for all files opened during the run.

One or two lines of information are listed for each file, in the form:

filename: current-status RESTART-status

Where:

- current-status indicates special conditions that can affect discontinuities and future recovery of the file.

The values of current status are CLOSED, DEFERRED, ENQUEUED, OPEN, and UPDATED.

- RESTART-status indicates a file that participated in recovery in the current run.

The values of RESTART-status are listed in the following table:

RESTART command status values

| Status | Description |
|---|---|
| BEING RECOVERED | The file is in the recovery process when the STATUS command is issued. |
| NOT RECOVERED | The file cannot be opened until the RESTART command ends. One of the following reasons is given: |
| DEFERRED UPDATE CHKP MISSING | The scan of the deferred update stream did not locate the checkpoint used for ROLL BACK processing. |
| DEFERRED UPDATE READ ERROR | The scan of the deferred update stream was not completed because of a read error. |
| DEFERRED UPDATES MISSING | The deferred update stream could not be opened. |
| MISSING | The file could not be opened. |
| OBSOLETE | The file was updated by a Model 204 job after the last update in the run being recovered. |
| IS ON A READ-ONLY DEVICE | The file is on a device for which Model 204 has read-only access. |
| ROLLED BACK TO CHECKPOINT... | The file was rolled back to the checkpoint taken at the given date and time. The file was not rolled forward. |
| ROLLED BACK TO DISCONTINUITY... | The file was rolled back to the discontinuity occurring at the given date and time. The file was not rolled forward. |
| ROLLED FORWARD TO UPDATE... | The file was rolled forward. The last update reapplied by roll forward had the specified ID and originally ended at the given date and time. |
| REGENERATED TO CHECKPOINT... | The file was recovered using media recovery (REGENERATE). Updates were reapplied up to the time of the checkpoint given. |
| REGENERATED TO UPDATE... | The file was recovered using media recovery (REGENERATE). Updates were reapplied up to and including the specified update. |
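A job that scans STATUS output could split each report into its parts and flag files that were not recovered. This is a hypothetical sketch: it assumes each file's report fits on one line in exactly the form filename: current-status RESTART-status (the two-line case is not handled), and the sample line in the usage note is made up.

```python
CURRENT_STATUSES = ("CLOSED", "DEFERRED", "ENQUEUED", "OPEN", "UPDATED")


def parse_status_line(line: str) -> dict:
    """Split 'filename: current-status RESTART-status' into its parts."""
    name, _, rest = line.partition(":")
    rest = rest.strip()
    current = None
    for word in CURRENT_STATUSES:
        if rest == word or rest.startswith(word + " "):
            current, rest = word, rest[len(word):].strip()
            break
    return {
        "file": name.strip(),
        "current": current,
        "restart": rest or None,
        "needs_attention": rest.startswith("NOT RECOVERED"),
    }
```

For example, `parse_status_line("VENDORS: NOT RECOVERED MISSING")` flags the file as needing attention before the Online is opened to users.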

Recovery data sets and job control

Data sets required for RESTART recovery

The data sets required for z/OS, z/VM, and z/VSE for RESTART recovery are listed in the following table:

| Model 204 file name | Required... |
|---|---|
| CCAJRNL | When running RESTART recovery as a separate job step (recommended), CCAJRNL is not required, unless FRCVOPT=8. When running RESTART recovery in the same step that brings up the Online (not recommended), CCAJRNL is required for logging all updates (RCVOPT=8) that will occur in the Online. |
| CCARF | When restarting the system. For ROLL FORWARD processing, the CCARF stream must be the same as the CCAJRNL stream from the run being recovered. |
| CHKPOINT | For taking checkpoints (RCVOPT=1) and for logging preimages of updated file pages. |
| RESTART | When restarting the system. For ROLL BACK processing, the RESTART stream must be the same as the CHKPOINT stream of the run being recovered. (For z/VSE only) The RESTART file is used as a direct access file. DLBL code DA must be specified. |

Sample z/OS JCL for a recovery run

The JCL for the original run specifies the following data sets:

    //CHKPOINT DD DSN=M204.CHKP1,DISP=OLD
    //CCAJRNL DD DSN=M204.JRNL1,DISP=OLD

The JCL of the recovery run with roll forward is as follows:

    //RESTART DD DSN=M204.CHKP1,DISP=OLD
    //CCARF DD DSN=M204.JRNL1,DISP=OLD
    //CHKPOINT DD DSN=M204.CHKP2,DISP=OLD
    //CCAJRNL DD DSN=M204.JRNL2,DISP=OLD

Note: When performing a RESTART recovery, the RESTART and CCARF streams must be provided as input to an ONLINE or BATCH204 configuration.

The RESTART stream is the CHKPOINT stream from the run being recovered. Although created as a sequential output file, the RESTART stream is treated as a direct access file if on disk.

You must provide a new CHKPOINT stream for checkpoint output generated during the restart process. CCAJRNL is optional in a RESTART-only job step with NUSERS=1.

Sample z/VSE JCL for recovery

The following considerations apply to the required JCL statements in a z/VSE environment:

- If checkpoint and journal data sets are disk resident, they are used as sequential output files that do not require allocation with the ALLOCATE utility.

- You must provide DLBL and EXTENT statements for any recovery or restart streams that are disk resident.

- ASSGN statements might be necessary.

- A TLBL statement is required instead of DLBL and EXTENT statements if magnetic tape is substituted for a disk file:

  - The DOS file name specified on the TLBL statement must take the form of a programmer logical unit number (SYSnnn) and must be the same as the programmer logical unit number on the ASSGN statement for the tape drive used as the input or output device.

  - You must specify the programmer logical unit number in the FILENAME parameter of a DEFINE DATASET command for the corresponding Model 204 internal file name.

  - The DEFINE DATASET commands for recovery or restart files on tape must precede the User 0 parameter line in the CCAIN input stream. Each file must be referred to by its Model 204 internal name.

- When recovering Model 204 with RESTART processing, you must provide the RESTART and CCARF streams as input to an ONLINE or BATCH204 configuration.

- The RESTART stream is a CHKPNT stream from the run being recovered. Although created as a sequential output file, the RESTART stream is treated as a direct access file if on disk.

- You must provide a new CHKPNT stream for checkpoint output generated during the restart process. CCAJRNL is optional in a RESTART-only job step with NUSERS=1.

- The lack of a DLBL statement with DA specified in the CODES parameter results in an error message from z/VSE during the processing of the RESTART command.

- The following message results from treating the CHKPNT stream as a direct access file when it is used as input to the restart process:

M204.0139: EOF ASSUMED IN FIRST PASS OF RESTART DATASET

You can ignore the message.

z/VSE example 1: checkpoint and journal files on disk (FBA)

The numbers of blocks and tracks used in this example are arbitrary values.

    // JOB MODEL204
    .
    .
    .
    // DLBL CHKPNT,'MODEL204.CHKPOINT.FILE',99/365,SD
    // EXTENT SYS021,SYSWK1,,,1000,13000
    // ASSGN SYS021,DISK,VOL=SYSWK1,SHR
    // DLBL CCAJRNL,'MODEL204.JOURNAL.FILE.1',99/365,SD
    // EXTENT SYS021,SYSWK1,,,14000,13000
    .
    .
    .
    // EXEC ONLINE,SIZE=AUTO
    PAGESZ=6184,RCVOPT=9,...
    .
    .
    /*
    /&

z/VSE example 2: checkpoint and journal files on tape

    // JOB MODEL204
    .
    .
    // TLBL SYS041,'M204.CHKPOINT',99/365
    // ASSGN SYS041,TAPE
    // TLBL SYS040,'M204.JOURNAL',99/365
    // ASSGN SYS040,TAPE
    .
    .
    // EXEC ONLINE,SIZE=AUTO
    DEFINE DATASET CCAJRNL WITH SCOPE=SYSTEM FILENAME=SYS040
    DEFINE DATASET CHKPNT WITH SCOPE=SYSTEM FILENAME=SYS041
    PAGESZ=6184,RCVOPT=9,...
    .
    .
    /*
    /&

z/VSE example 3: recovery-restart from disk

In the following example, all files are on disk. The checkpoint, journal, and restart streams are on FBA devices. The ROLL FORWARD stream is on a CKD device. The number of blocks and tracks are arbitrary values.

    // JOB MODEL204 RESTART
    .
    .
    // DLBL RESTART,'MODEL204.CHKPOINT.FILE',,DA
    // EXTENT SYS021,SYSWK1,,,1000,13000
    // ASSGN SYS021,DISK,VOL=SYSWK1,SHR
    // DLBL CHKPNT,'MODEL204.CHKPOINT.FILE.2',99/365,SD
    // EXTENT SYS023,SYSWK2,,,2000,13000
    // ASSGN SYS023,DISK,VOL=SYSWK3,SHR
    // DLBL CCAJRNL,'MODEL204.JOURNAL.FILE.3',99/365,SD
    // EXTENT SYS023,SYSWK3,,,13000,13000
    // DLBL CCARF,'MODEL204.JOURNAL.FILE.2',99/365,SD
    // EXTENT SYS022,SYSWK2,,,30,300
    // ASSGN SYS022,DISK,VOL=SYSWK2,SHR
    .
    .
    // EXEC ONLINE,SIZE=AUTO
    PAGESZ=6184,RCVOPT=9,...
    .
    .
    RESTART ROLL BACK ERROR STOP ROLL FORWARD
    /*
    /&

z/VSE example 4: recovery-restart from tape

The following example illustrates checkpoint and journal streams on disk, and restart and roll forward streams on magnetic tape. Checkpoint and journal streams are on FBA devices.

    // JOB MODEL204
    .
    .
    // DLBL CHKPNT,'MODEL204.CHKPOINT.FILE',99/365,SD
    // EXTENT SYS021,SYSWK1,,,1000,13000
    // ASSGN SYS021,DISK,VOL=SYSWK1,SHR
    // DLBL CCAJRNL,'MODEL204.JOURNAL.FILE.1',99/365,SD
    // EXTENT SYS023,SYSWK2,,,11000,13000
    // ASSGN SYS023,DISK,VOL=SYSWK3,SHR
    // TLBL SYS040,'M204.JOURNAL.2'
    // ASSGN SYS040,TAPE
    // TLBL SYS041,'M204.CHKPOINT'
    // ASSGN SYS041,TAPE
    .
    .
    // EXEC ONLINE,SIZE=AUTO
    DEFINE DATASET CCARF WITH SCOPE=SYSTEM FILENAME=SYS040
    DEFINE DATASET RESTART WITH SCOPE=SYSTEM FILENAME=SYS041
    PAGESZ=6184,RCVOPT=9,...
    .
    .
    RESTART ROLL BACK ERROR STOP ROLL FORWARD
    /*
    /&

Note: Model 204 files for which the DEFINE DATASET command was used to relate the Model 204 internal file name to a z/VSE file name can be recovered with the RESTART facility, only if the DEFINE DATASET command precedes the User 0 parameter line in the CCAIN input stream.

Statements required for z/VM recovery

Use the following FILEDEF statements in the Online run for which you want to provide recovery:

    FILEDEF CHKPOINT mode DSN M204 chkp1
    FILEDEF CCAJRNL mode DSN M204 jrnl1

The following FILEDEF statements are required for a recovery run requesting ROLL BACK and ROLL FORWARD processing:

    FILEDEF RESTART mode DSN m204 chkp1
    FILEDEF CCARF mode DSN m204 jrnl1
    FILEDEF CHKPOINT mode DSN m204 chkp2
    FILEDEF CCAJRNL mode DSN m204 jrnl2

The following considerations apply:

- Do not store CCAJRNL and CHKPOINT on CMS minidisks. If the service machine crashes, CMS data sets are lost.

- Because CMS does not support read-backward from tape, you cannot use a tape checkpoint data set as the restart data set during recovery.

- Checkpoint and journal data set sequencing in the ONLINE EXEC can allow for multiple generations of recovery data sets. Modify FILEDEFs for journal and checkpoint. Add FILEDEFs for the old journal and checkpoint, as shown in the following example.

z/VM recovery examples

- The statements below first determine the last set of CHKPOINT and CCAJRNL files used and then increment the next set by 1.

    LISTFILE RESTART SEQ* A (EXEC
    EXEC CMS &STACK
    &READ VARS &FN &FT &FM
    &SEQ=&&SUBSTR &FT 4

- The following statements calculate the next sequence number, assuming five sets of recovery data sets:

    &SEQX=&SEQ
    &SEQ =&SEQ+1
    &IF &SEQ GT 5 &SEQ=1

- This statement creates a recovery control data set from the service machine:

XEDIT RESTART SEQ0

- Insert this line and file the data set:

*** DO NOT DELETE THIS DATA SET, IT IS ESSENTIAL TO MODEL 204 RECOVERY

- Rename the control file for the next run and enter the FILEDEFs, as follows:

    RENAME &FN &FT &FM = SEQ &SEQ
    FILEDEF CCAJRNL M DSN M204 CCAJRNL SEQ&SEQ
    FILEDEF CCARF M DSN M204 CCAJRNL SEQ&SEQX
    FILEDEF CHKPOINT M DSN M204 CHKPOINT SEQ&SEQ
    FILEDEF RESTART M DSN M204 CHKPOINT SEQ&SEQX
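The generation rotation performed by the EXEC statements can be modeled outside CMS. This is a sketch that mirrors the logic above rather than a replacement for the EXEC, and the function names are hypothetical. It assumes five generations and the naming pattern used in the FILEDEFs. The new run writes generation SEQ, while the previous generation (SEQX) becomes the CCARF and RESTART input for a possible recovery.

```python
from typing import List


def next_sequence(seq: int, generations: int = 5) -> int:
    """Mirror of &SEQ = &SEQ + 1, wrapping back to 1 after the last generation."""
    seq += 1
    return 1 if seq > generations else seq


def recovery_filedefs(last_seq: int, generations: int = 5) -> List[str]:
    """FILEDEFs for the next run: new CCAJRNL/CHKPOINT, previous ones as CCARF/RESTART."""
    seq, seqx = next_sequence(last_seq, generations), last_seq
    return [
        f"FILEDEF CCAJRNL M DSN M204 CCAJRNL SEQ{seq}",
        f"FILEDEF CCARF M DSN M204 CCAJRNL SEQ{seqx}",
        f"FILEDEF CHKPOINT M DSN M204 CHKPOINT SEQ{seq}",
        f"FILEDEF RESTART M DSN M204 CHKPOINT SEQ{seqx}",
    ]
```

Starting from the RESTART SEQ0 control file, the first run writes generation 1. After generation 5, the cycle wraps back to 1.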

Handling recovery failures

Recovering from failure during recovery is possible only if the cause of the original failure is corrected. A serious error encountered during RESTART recovery results in a message describing the error, followed by a message indicating that the run is aborted.

Determining the cause of the error

When a recovery run aborts, the cause of the error can be determined by examining the job step return code and messages written to CCAAUDIT:

- If the RESTART command does not complete normally and the Model 204 run stays up, the return code is 52.

- If the Model 204 run is terminated during evaluation of RESTART recovery, the job abends with a code of 999.

Once the cause of the error is eliminated, the recovery job can be run.

Correcting errors

Use the following strategies to correct errors:

- If the error is an I/O error on either the RESTART or CCARF recovery streams, use IEBGENER or a similar sequential copy utility to copy the affected stream to another volume. Then rerun recovery using the copy.

- If the problem cannot be corrected, but involves only one file, remove the file from the recovery run and recover it separately.

Automated secondary recovery: reuse JCL

You do not need to alter your JCL when you run RESTART recovery multiple times. Occasionally, when RESTART processing is underway, it might be interrupted:

- During ROLL BACK processing

- During ROLL FORWARD processing

If the interruption occurs:

- During ROLL BACK processing, you resubmit the job.

- During ROLL FORWARD processing, you resubmit the job. Make no changes to the RESTART or CHKPOINT data sets.

Model 204 determines whether this is a primary or secondary recovery run and uses the appropriate file: RESTART or CHKPOINT for ROLL BACK recovery.

Note special requirement for z/VSE: To take advantage of the automation of secondary recovery, you must include CHKPNTD in your primary recovery job. CHKPNTD uses the same data set name as CHKPNT, but specifies DA rather than SD. Specify CCAJRNL, CCARF, and RESTART as always. For example:

    // DLBL CHKPNTD,MODEL204.CHKPOINT.FILE.2,0,DA
    // EXTENT SYS023,SYSWK2,,,2000,13000
    // DLBL CHKPNT,MODEL204.CHKPOINT.FILE.2,0,SD
    // EXTENT SYS023,SYSWK2,,,2000,13000
    // ASSGN SYS023,DISK,VOL=SYSWK3,SHR

If you do not include CHKPNTD, you must run secondary recovery.

CHKPNTD data set under z/VSE

When a CHKPNTD data set is defined to take advantage of the automation of secondary recovery, Model 204 can verify whether or not the CHKPNT data set is large enough for ROLL FORWARD processing.

If the CHKPNT data set is too small, Model 204 issues the following error message and terminates before any actual recovery processing begins:

M204.2605: CHKPOINT TOO SMALL FOR ROLL FORWARD - number1 BLOCKS REQUIRED; number2 FOUND

Sizing the checkpoint data sets correctly

For z/OS sites, Model 204 verifies that the new CHKPOINT data set is large enough to contain all the information that needs to be written to the CHKPOINT file during ROLL FORWARD processing. If the new CHKPOINT data set is too small, Model 204 issues the following error message and terminates before any actual recovery processing begins:

M204.2605: CHKPOINT TOO SMALL FOR ROLL FORWARD - number1 BLOCKS REQUIRED; number2 FOUND

The CHKPOINT data set must also be a single-volume data set due to BSAM limitations regarding read-backwards.

If you use sub-transaction checkpoints, as well as transaction checkpoints, define the CHKPNTS data set to match the CHKPOINT data set.

Rerunning RESTART recovery after a successful recovery

If, for any reason, you want to force a rerun of your successful recovery job, you must define a new CHKPOINT data set. If you rerun your recovery job without a new CHKPOINT data set, recovery is bypassed and the following message is displayed:

M204.0143: NO FILES CHANGED AFTER LAST CP, RESTART BYPASSED

ROLL BACK processing

The ROLL BACK process ends with a checkpoint.

When ROLL BACK processing is completed, the following messages are displayed:

M204.0158: END OF ROLLBACK

M204.0843: CHECKPOINT COMPLETED ON yy.ddd hh:mm:ss.th

CHKPOINT data set required

Because ROLL BACK processing ends with a checkpoint, the CHKPOINT data set is required for any RESTART recovery processing: the ROLL BACK facility needs it to write the end-of-processing ROLL BACK checkpoint.

If you do not define a CHKPOINT data set, the following message is issued:

M204.1300: RESTART COMMAND REQUIRES CHECKPOINT LOGGING - RUN TERMINATED

Operational changes to ROLL FORWARD processing

Tracking the application of updates

As the CCARF data set is processed by ROLL FORWARD, message M204.1992 is printed each time a new journal block is read whose beginning date-time stamp has an hour that is one hour (or more) greater than the hour in the last M204.1992 message printed. For example:

M204.1992: RECOVERY: PROCESSING ROLL FORWARD BLOCK# 0000001B 01.235 16:31:32.43

M204.1992: RECOVERY: PROCESSING ROLL FORWARD BLOCK# 00001C57 01.235 17:00:23.34

M204.1992: RECOVERY: PROCESSING ROLL FORWARD BLOCK# 00002F05 01.235 18:00:12.38

These messages are intended to provide an indication that ROLL FORWARD processing is progressing and help you estimate when recovery will complete. The ROLL FORWARD BLOCK# is the sequential number (from the beginning of CCARF) of the journal block read at that point during ROLL FORWARD processing.
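The throttling rule can be reproduced to see when a message is expected. This sketch only models the rule and does not read a real CCARF data set. Its assumptions are: the input is a hypothetical list of (block number, block start time) pairs in CCARF order, the first block processed always produces a message (as the first sample line suggests), and hours are compared on timestamps truncated to the hour, so a day boundary is also handled.

```python
from datetime import datetime, timedelta


def _stamp(ts: datetime) -> str:
    # yy.ddd hh:mm:ss.th, where th is hundredths of a second
    return f"{ts:%y}.{ts.timetuple().tm_yday:03d} {ts:%H:%M:%S}.{ts.microsecond // 10000:02d}"


def progress_messages(blocks):
    """Return the M204.1992-style lines for (block number, block start time) pairs."""
    messages, last_hour = [], None
    for block_no, ts in blocks:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        if last_hour is None or hour - last_hour >= timedelta(hours=1):
            messages.append(
                f"M204.1992: RECOVERY: PROCESSING ROLL FORWARD BLOCK# {block_no:08X} {_stamp(ts)}"
            )
            last_hour = hour
    return messages
```

Given the blocks from the example plus intermediate blocks at 16:59 and 17:59, the function returns exactly the three sample lines. BLOCK# is printed in hexadecimal, so 00001C57 is block 7255.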

ROLL FORWARD processing can be run separately

ROLL FORWARD processing can be invoked subsequent to a successful ROLL BACK process in a separate job or job step by issuing the following command:

RESTART ROLL FORWARD

The following message does not indicate that the full ROLL BACK processing is occurring; this message indicates that the correct starting point for ROLL FORWARD processing is being located.

M204.2512: ROLL BACK WILL USE THE FOLLOWING dataset: RESTART | CHKPOINT

If Model 204 detects that a successful ROLL BACK process did not occur, it forces a full ROLL BACK process and all the standard ROLL BACK messages are displayed.

Setting RCVOPT when running ROLL BACK and ROLL FORWARD separately

When running ROLL BACK-only, Rocket Software recommends setting the RCVOPT parameter to 0. If you then run a subsequent ROLL FORWARD-only step, ROLL BACK processing is suppressed. If you do run ROLL BACK-only with an RCVOPT setting that includes X'01', do not set CPMAX to 0 or 1.

- If you run ROLL BACK-only with an RCVOPT setting that does include X'01' and the CPMAX parameter is set to 0 or 1, a subsequent ROLL FORWARD-only request must process ROLL BACK again to find the correct checkpoint for ROLL FORWARD processing to locate and apply the ROLL FORWARD updates.

- If in your ROLL BACK-only step RCVOPT included X'01' and the CPMAX parameter was set greater than one, you can suppress the ROLL BACK processing on a ROLL FORWARD-only step by supplying the ROLL BACK TO checkpoint ID information that was previously rolled back to. Issue a RESTART command, as shown in the following syntax:

RESTART ROLL BACK TO yy.ddd hh:mm:ss. ROLL FORWARD

ROLL FORWARD-only processing uses this information to locate the correct ROLL FORWARD starting point in the CCARF data set.

If you do not provide the checkpoint ID information, then the ROLL BACK processing is automatically triggered to locate the correct starting point for ROLL FORWARD processing.


- Also, if running a ROLL BACK-only process, the CCARF file definition is no longer required.

ROLL FORWARD processing, in any context, requires the RCVOPT X'01' bit. CPMAX should be set to a value that meets your site's need. Your current RESTART configuration should reflect whether you take the CPMAX default value or set some other value.
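When scripting a ROLL FORWARD-only step, the checkpoint ID can be validated before it is placed in the RESTART command. This is a sketch: the function name is hypothetical, and the pattern assumes the yy.ddd hh:mm:ss.th form shown in the CHECKPOINT COMPLETED message. The TO clause must match the checkpoint ID from the run exactly, so the ID is passed through verbatim and never reformatted.

```python
import re

# Assumed checkpoint ID form: yy.ddd hh:mm:ss.th
CHECKPOINT_ID_RE = re.compile(r"\d{2}\.\d{3} \d{2}:\d{2}:\d{2}\.\d{2}")


def roll_forward_only_command(checkpoint_id: str) -> str:
    """Build a RESTART command naming the checkpoint that was already rolled back to."""
    checkpoint_id = checkpoint_id.strip()
    if not CHECKPOINT_ID_RE.fullmatch(checkpoint_id):
        raise ValueError(f"not a yy.ddd hh:mm:ss.th checkpoint ID: {checkpoint_id!r}")
    return f"RESTART ROLL BACK TO {checkpoint_id} ROLL FORWARD"
```

Rejecting a malformed ID early matters because, without usable checkpoint ID information, full ROLL BACK processing is triggered again.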

Finding checkpoint in journal

Locating the checkpoint record rolled back to in ROLL BACK processing is done using the NOTE/POINT facility provided for BSAM data sets. The NOTE/POINT facility allows direct access to a single record in a file of any size, which eliminates what could otherwise be a long delay between the end of ROLL BACK processing and the beginning of ROLL FORWARD processing. The NOTE/POINT facility handles all forms of CCARF data sets, including ring streams, parallel streams, and single sequential data sets.

Note special requirement for z/VSE: IBM restricts the NOTE/POINT facility for sequential data files under z/VSE.

RESTART recovery requirements - CCATEMP, NFILES, NDIR

Checkpoint information that is logged can include data for RESTART recovery that must be relocated to the same CCATEMP pages, using the same internal file numbers, that were used during the Online run. Therefore, in a RESTART job or step, the CCATEMP size, NDIR, and NFILES must be equal to or greater than their values after initialization of the job being recovered.

The RESTART value for:

- NFILES may need to be as much as two greater than the value set in the Online CCAIN, if CCAGRP and CCASYS were opened by the Online.

- NDIR may need to increase by as much as three to accommodate CCATEMP, CCAGRP, and CCASYS, if they were opened by the Online.

- CCATEMP in RESTART must be at least as large as that in the job that is being recovered. Verify that the CCATEMP data set allocation or TEMPPAGE in RESTART meets this requirement.

If any of these is not set properly for RESTART, initialization terminates with the following error message, which specifies the required value. Reset the parameter to the value indicated in the message and resubmit the RESTART job.

M204.0144 parameter=value BUT MUST BE AT LEAST value (reason)
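The sizing rules above can be expressed as simple arithmetic. The following Python sketch is a hypothetical pre-check, not a Model 204 facility. It takes the Online values, adds one entry for each of CCAGRP and CCASYS (NFILES) or CCATEMP, CCAGRP, and CCASYS (NDIR) that the Online opened, and reports shortfalls in the shape of M204.0144. The text says "as much as," so these are conservative upper estimates. The value reported by M204.0144 itself is the authoritative one. The dictionary layout, the CCATEMP page count, and the omission of the message's (reason) text are all assumptions.

```python
# Hypothetical model; names and dict layout are illustrative only.
NFILES_EXTRA = {"CCAGRP", "CCASYS"}            # up to +2 for NFILES
NDIR_EXTRA = {"CCATEMP", "CCAGRP", "CCASYS"}   # up to +3 for NDIR


def conservative_minimums(online, opened_by_online):
    """Conservative RESTART values derived from the Online settings."""
    return {
        "NFILES": online["NFILES"] + len(NFILES_EXTRA & opened_by_online),
        "NDIR": online["NDIR"] + len(NDIR_EXTRA & opened_by_online),
        "CCATEMP": online["CCATEMP"],  # pages; at least as large as in the Online
    }


def check_restart(restart, minimums):
    """Return M204.0144-style text for each parameter that is too small."""
    messages = []
    for name, minimum in minimums.items():
        if restart[name] < minimum:
            # The real message also carries a (reason); it is omitted here.
            messages.append(f"M204.0144 {name}={restart[name]} BUT MUST BE AT LEAST {minimum}")
    return messages


online = {"NFILES": 20, "NDIR": 20, "CCATEMP": 5000}
needed = conservative_minimums(online, {"CCATEMP", "CCAGRP", "CCASYS"})
print(needed)  # {'NFILES': 22, 'NDIR': 23, 'CCATEMP': 5000}
print(check_restart({"NFILES": 22, "NDIR": 21, "CCATEMP": 5000}, needed))
```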

Recovering deferred update mode files

Under the z/OS and z/VM operating systems, files that were in deferred update mode at the time of a crash can be recovered using the ROLL BACK facility, either alone or together with the ROLL FORWARD facility. Checkpoint markers are written to every open deferred update data set.

How recovery works for deferred update files

For each file being recovered, ROLL BACK processing opens the file's deferred update data set(s) for input. Each deferred update data set is scanned for a marker that corresponds to the last checkpoint. The data set is then changed from input to output, so that changes made during ROLL FORWARD processing are written over changes that were backed out. At the end of recovery, files in deferred update mode are not closed. Changes made during the new run are added to the old deferred update data set.
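The scan-then-switch sequence above can be pictured with a short Python sketch. A deferred update data set is modeled as a list of hypothetical `("CHECKPOINT", id)` and `("UPDATE", payload)` tuples, which is not the real record format. The data set is cut back just after the matching marker, which mirrors the switch from input to output: later writes replace the backed-out records, and the new run keeps appending.

```python
# Hypothetical record layout: ("CHECKPOINT", id) markers and ("UPDATE", payload) records.
def position_after_marker(records, last_checkpoint_id):
    """Scan a deferred update data set for the marker of the last checkpoint.

    Returns the records that survive (everything up to and including the
    marker), or None if the marker is missing, in which case the file is
    not recovered.
    """
    marker = ("CHECKPOINT", last_checkpoint_id)
    for index, record in enumerate(records):
        if record == marker:
            # Switching from input to output here means anything written next
            # replaces the records after the marker (the backed-out changes).
            return records[: index + 1]
    return None


dataset = [("UPDATE", "a"), ("CHECKPOINT", 1), ("UPDATE", "b"),
           ("CHECKPOINT", 2), ("UPDATE", "c")]
kept = position_after_marker(dataset, 2)
kept.append(("UPDATE", "written during ROLL FORWARD"))
kept.append(("UPDATE", "written during the new run"))  # data set was left open
print(kept)
print(position_after_marker(dataset, 3))  # None: marker missing, file not recovered
```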

If ROLL BACK processing cannot open the file's deferred update data set, or if the deferred data set or data sets do not contain the correct checkpoint marker, the file is not recovered.

Deferred update recovery not supported under z/VSE

Support for recovery of files in deferred update mode is not implemented under the z/VSE operating system.

Recovering dynamically allocated data sets

The RESTART command, by default, dynamically reallocates and recovers any Model 204 files or deferred update data sets that were dynamically allocated in the run that is being recovered.

How recovery works for dynamically allocated data sets

Dynamically allocated data sets normally are closed and freed after recovery processing, so you must reallocate them if you need them after recovery completes. However, if a dynamically allocated file was opened in deferred update mode in the run being recovered, the file remains allocated and open in deferred update mode after recovery completes. Any deferred update data sets that were dynamically allocated also remain allocated and open. This ensures that further deferred updates made after recovery do not overwrite previous records in the deferred update data set.

Bypassing dynamic file allocation

If RESTART recovery processing determines that no files need to be recovered, dynamic allocation is bypassed, which saves CPU and wall clock time.
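The allocation behavior described in the last two subsections can be summarized as a small decision function. The following is a simplified Python sketch. It assumes that every data set dynamically allocated in the recovered run is reallocated once at least one file needs recovery. The data set names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicDataSet:
    """A data set that was dynamically allocated in the run being recovered."""
    name: str
    deferred_update: bool  # deferred update mode file, or one of its deferred update data sets


def restart_allocation_plan(datasets, files_needing_recovery):
    """Map each dynamically allocated data set to what RESTART does with it."""
    if not files_needing_recovery:
        # Nothing to recover, so dynamic allocation is bypassed altogether.
        return {}
    plan = {}
    for ds in datasets:
        if ds.deferred_update:
            # Stays allocated and open so later deferred updates are appended
            # instead of overwriting earlier records.
            plan[ds.name] = "reallocate, recover, leave allocated and open"
        else:
            # Closed and freed; reallocate it yourself if you need it afterward.
            plan[ds.name] = "reallocate, recover, close and free"
    return plan


sets = [DynamicDataSet("PAYROLL", False), DynamicDataSet("ORDERS", True),
        DynamicDataSet("ORDDEFER", True)]
print(restart_allocation_plan(sets, {"PAYROLL"}))
print(restart_allocation_plan(sets, set()))  # {}
```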

Media recovery

You can perform media recovery using the REGENERATE or REGENERATE ONEPASS command in a Batch204 job or a single-user Online.

Media recovery procedures are useful for restoring a file when:

- A hardware error occurs on the storage media

- A data set is accidentally deleted

- ROLL FORWARD processing fails for an individual file

Media recovery can restore the file automatically from a dump of the file, or you can restore the file by any technique you choose before running media recovery.

Media recovery is initiated by the REGENERATE or REGENERATE ONEPASS command in the CCAIN input stream. Processing reapplies the updates made to one or more files since those files were backed up.

File regeneration cannot occur across discontinuities or across Model 204 release boundaries.

Phases of a media recovery run

A media recovery run consists of the following phases:

- Phase 1 — The REGENERATE or REGENERATE ONEPASS command is parsed for syntax errors. If an error is encountered, the command is rejected and no processing is performed.

- Phase 2 — All files for which dump files are specified in the REGENERATE or REGENERATE ONEPASS command are restored. This step is performed only if media recovery is performing the restore.

- Phase 3 — The input journal file is processed in one or two passes.

Input journal processing for the REGENERATE command

The last phase of media recovery is performed in two passes as follows:

- Pass 1 — The input journal is scanned for the starting and stopping point of all participating files:

  - If any errors are detected, processing terminates for the files affected by the error.

  - If any files remain to be processed after Pass 1 is completed, the input journal is closed and reopened.

  - If the BEFORE clause of the REGENERATE command is used, the last complete update or checkpoint taken before the specified time is determined.

- Pass 2 — The input journal is reread and updates that were originally made between the starting and stopping points are reapplied to the files.

Input journal processing for the REGENERATE ONEPASS command

With REGENERATE ONEPASS, updates are applied as the journal records are read:

- The input journal is scanned for the starting and stopping point of all participating files. If any errors are detected, processing terminates for the files affected by the error.

- The input journal is read and updates that were originally made between the starting and stopping points are reapplied to the files.

If ONEPASS is specified for a REGENERATE command and the file being regenerated was recovered during the period covered by the supplied journals, the regeneration fails with the following error:

M204.2629: ONEPASS DISALLOWED ACROSS FILE RECOVERY

The file is deactivated and contains all updates as of the time of the recovery. If regeneration is desired across a recovery, two passes of the journal are required.

REGENERATE processing has no back out capability. With ONEPASS, each update is applied as it is read from the journal, which causes a problem if the file was rolled back: updates that the recovery backed out have already been reapplied. With two passes, REGENERATE processing first reads the entire journal and sets the starting and stopping points for the second pass. The unneeded updates can then be skipped as the second pass reads past them.
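The difference between the two modes can be seen in a simplified Python model. The journal model is hypothetical: a `Recovery` entry stands for a file recovery that rolled the file back to an earlier checkpoint, discarding the updates made after it. The real journal format is quite different. Pass 1 of the two-pass version works out which updates survive, and pass 2 rereads the journal and applies only those. The one-pass version has already applied the discarded updates when it meets the recovery, so it can only fail, much as M204.2629 does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    seq: int
    value: str


@dataclass(frozen=True)
class Checkpoint:
    cp_id: int  # assumed to increase through the journal


@dataclass(frozen=True)
class Recovery:
    back_to: int  # checkpoint the file was rolled back to


class OnePassDisallowed(Exception):
    """Stands in for M204.2629: ONEPASS DISALLOWED ACROSS FILE RECOVERY."""


def regenerate_two_pass(journal):
    # Pass 1: read the whole journal and work out which updates survive.
    survivors, current_cp = [], 0
    for entry in journal:
        if isinstance(entry, Checkpoint):
            current_cp = entry.cp_id
        elif isinstance(entry, Update):
            survivors.append((entry.seq, current_cp))  # tag with preceding checkpoint
        elif isinstance(entry, Recovery):
            # Updates made after checkpoint back_to were backed out by the recovery.
            survivors = [(s, cp) for s, cp in survivors if cp < entry.back_to]
    keep = {s for s, _ in survivors}
    # Pass 2: reread the journal, applying survivors and reading past the rest.
    return [e.value for e in journal if isinstance(e, Update) and e.seq in keep]


def regenerate_one_pass(journal):
    applied = []
    for entry in journal:
        if isinstance(entry, Update):
            applied.append(entry.value)  # applied immediately; cannot be backed out
        elif isinstance(entry, Recovery):
            raise OnePassDisallowed(f"already applied {applied}")
    return applied


journal = [Update(1, "a"), Checkpoint(1), Update(2, "b"), Update(3, "c"),
           Recovery(back_to=1), Update(4, "d")]
print(regenerate_two_pass(journal))  # ['a', 'd']
try:
    regenerate_one_pass(journal)
except OnePassDisallowed as err:
    print("ONEPASS failed:", err)
```

Tagging each update with the checkpoint that precedes it is what lets pass 1 discard exactly the updates a rollback removed. The one-pass model has no such look-ahead.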

Running REGENERATE with the IGNORE argument

Using the IGNORE argument with a REGENERATE command bypasses the file parameter list (FPL) update timestamps. This lets you run REGENERATE processing against one CCAGEN data set at a time, in multiple REGENERATE steps if necessary, instead of requiring a single concatenated journal.

For example, the first run might provide the first journal and specify:

REGENERATE FILE abc FROM dumpabc

A subsequent run may provide the second journal and specify:

REGENERATE FILE abc IGNORE

The second run picks up from where the first REGENERATE processing ended and applies the second journal updates.

Previously, you had to concatenate all journals into one data set or specify them in a concatenated CCAGEN DD statement.

Attention: If you omit a journal, the omission is not reported. Therefore, use the IGNORE option with care.

The IGNORE keyword is not valid with the FROM option.

Number of files that can be regenerated

The number of files that can be regenerated in a single run depends on the settings of the following parameters on User 0's parameter line:

- NDCBS, the number of data sets associated with the files being regenerated.

- NDIR, the number of file directory entries, must be at least as large as the number of files to be regenerated in a single media recovery run.

- NFILES, the number of file save areas to allocate, must be at least as large as the number of files to be regenerated in a single media recovery run.

Higher values might be needed if the run performs functions in addition to the REGENERATE operation.
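The three rules above give lower bounds that are easy to compute from the list of files. The following Python sketch is a hypothetical helper, not part of Model 204. It takes a mapping from each file to its number of data sets, including any added by INCREASE DATASETS during the recovered interval, and returns minimum values only. As noted above, other functions in the same run can require more.

```python
def regenerate_parameter_floor(datasets_per_file):
    """Lower bounds for User 0's NDIR, NFILES, and NDCBS in a REGENERATE run.

    datasets_per_file maps each file to be regenerated to its number of data
    sets, including any added by INCREASE DATASETS in the recovered interval.
    """
    file_count = len(datasets_per_file)
    return {
        "NDIR": file_count,
        "NFILES": file_count,
        "NDCBS": sum(datasets_per_file.values()),
    }


print(regenerate_parameter_floor({"DB1": 1, "DB2": 1, "DB3": 2, "DB4": 1}))
# {'NDIR': 4, 'NFILES': 4, 'NDCBS': 5}
```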

Using the REGENERATE or the REGENERATE ONEPASS command

Choosing to use REGENERATE or REGENERATE ONEPASS

If you must regenerate across a recovery, two passes of the journal are required, so you must use the REGENERATE command. In this case, a REGENERATE ONEPASS command terminates with an error.

Specifying a starting point

The starting point for reapplying updates to a file is the first start of an update unit with a time greater than the last updated time of the file being processed.

- You can request a specific or nonspecific stopping point as an option of the REGENERATE command.

- You can request only a specific stopping point as an option of the REGENERATE ONEPASS command.
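The starting-point rule can be stated as "the earliest update-unit start that is later than the file's last updated time." The following Python sketch illustrates it. It assumes the update-unit start times have already been extracted from the journal, and the function name is hypothetical. Using `min` rather than the first match keeps the sketch correct even if the times are not in order.

```python
from datetime import datetime


def regeneration_start(update_unit_starts, file_last_updated):
    """Return the earliest update-unit start later than the file's last
    updated time, or None if the journal holds no such update unit."""
    later = [t for t in update_unit_starts if t > file_last_updated]
    return min(later, default=None)


starts = [datetime(2015, 3, 9, 8, 0), datetime(2015, 3, 9, 9, 30),
          datetime(2015, 3, 9, 11, 15)]
print(regeneration_start(starts, datetime(2015, 3, 9, 9, 0)))  # 2015-03-09 09:30:00
```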

Specifying a stopping point

To request a specific stopping point, use the TO clause of the REGENERATE or REGENERATE ONEPASS command. If you do not indicate a stopping point, all updates in the input journal are applied.

Not specifying a stopping point

To request a nonspecific stopping point, use the BEFORE clause of the REGENERATE command. The BEFORE clause uses the last complete update or checkpoint that occurred before the specified time as the stopping point for reapplying file updates. You cannot use a BEFORE clause with a REGENERATE ONEPASS command.
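The three stopping-point cases can be sketched in Python. The names, the `(time, kind)` event representation, and the plain-number times are hypothetical simplifications. TO is reduced to a single time, although the real clause has forms such as TO LAST CHECKPOINT and TO UPDATE n OF. The sketch's response when BEFORE finds no candidate is its own choice, because the text does not specify it.

```python
def stopping_point(events, clause=None, onepass=False):
    """Pick where reapplying updates stops.

    events: chronological (time, kind) pairs, where kind is "update_complete"
    (end of a complete update) or "checkpoint". clause: None, ("TO", time),
    or ("BEFORE", time). Returns None when every update should be applied.
    """
    if clause is None:
        return None  # no stopping point: all updates in the input journal are applied
    kind, when = clause
    if kind == "TO":
        return when  # specific stopping point, taken as given
    if kind == "BEFORE":
        if onepass:
            raise ValueError("BEFORE clause cannot be used with REGENERATE ONEPASS")
        candidates = [t for t, k in events
                      if k in ("update_complete", "checkpoint") and t < when]
        if not candidates:
            # This sketch's own choice; the text does not say what happens here.
            raise LookupError("no complete update or checkpoint before the BEFORE time")
        return max(candidates)
    raise ValueError(f"unknown clause: {kind!r}")


events = [(10, "update_complete"), (20, "checkpoint"), (30, "update_complete")]
print(stopping_point(events, ("BEFORE", 25)))  # 20
print(stopping_point(events, ("TO", 30)))      # 30
```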

Using a FROM clause

When a REGENERATE or REGENERATE ONEPASS command is issued without a FROM clause, the command assumes that the file was previously restored. The following validity checking occurs:

- Physical consistency — If a file is marked physically inconsistent, processing is discontinued.

- Release boundary — If a file was created prior to Model 204, Release 9.0, processing is discontinued.

The following error messages pertain to the REGENERATE command FROM option:

M204.1711: 'FROM' CLAUSE REQUIRED FOR FILES CREATED PRE R9

M204.1426: INVALID 'FROM' CLAUSE

M204.1708: REGENERATE DID NOT PERFORM RESTORE
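The two validity checks made when FROM is omitted can be sketched in Python, in the order listed above. The function and its boolean inputs are hypothetical. The physical-inconsistency case returns plain text because the message number for that case is not given here. Release 9.0 is passed as a flag rather than compared numerically, because later Model 204 version numbers are not ordered relative to "Release 9.0."

```python
from typing import Optional


def check_without_from(physically_inconsistent: bool,
                       created_before_release_9: bool) -> Optional[str]:
    """Return why a REGENERATE without FROM would be discontinued, or None."""
    if physically_inconsistent:
        return "file is marked physically inconsistent"
    if created_before_release_9:
        # Files created before Release 9.0 need a FROM clause.
        return "M204.1711: 'FROM' CLAUSE REQUIRED FOR FILES CREATED PRE R9"
    return None


print(check_without_from(False, True))
print(check_without_from(False, False))  # None: processing continues
```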

Required data sets for media recovery

The STEPLIB, CCAIN, and CCAPRINT data sets are required for media recovery.

DB1 through DBn, where n is a number corresponding to the last file to be recovered, define the file data sets to be recovered.

If an INCREASE DATASETS command was issued during the time interval being recovered, the data sets it added to the file must also be specified.

DUMPDB1 through DUMPDBn, where n is a number corresponding to the last file to be recovered, define the dumped versions of the file data sets to be recovered.

The name specified for the file to be regenerated must match the name of the dump file.

If the run completes successfully, Model 204 displays the message:

*** M204.1437: REGENERATE IS NOW COMPLETE

Possible error and database inconsistency conditions are described in Media recovery.

Example: z/OS media recovery run

This example shows the z/OS JCL required to invoke a media recovery run:

//REGEN    JOB REGEN,MSGLEVEL=(1,1)
//M204     EXEC PGM=BATCH204,PARM='SYSOPT=136'
//STEPLIB  DD DSN=LOCAL.M204.LOADLIB,DISP=SHR
//CCAJRNL  DD DSN=REGEN.CCAJRNL,DISP=(NEW,CATLG,CATLG),
//            VOL=SER=WORK,UNIT=DISK,SPACE=(TRK,(5))
//CCAGEN   DD DSN=LOCAL.M204.JOURNAL.840228,DISP=SHR
//         DD DSN=LOCAL.M204.JOURNAL.840229,DISP=SHR
//         DD DSN=LOCAL.M204.MERGED.JOURNAL.840302,DISP=SHR
//CCAAUDIT DD SYSOUT=A
//CCAPRINT DD SYSOUT=A
//CCASNAP  DD SYSOUT=A
//CCATEMP  DD DISP=NEW,UNIT=WORK,SPACE
//CCASTAT  DD DSN=LOCAL.M204.CCASTAT,DISP=SHR
//CCAGRP   DD DSN=LOCAL.M204.CCAGRP,DISP=SHR
//TAPE2    DD DSN=M204.DB1.DEFERF,DISP=SHR
//TAPE3    DD DSN=M204.DB1.DEFERV,DISP=SHR
//DB1      DD DSN=LOCAL.M204.DB1,DISP=SHR
//DB2      DD DSN=LOCAL.M204.DB2,DISP=SHR
//DB3      DD DSN=LOCAL.M204.DB3,DISP=SHR
//DB4      DD DSN=LOCAL.M204.DB4,DISP=SHR
//DUMPDB1  DD DSN=DUMP.DB1,DISP=SHR
//DUMPDB2  DD DSN=DUMP.DB2,DISP=SHR
//DUMPDB3  DD DSN=DUMP.DB3,DISP=SHR
//CCAIN    DD *
NFILES=10,NDIR=10,LAUDIT=1,SPCORE=10000
*
* OPEN THE FILE IN DEFERRED UPDATE MODE
* BEFORE THE REGENERATE COMMAND
*
OPEN DB1,TAPE2,TAPE3
REGENERATE
FILE DB1 FROM DUMPDB1
FILE DB2 FROM DUMPDB2 TO LAST CHECKPOINT
FILE DB3 FROM DUMPDB3 TO UPDATE 3 OF 83.216 06:28:42:98
FILE DB4 TO CHECKPOINT 83.216 01:05:22:99
END
EOJ

Media recovery NonStop/204

Media recovery restores an individual Model 204 file from a backed-up version of the file, rather than restoring the entire database as discussed in ROLL BACK facility.

You can use third-party software to back up Model 204 files. Third-party backups run independently of Model 204 while the Online remains running and responsive to non-updating activity, which makes it easier to run Model 204 in a nonstop 24x7 mode.

The following sections describe the functionality and facilities that help you develop, integrate, and manage your third-party backup methodology.

Managing third-party backups