Performance monitoring and tuning


Latest revision as of 19:23, 2 February 2022

Overview

This page discusses several methods of enhancing Model 204 system performance.

The last two sections of this topic discuss MP/204 (multiprocessing), a Rocket Software product available to z/OS users with multiple processor hardware configurations. Multiprocessing significantly increases throughput for most Model 204 applications.

Optimizing operating system resources

Because Model 204 runs as a multiuser, interactive task, take care at the operating system level (z/OS, z/VM, z/VSE) to ensure that Model 204 has adequate access to system resources.

The resources most critical to Model 204 performance are CPU and real memory or central storage. Because Model 204 supports many users from one virtual storage space, treat it as a high priority, system-level task. Address the following issues at your installation.

z/OS

- Run Model 204 as a nonswappable address space. This requires adding the following PPT (Program Properties Table) entry to the active SCHEDnn member of SYS1.PARMLIB:

PPT PGMNAME(ONLINE) /* Model 204 name */ NOSWAP /* nonswappable */

Note: Do not specify KEY(0). During initialization, Model 204 checks whether it is running in KEY(0). If the key is 0 and the region is running APF-authorized, the key is switched to KEY(8) (user key), and a message is issued to the audit trail. If the region is not running APF-authorized, a message is issued to the audit trail, and the run is terminated.

- Run Model 204 in a performance group with a high, fixed dispatching priority rather than mean-time-to-wait or rotate (supported only in z/OS releases prior to z/OS 2.2).

- Limit the paging rate of the Model 204 address space to no more than one to two pages per second through storage isolation or working-set-size protection. These controls are provided through the System Resource Manager (SRM) component of z/OS via the parameters PWSS and PPGRT.

z/VM

Issue the following commands for the Model 204 service machine:

For z/VM/ESA:

SET QUICKDSP service_machine_name ON

SET RESERVED service_machine_name nnn

where nnn = 70-80% of STORINIT.

z/VSE

Run Model 204 in a high priority partition.

Ensure that sufficient real memory is available to support the partition size defined and to guarantee a paging rate not to exceed one to two pages per second.

Timer PC

Timer PC is a Cross-Memory Services facility option that provides a problem-program interface to the job step CPU accounting information maintained by SMF. It is functionally equivalent to TTIMER/STIMER processing, but it consumes less CPU time. The saving is most visible in environments where the user switching rate is very high: for example, when a buffer pool is heavily loaded and every page request includes a wait.

Timer PC requires only the installation of M204XSVC, the Cross-Memory SVC. The Cross-Memory Services facility, which establishes the PC (program call) environment during Model 204 initialization, is used by Timer PC to avoid SVC interruptions.

To enable Timer PC, specify the XMEMOPT and XMEMSVC parameters on User 0's parameter line.

- To enable Timer PC, the XMEMOPT setting must include the X'01' bit.

- To disable Timer PC, the XMEMOPT setting must not include the X'01' bit.

Note: As of version 7.4 of Model 204, Timer PC is automatically disabled for systems that support the ECTG (Extract CPU Time Grande) instruction, which is more efficient than the Timer PC call. In this case, Model 204:

- Resets the XMEMOPT parameter to exclude the X'01' bit.

- If the XMEMOPT X'02' bit (IOS Branch Entry) is off, turns on the X'04' bit to ensure that M204SVC is used for initialization.
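XMEMOPT is a bit-mask parameter, so the version 7.4 adjustment described above is plain bit manipulation. The sketch below models it. The bit meanings (X'01' Timer PC, X'02' IOS Branch Entry, X'04' SVC used for initialization) come from this page; the function name and the `supports_ectg` flag are hypothetical, and this is an illustration, not Model 204's own code.

```python
TIMER_PC = 0x01          # X'01': Timer PC
IOS_BRANCH_ENTRY = 0x02  # X'02': IOS Branch Entry
USE_SVC_FOR_INIT = 0x04  # X'04': SVC used for initialization


def adjust_xmemopt(xmemopt: int, supports_ectg: bool) -> int:
    """Hypothetical model of the version 7.4+ XMEMOPT adjustment on ECTG-capable systems."""
    if not supports_ectg:
        return xmemopt                  # no change when ECTG is unavailable
    xmemopt &= ~TIMER_PC                # exclude the X'01' bit: Timer PC is disabled
    if not xmemopt & IOS_BRANCH_ENTRY:
        xmemopt |= USE_SVC_FOR_INIT     # X'02' is off, so force on the X'04' bit
    return xmemopt


print(hex(adjust_xmemopt(0x01, supports_ectg=True)))  # 0x4
print(hex(adjust_xmemopt(0x03, supports_ectg=True)))  # 0x2
```

When IOS Branch Entry is already on, only the Timer PC bit changes.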

EXCPVR

Under z/OS systems that are not XA or ESA, the EXCPVR feature provides CPU savings when performing disk and server I/O. EXCPVR is not supported for z/OS in 64-bit mode.

Using EXCPVR, Model 204 translates its own CCW data addresses from virtual to real.

Under z/OS, disable EXCPVR (EXCPVR=0) in favor of IOS Branch Entry. EXCPVR and IOS Branch Entry are mutually exclusive.

Under z/OS, ECKD channel support (discussed in ECKD channel program support) is not available for use with EXCPVR. Do not use 9345 DASD with EXCPVR because of the potential for poor performance on writes.

The following considerations apply:

- To enable EXCPVR:

- Install the Model 204 page fix/start I/O appendage in SYS1.SVCLIB (see the appropriate Rocket Model 204 installation page).

- Authorize the version of Model 204 that uses EXCPVR under the Authorized Program Facility (APF).

Authorization procedures differ among VS systems (see the appropriate Rocket Model 204 installation page for systems that support EXCPVR).

- To use EXCPVR:

Set the EXCPVR parameter to the last two characters of the name of the PGFX/SIO appendage installed in SYS1.SVCLIB.

The suggested value of the EXCPVR parameter is C 'W2', but you can use other characters between C 'WA' and C 'Z9'. The default is binary zero (EXCPVR not used). You can set EXCPVR in the EXEC statement or on User 0's parameter line.
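The C 'WA' to C 'Z9' range makes most sense in EBCDIC collating order, where letters sort before digits, so C 'W2' falls inside the range even though it would not in ASCII. The sketch below assumes that ordering and assumes that only uppercase letters and digits are allowed. The helper name is hypothetical, and Python's `cp037` codec stands in for the mainframe EBCDIC code page.

```python
import string

ALLOWED = set(string.ascii_uppercase + string.digits)
LOW, HIGH = "WA".encode("cp037"), "Z9".encode("cp037")


def valid_excpvr_suffix(suffix: str) -> bool:
    """Check a two-character EXCPVR value against C'WA'..C'Z9' in EBCDIC order."""
    if len(suffix) != 2 or not set(suffix) <= ALLOWED:
        return False
    return LOW <= suffix.encode("cp037") <= HIGH


print(valid_excpvr_suffix("W2"))  # True: X'E6F2' lies between X'E6C1' and X'E9F9'
print("W2" < "WA")                # True in ASCII order, which is why EBCDIC matters here
```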

IOS Branch Entry

IOS Branch Entry is a Cross-Memory Services facility option that provides high-performance I/O for Model 204 running under z/OS.

IOS Branch Entry provides:

- Fast path to the I/O Supervisor (IOS)

- Reduced instruction path length for each I/O operation by translating a pre-formatted channel program

- Page fixing for required storage areas

- Direct branching to IOS to initiate I/O activity

- Placement of all database I/O control blocks, hash cells, page fix lists, and buffers above the 16-megabyte line, significantly reducing the risk of control block overflow into CSA

- Placement of NUMBUFG buffer pool buffers above the bar (ATB).

IOS Branch Entry replaces the EXCP driver and overrides the EXCPVR option if both EXCPVR and IOS Branch Entry are requested.

Activating IOS Branch Entry

To enable IOS Branch Entry, perform the following steps:

- Ensure that M204XSVC, the module that provides Model 204 Cross-Memory Services, is linked into the Model 204 nucleus. This is the default as of Model 204 7.5. Otherwise, install M204XSVC as an SVC.

- Authorize Model 204 under APF. This is the default as of Model 204 7.5.

- Specify the XMEMOPT parameter (include the X'02' bit) on the User 0 input line. If M204XSVC is installed as an SVC, also specify XMEMSVC on the User 0 input line.

When IOS Branch Entry is enabled, control blocks, hash cells, and page fix lists are allocated above the 16-megabyte line, and NUMBUFG buffer pool buffers are built above the bar.

Saving storage when using IOS Branch Entry

During initialization, Model 204 calculates a default number of Disk Buffer I/O control blocks (DBIDs). If you are using IOS Branch Entry, this default number may be unnecessarily high. Use the MAXSIMIO parameter to allocate a smaller number of DBIDs.

Model 204 uses the following formula to calculate, as a default, the maximum number of DBIDs that will ever be needed:

32 * (NSUBTKS + NSERVS)

Using a lower MAXSIMIO value when you are also using IOS Branch Entry limits the number of DBIDs allocated and results in storage savings. If you are not using IOS Branch Entry, an explicit MAXSIMIO setting is ignored and the value calculated by Model 204 is used instead.

Model 204 version 7.6 consumes a little more storage than previous versions when IOS Branch Entry is used. The maximum amount of CSA storage that can be allocated for Model 204 version 7.6 is limited to MAXSIMIO*296 bytes. If you do not use MAXSIMIO, calculate the maximum CSA storage using the number of servers plus the number of PSTs.
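The arithmetic in this subsection can be collected into a short sketch. The formulas 32 * (NSUBTKS + NSERVS) and MAXSIMIO*296 come from the text above. Two things are assumptions: that an explicit MAXSIMIO value is used as given, and that the default DBID count stands in for MAXSIMIO when MAXSIMIO is not set. The function names are hypothetical.

```python
from typing import Optional

BYTES_PER_DBID_CSA = 296  # version 7.6 CSA bound: MAXSIMIO * 296 bytes


def dbid_count(nsubtks: int, nservs: int, maxsimio: Optional[int] = None,
               ios_branch_entry: bool = True) -> int:
    """DBIDs allocated; an explicit MAXSIMIO only applies with IOS Branch Entry."""
    default = 32 * (nsubtks + nservs)
    if ios_branch_entry and maxsimio is not None:
        return maxsimio
    return default  # without IOS Branch Entry, MAXSIMIO is ignored


def max_csa_bytes(nsubtks: int, nservs: int, maxsimio: Optional[int] = None) -> int:
    """Upper bound on CSA used for DBIDs under IOS Branch Entry (version 7.6)."""
    return dbid_count(nsubtks, nservs, maxsimio) * BYTES_PER_DBID_CSA


print(dbid_count(10, 40))          # 1600
print(max_csa_bytes(10, 40))       # 473600
print(max_csa_bytes(10, 40, 400))  # 118400
```

With 10 PSTs and 40 servers, lowering MAXSIMIO to 400 cuts the bound by a factor of four.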

High Performance FICON (zHPF)

The IBM High Performance FICON (zHPF) interface allows faster I/O by limiting the number of interactions between the channel and the device, which can especially improve I/O performance for geographically remote disks. Model 204 versions 7.6 and later support zHPF on z/OS.

Hardware and software requirements for zHPF

- CPU – z10 and higher

- z/OS – 2.01

- Disk subsystem – contact your IT department to verify that the disk subsystem has the zHPF feature available and activated.

- zHPF is not supported on z/CMS or z/VSE.

- Model 204 version 7.6 or later is required in order to use zHPF.

Using zHPF

If zHPF is supported on your IBM z/OS system, you can activate it on the system, enable zHPF usage in Model 204, and display zHPF capabilities for each data set in a file.

- To enable zHPF usage in Model 204, set the SYSOPT2 parameter to X'10'.

- To display zHPF capabilities, use the ZHPF command to check zHPF availability for data sets in the file.

- To dynamically turn the z/OS zHPF feature on or off, use the z/OS command:

SETIOS ZHPF=YES|NO

Note: If zHPF was in use, close Model 204 files before turning off zHPF.

EXCP and IOS Branch Entry support

EXCP and IOS Branch Entry drivers are supported with zHPF, but EXCPVR is not supported.

Model 204 versions 7.6 and later implement transport mode I/O on a file basis, so database files residing on disks with and without zHPF capability can be processed at the same time.

Versions 7.6 and later also consume a little more common storage (CSA) than previous versions when IOS Branch Entry is used.

Disk buffer monitor statistics and parameters

DKBM efficiency

The efficiency of the buffering monitor in keeping needed disk pages in memory is measured by the DKPR, DKRD, and DKRR statistics.

DKRD and DKPR statistics

DKRD maintains a count of the number of real page reads that occur from Model 204 files. DKPR measures the number of times a user requests a page to be opened.

A real read, which increments DKRD, is required only if the page requested is not already contained in a buffer. Pages cannot be kept open across any function that causes a real-time break, such as terminal I/O.

If the value of DKRD is close to the value of DKPR and many pages are bumped out of buffers during user waits, increase the number of buffers in the run.
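One way to read DKPR and DKRD together is as a buffer hit ratio: the fraction of page requests satisfied without a real read. The sketch below computes it. The ratio is an illustrative derived figure, not a Model 204 statistic, and the sample counts are made up.

```python
def buffer_hit_ratio(dkpr: int, dkrd: int) -> float:
    """Fraction of page requests (DKPR) that did not need a real read (DKRD)."""
    if dkpr == 0:
        return 1.0  # no page requests yet: treat as no misses
    return 1.0 - dkrd / dkpr


ratio = buffer_hit_ratio(dkpr=200_000, dkrd=190_000)
print(f"{ratio:.2f}")  # 0.05: DKRD is close to DKPR, so most requests needed real I/O
```

A ratio near zero corresponds to the "DKRD close to DKPR" case above.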

DKRR statistic

DKRR is a more accurate measure of the efficiency of the buffering monitor in retaining previously used disk pages in memory.

DKRR counts the number of recently referenced pages that must be reread from disk. A list of each user's recently used pages is kept. If a new page reference requires a real read and the page is already in the table, DKRR is incremented.

New pages are added at the end of the table. If a page already appears in the table, the older entry is deleted and the table is maintained in page-reference order. If a new entry does not fit in the table, the oldest entry is deleted. A new reference to the deleted page does not increment DKRR.

DKRR is controlled by:

| Parameter | Meaning | Usage |
|---|---|---|
| LRUPG | Number of page IDs retained for each user for matching. | You must set LRUPG in the parameter statement for the operating system, with a value between 0 and 255. The value 0 indicates that no DKRR statistics are kept. |
| LRUTIM | Controls the aging of the IDs in the table and is set on User 0's parameter line. | If a user does not make a disk reference within LRUTIM milliseconds of the previous disk reference, all the disk page IDs previously remembered are considered obsolete and deleted. |
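The per-user table behind DKRR behaves like a small LRU list. The sketch below models the rules described above: LRUPG entries per user, newest at the end, duplicates moved to the end, oldest dropped when full, and the whole list cleared after an LRUTIM gap. The class and the `real_read` flag are hypothetical; this is a model of the described behavior, not Model 204's implementation.

```python
from collections import OrderedDict


class RecentPageTable:
    """Hypothetical model of one user's recently referenced page list for DKRR."""

    def __init__(self, lrupg: int, lrutim_ms: int):
        self.lrupg = lrupg            # page IDs retained per user (0-255)
        self.lrutim_ms = lrutim_ms    # aging interval in milliseconds
        self.pages = OrderedDict()    # oldest entry first, newest last
        self.last_ref_ms = None
        self.dkrr = 0

    def reference(self, page_id: int, now_ms: int, real_read: bool) -> None:
        if self.lrupg == 0:
            return                    # LRUPG=0: no DKRR statistics are kept
        # LRUTIM aging: after a long gap, every remembered page ID is obsolete.
        if self.last_ref_ms is not None and now_ms - self.last_ref_ms > self.lrutim_ms:
            self.pages.clear()
        self.last_ref_ms = now_ms
        if page_id in self.pages:
            if real_read:
                self.dkrr += 1        # a recently used page had to be reread
            del self.pages[page_id]   # delete the older entry
        elif len(self.pages) >= self.lrupg:
            self.pages.popitem(last=False)  # table full: delete the oldest entry
        self.pages[page_id] = None    # the new reference goes at the end


t = RecentPageTable(lrupg=2, lrutim_ms=1000)
for page, ms in [(1, 0), (2, 10), (1, 20), (3, 30), (2, 40)]:
    t.reference(page, ms, real_read=True)
print(t.dkrr)  # 1
```

In the usage run, the second reference to page 1 counts, but the later reference to page 2 does not, because page 2 had already been pushed out of the two-entry table.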

Adequacy of existing buffers

Adequacy of existing buffers is monitored by the FBWT, DKSFBS, DKSAWx, DKSDIR, DKSDIRT, DKSKIP, DKSRR, DKSTBLx, DKSWRP, and DKSWRPT statistics:

- DKSFBS records how many times a scan of the queue of free buffers is required when the oldest free buffer is not immediately available. The oldest buffer can be either in the process of being written out, or waiting to be written because its contents have been modified.

- DKSRR measures the number of times an expected buffer page requires I/O to be read.

Buffers currently open are not in the reuse queue. To avoid the need for real I/O, requests for pages found in LRU (reuse) queue buffers cause the buffers to be removed from the queue. The oldest buffer that has not been updated (and does not need to be written) is the first to be reused.

Model 204 remembers modified buffers that were skipped over in a buffer scan. In anticipation of new buffer requests, some of the remembered buffers might be written out so that the buffers can be reused:

- DKSKIP is the highwater mark of the number of buffers skipped while searching for one that could be reused immediately.

- DKSKIPT is the total number of skipped buffers for the run.

Anticipatory writes

An anticipatory write is the write of a closed, modified page from the buffer pool back to CCATEMP or to the file it came from in anticipation of the need for a free buffer. Closed pages in the buffer pool are arranged in the LRU (least recently used) queue from least recently referenced (bottom of queue) to most recently referenced (top of queue). When a page is closed, meaning no user is referencing it, the buffer containing that page is usually placed at the top of the LRU queue. Over time, buffers, and the pages they contain, migrate to the bottom of the LRU queue.

Unmodified pages will not be written since no changes have occurred. However, the buffer occupied by that page is immediately available for reuse when the buffer reaches the bottom of the LRU queue. The same is true for modified pages except that they must first be written before the occupied buffer can be reused. Pages that are on the LRU queue and are subsequently referenced are removed from the LRU queue and are not available for reuse until again closed by all users.

Understanding the anticipatory write window

The anticipatory write window is a collection of buffers at the bottom of the LRU queue. For buffers allocated below the bar, the window starts at LDKBMWND buffers from the bottom of the queue. For buffers allocated above the bar, the window starts at LDKBMWNG buffers from the bottom of the queue.

If you use MP/204, you may have multiple LRU queues. The number of queues is set with the NLRUQ parameter.

When a modified page migrates into an anticipatory write window, the write for the page is started. The larger the value of LDKBMWND or LDKBMWNG, the sooner that anticipatory write starts.

The purpose of an anticipatory write window is to provide a mechanism that guarantees that the buffers at the bottom of the reuse queue are immediately available for reuse. Buffers containing unmodified pages are immediately available. Buffers containing modified pages, however, are not immediately available unless the write for the page has completed.
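The window mechanism can be illustrated with a toy reuse queue. In the sketch below, closed buffers go on top, reused or re-referenced buffers leave the queue, and whenever buffers shift, any modified page within `window` positions of the bottom has its write started. The classes are hypothetical, a single queue is assumed (no NLRUQ), and write completion and FBWT waits are not modeled.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Buffer:
    page_id: int
    modified: bool = False
    write_started: bool = False


class LruQueue:
    """Toy reuse queue: index 0 is the bottom (least recently referenced)."""

    def __init__(self, window: int):
        self.window = window          # stands in for LDKBMWND or LDKBMWNG
        self.queue: deque[Buffer] = deque()
        self.anticipatory_writes = 0  # loosely analogous to DKSAWW

    def close(self, buf: Buffer) -> None:
        """A page closed by all users goes on top of the queue."""
        self.queue.append(buf)
        self._start_writes_in_window()

    def reopen(self, page_id: int) -> Buffer | None:
        """A new reference removes the buffer from the queue."""
        for buf in list(self.queue):
            if buf.page_id == page_id:
                self.queue.remove(buf)
                self._start_writes_in_window()
                return buf
        return None

    def reuse_bottom(self) -> Buffer:
        """Remove the bottom buffer (write completion is not modeled)."""
        buf = self.queue.popleft()
        self._start_writes_in_window()
        return buf

    def _start_writes_in_window(self) -> None:
        # Start a write for each modified page in the bottom `window` buffers.
        for i, buf in enumerate(self.queue):
            if i >= self.window:
                break
            if buf.modified and not buf.write_started:
                buf.write_started = True
                self.anticipatory_writes += 1


q = LruQueue(window=2)
for pid, mod in [(1, False), (2, True), (3, True)]:
    q.close(Buffer(pid, modified=mod))
print(q.anticipatory_writes)  # 1: only page 2 is inside the window
q.reuse_bottom()
print(q.anticipatory_writes)  # 2: page 3 migrated into the window
```

A larger `window` starts writes for page 3 earlier, which matches the point that a larger LDKBMWND or LDKBMWNG starts anticipatory writes sooner.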

Monitoring statistics for LDKBMWND

Setting LDKBMWND or LDKBMWNG to a high value (for example, more than half of NUMBUF or NUMBUFG, respectively) may cause an undesirable increase in DKWR. Setting LDKBMWND or LDKBMWNG to a low value (for example, their defaults) in a heavy-update system may cause users to wait for buffers to become reusable and cause the FBWT statistic to increase.

Start with LDKBMWND or LDKBMWNG equal to .25 * NUMBUF or .25 * NUMBUFG, respectively, and monitor the following statistics:

- DKSAWW

- DKSKIP

- DKSKIPT

- DKWR

- DKWRL

- FBWT
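The starting point and the "more than half" caution above reduce to simple arithmetic. In the sketch below, rounding down to a whole number of buffers is an assumption, and the helper names are hypothetical.

```python
def starting_window(numbuf: int) -> int:
    """Suggested starting LDKBMWND (or LDKBMWNG): 0.25 * NUMBUF (or NUMBUFG)."""
    return numbuf // 4  # rounding down is an assumption


def window_is_high(window: int, numbuf: int) -> bool:
    """True when the window exceeds half the pool, which may raise DKWR."""
    return window > numbuf / 2


print(starting_window(4000))       # 1000
print(window_is_high(2500, 4000))  # True
```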

The DKSAWx statistics count buffer writes done under the following conditions:

| Statistic | Meaning |
|---|---|
| DKSAWB | Counts buffers written from the buffer pool. The page in the buffer is then deleted and is no longer available without a re-read. This is usually a small statistic, typically incremented only by the INITIALIZE command and in a few other, rare events. This is not an anticipatory write. |
| DKSAWW | Counts anticipatory writes of buffers that entered the anticipatory write window. |

FBWT measures the number of times a requested buffer could not be obtained until a write was complete. If this number is high, it may be appropriate to increase the value of LDKBMWND or LDKBMWNG.

In addition:

- DKSTBLA, DKSTBLB, DKSTBLC, DKSTBLD, and DKSTBLF counters measure how real I/O to read pages of database files breaks down by table.

- DKSWRP and DKSWRPT measure the highwater mark and total number of writes in process within a monitoring window, and DKSDIR and DKSDIRT measure the highwater mark and total number of modified buffers within the window. The values of these four statistics are controlled by the LDKBMW parameter, which specifies the number of buffers examined by the Disk Buffer Monitor at each find-buffer operation. A system manager can reset LDKBMW during a run.

The monitoring window starts at the bottom of the reuse queue, and extends upward for the specified number of buffers. If LDKBMW is nonzero, every call to find a buffer causes Model 204 to examine the window.

Note: Because the overhead associated with this parameter is significant, Rocket Software recommends that it remain at its default value of zero, unless directed otherwise by a Rocket Software Technical Support engineer.

Disk update file statistics

The Model 204 disk update file statistics are displayed when the last user closes the file or when partial file statistics are gathered.

| Statistic | Records elapsed time in milliseconds... | Comments |
|---|---|---|
| DKUPTIME | To write a file's pages to disk and to mark it "physically consistent" on disk. | Includes all time spent writing pages, even if the disk update process was interrupted, as indicated by the following message: M204.0440: FILE filename DISK UPDATE ABORTED |
| PNDGTIME | That the file waits to be written to disk after the last update unit completes. | The measured interval begins at the completion of the last update unit for the file, and ends when a user (or possibly the CHKPPST) begins to write the file's modified pages to disk. The PNDGTIME statistic is accumulated only when the DKUPDTWT parameter is nonzero. Model 204 does not display or audit statistics with a value of zero. |
| UPDTTIME | That the open file is part of at least one update unit. | |

Page fixing

Use page fixing to reduce paging traffic in z/OS systems by using the z/OS PGFIX macro to fix heavily used sections of Model 204 in memory. You must authorize Model 204 under the VS Authorized Program Facility (APF) if any pages are to be fixed.

You can fix the following areas in memory by specifying a summed setting on the PAGEFIX parameter on User 0's parameter line:

- KOMM

- Scheduler work areas

- Server swapping work areas

- Statistics area

- Buffer hash field

- Buffer control blocks

- Buffers

- Journal control blocks and buffers

- Physical extent blocks for user files

- Physical extent blocks for CCATEMP

- LPM and DSL for user files

- LPM and DSL for CCATEMP

- DCB areas

- File save areas

- User/file mode table

- Resource enqueuing table

- Record enqueuing table

- Server areas

- Record descriptions

- Performance subtask work area

To prevent the operating system from paging in buffers only to have the data replaced, memory pages containing Model 204 file buffers are released and reallocated before disk read operations. Areas can vary in size, depending on the settings of various parameters such as NUSERS, NFILES, and MAXBUF.

Fixing too much in real memory might increase the operating system paging rate and adversely affect the performance of other z/OS tasks.

The use of this parameter is generally not recommended if the paging rate of the Model 204 address space is controlled through working-set size protection (discussed in Optimizing operating system resources).

1MB virtual pages

Model 204 supports 1MB virtual pages in this environment:

- CPU: on model z10 and later

- O/S: z/OS 1.9 and later

1MB pages can be used only with storage above the bar (2GB).

Use of 1MB pages provides a performance advantage because each virtual-to-real translation covers 1MB instead of 4K, so the processor's translation lookaside buffer can map far more storage and fewer translations of virtual to real addresses are needed.
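To give a sense of scale, the sketch below counts how many pages, and therefore address translations, are needed to map one area with 4K pages and with 1MB pages. The 512 MB area is a hypothetical example, not a recommended size.

```python
KB, MB = 1024, 1024 * 1024


def translations_needed(area_bytes: int, page_bytes: int) -> int:
    """Pages (and so address translations) needed to map an area, rounded up."""
    return -(-area_bytes // page_bytes)


area = 512 * MB  # a hypothetical 512 MB above-the-bar buffer area
print(translations_needed(area, 4 * KB))  # 131072 with 4K pages
print(translations_needed(area, 1 * MB))  # 512 with 1MB pages
```

The 256-to-1 ratio holds for any area that is a whole number of megabytes.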

To use 1MB pages, set the Model 204 parameter ZPAGEOPT to indicate which areas should be allocated using 1MB pages.

The size of the virtual storage area with 1MB pages for an LPAR is defined at IPL time by the z/OS LFAREA (Large Frame AREA) parameter, set in SYS1.PARMLIB(IEASYSnn). This storage is not pageable.

Please consult your systems programmer for both of these:

- Check the setting of the LFAREA parameter.

- Confirm that your operating system has all the latest maintenance from IBM regarding 1MB pages.

The LFAREA is dynamic and shared by all regions. When z/OS experiences a shortage of 4K pages, it steals 1MB pages and subdivides them into 4K pages. If the Model 204 request for virtual storage with 1MB pages cannot be satisfied, then regular 4K pages will be used for this purpose, without generating a warning.

When buffers are allocated with 1MB pages, Model 204 must use IOS Branch Entry (XMEMOPT parameter X'02' bit). EXCP and EXCPVR do not support buffers allocated with 1MB pages above the bar.

Parameters

The ZPAGEOPT parameter indicates which areas should be allocated using 1MB pages.

Two view-only parameters provide information on 1MB pages:

- STORZPAG: The current number of megabytes of above the bar storage allocated with 1MB pages.

- STORMXZP: The highwater mark of megabytes of above the bar storage allocated with 1MB pages during the run.

Options

The MONITOR command GSTORAGE option displays statistics regarding allocated above the bar storage.

Messages

The following messages are associated with 1MB pages and above the bar storage:

- M204.2919:HWM megabytes ATB storage allocated = %c

- M204.2925:HWM megabytes storage allocated with 1MB pages = %c

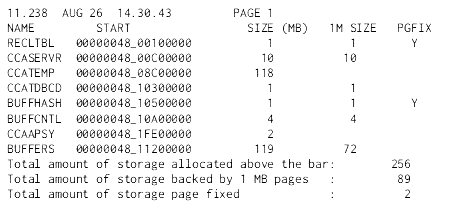

MONITOR GSTORAGE

The MONITOR GSTORAGE (monitor grande storage) option displays statistics regarding allocated storage and 1MB pages. It can be abbreviated as MONITOR GS or M GS.

MONITOR GSTORAGE uses the following format:

NAME START SIZE (MB) 1M-SIZE PGFIX

Where:

- NAME: name of the memory object.

Buffers are allocated in chunks and are presented on a single line named "BUFFERS", with data accumulated for all chunks.

- START: starting address of the memory object.

For buffers, it is the address of the first chunk.

- SIZE (MB): size in MB of the memory object.

For buffers, it is the accumulated size of all chunks.

- 1M-SIZE: size in MB of the memory object backed by z/OS 1MB pages.

For buffers, some chunks may be backed by 1MB pages and some by 4K pages, so the values in columns SIZE (MB) and 1M-SIZE may differ.

- PGFIX: a letter "Y" in this column indicates that the storage is page fixed.

There are three statistical messages printed below the table, indicating total amount of storage allocated above the bar, total amount of storage allocated with 1MB pages, and total amount of page fixed storage. All amounts are in MB. Data presented by MONITOR GSTORAGE represent current values.
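How the BUFFERS line and the three totals relate to the individual memory objects can be sketched as follows. The data class, the helper names, and the sample addresses and sizes are hypothetical. Showing "Y" in PGFIX for BUFFERS only when every chunk is fixed, and computing the totals as plain sums over the objects passed in, are assumptions about the display, not documented behavior.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MemoryObject:
    name: str
    start: int        # START: starting address
    size_mb: int      # SIZE (MB)
    one_mb_size: int  # 1M-SIZE: MB backed by 1MB pages
    pgfix: bool       # PGFIX: page fixed?


def buffers_line(chunks: list[MemoryObject]) -> MemoryObject:
    """Fold buffer chunks into the single BUFFERS line."""
    return MemoryObject(
        name="BUFFERS",
        start=chunks[0].start,                     # address of the first chunk
        size_mb=sum(c.size_mb for c in chunks),
        one_mb_size=sum(c.one_mb_size for c in chunks),
        pgfix=all(c.pgfix for c in chunks),        # assumption: Y only if all fixed
    )


def totals(objects: list[MemoryObject]) -> tuple[int, int, int]:
    """Total ATB MB, total MB in 1MB pages, total page-fixed MB."""
    return (
        sum(o.size_mb for o in objects),
        sum(o.one_mb_size for o in objects),
        sum(o.size_mb for o in objects if o.pgfix),
    )


chunks = [
    MemoryObject("chunk", 0x1_0000_0000, 64, 64, True),  # backed by 1MB pages
    MemoryObject("chunk", 0x1_0400_0000, 64, 0, True),   # fell back to 4K pages
]
other = MemoryObject("OTHER", 0x2_0000_0000, 10, 0, False)
line = buffers_line(chunks)
print(line.size_mb, line.one_mb_size)  # 128 64
print(totals(chunks + [other]))        # (138, 64, 128)
```

The differing SIZE (MB) and 1M-SIZE values on the BUFFERS line come from the chunk that could not get 1MB pages.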

Example

The following example shows output from a MONITOR GSTORAGE command, showing the amount of storage that could, and could not, be allocated above the bar and in 1MB pages:

Offloading Model 204 work to zIIP processors

On a z/OS system, Model 204 zIIP support (generally available in Model 204 V7.4) enables you to offload Model 204 work from regular processors to zIIP processors.

Model 204 can run a portion of workload on IBM System z Integrated Information Processor (zIIP) engines. The only unit of work that z/OS schedules to run on a zIIP engine is the enclave Service Request Block (SRB). An enclave is a collection of resources, such as address spaces, TCBs, and SRBs, that are controlled by Workload Manager (WLM) as an entity.

Model 204 creates enclave SRBs to do work on a zIIP processor. These SRBs are managed in the same way as MP/204 subtasks. Therefore, the term zIIP subtask is used to refer to Model 204 enclave SRBs.

Note: MP subtasks and zIIP subtasks may be used together. Therefore, AMPSUBS, NMPSUBS, AMPSUBZ, and NMPSUBZ may all be greater than zero. However, to avoid unnecessary overhead:

- Do not set AMPSUBS greater than the number of central processors actually available.

- Do not set AMPSUBZ greater than the number of zIIP processors actually available.

Model 204 workload eligible for zIIP offload

Components of Model 204 that are eligible for zIIP processing include:

- FIND statement for files and groups

- SORT statement, when there are more than 64 records

- APSY load

- Server swapping

- FOR loops, if they do not:

- Perform updates

- Issue READ SCREEN statements

- Issue MQ calls (for example, MQGET)

- Issue calls to mathematical functions (for example, $Arc)

- Read or write external, sequential files

Note: As of Model 204 version 7.6, improvements to the zIIP offload make most of the Model 204 code zIIP tolerant: almost all Model 204 code that can run on an MP subtask is offloaded to zIIP. Code that cannot be executed on zIIP uses the MP subtask or a maintask.

While a very limited version of this capability is built in for all customers of 7.6 and higher, the fully expanded zIIP support is known as M204 HPO, and it requires an additional license. For more information, contact Rocket Software.

Fast/Unload zIIP processing

The Model 204 add-on, Fast/Unload, offers support for zIIP processing beginning with Model 204 version 7.6. Available only to the Fast/Unload SOUL Interface (FUSI) in version 7.6, Fast/Unload HPO (and Model 204 HPO) let FUSI requests invoke Fast/Unload code that is contained within the Model 204 load module and capable of using a zIIP processor.

As of Model 204 version 7.7, Fast/Unload is entirely linked with the Model 204 Online load module. If run with a reference to the Model 204 load library, and licensed for Fast/Unload HPO and Model 204 HPO, a Fast/Unload job can take full advantage of Model 204 zIIP processing.

Model 204 parameters for zIIP support

The following parameters, most modeled from their MP counterparts, are provided for zIIP support:

- AMPSUBZ: Specifies the number of active zIIP subtasks

- MPDELAYZ: Specifies the number of milliseconds to delay starting an additional zIIP subtask when an extra one should be started

- NMPSUBZ: Specifies the number of zIIP subtasks attached during initialization (enclave SRBs)

- SCHDOFLS: Specifies the target number of threads on the zIIP offload queue at which MP subtasks may steal from the zIIP subtask queue

- SCHDOFLZ: Specifies the target number of threads on the zIIP offload queue per active zIIP subtask

- SCHDOPT1: Allows server swapping by an MP subtask when all zIIP subtasks are busy

- ZQMAX: Limits the number of work units for execution by the zIIP subtask

Additionally, values in the XMEMOPT and SCHDOPT parameters support zIIP processing, as described below.

XMEMOPT

The X'02' bit of XMEMOPT, which is its default, must be on to enable zIIP support.

SCHDOPT

The following SCHDOPT values are related to zIIP support:

- X'08': Do server I/O on MP subtask.

- X'20': Do not let the maintask pick up zIIP workload, even if no other work is available.

- X'40': Do not let an MP subtask pick up zIIP workload, even if no other work is available.

- X'80': Allow server swapping to be offloaded to zIIP processors. If you have SCHDOPT=X'80' set and you also use the MP/204 feature, then you might want to set SCHDOPT1=X'01' as described in the "Usage Notes" below.

The X'80' setting is only valid when CCASERVR is in memory (servers swapped into memory). If you set SCHDOPT=X'80' when CCASERVR is not in memory, the X'80' setting is reset and message 2914 is issued:

SCHDOPT INDICATION OF SERVER SWAPPING DONE BY ZIIP IS ONLY VALID WHEN CCASERVR IS IN MEMORY

Initialization then continues.

Usage Notes

Note: As of version 7.6 of Model 204, the X'08' and X'80' values are disabled (have no effect) because the behavior they invoked is automatically on in those versions.

- SCHDOPT=X'08': An MP subtask always executes a swap unit of work before a user unit of work. This could mean that every MP subtask is busy swapping.

- SCHDOPT=X'88': The zIIP subtask behaves exactly as an MP subtask. Both zIIP and MP subtasks could be busy swapping while no work is actually being done.

- SCHDOPT=X'80' and SCHDOPT1=X'01': The zIIP subtask does swapping before doing any work, and the MP subtask does real user work. But if there is no user work to do, the MP subtask helps the zIIP subtask by executing a swap unit of work. That way, if the zIIP subtask gets behind on its swapping, the MP subtask can assist.
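Because SCHDOPT is a bit mask, a combined value such as X'88' is just X'08' plus X'80'. The sketch below decodes the zIIP-related bits listed in this section and applies the initialization rule that resets X'80' (with message 2914) when CCASERVR is not in memory. The function names are hypothetical, the descriptions are paraphrased from this page, and the version 7.6 behavior, where X'08' and X'80' have no effect, is not modeled.

```python
from __future__ import annotations

SCHDOPT_BITS = {
    0x08: "Do server I/O on MP subtask",
    0x20: "Maintask does not pick up zIIP workload",
    0x40: "MP subtask does not pick up zIIP workload",
    0x80: "Server swapping may be offloaded to zIIP",
}


def describe_schdopt(schdopt: int) -> list[str]:
    """List the zIIP-related meanings of the bits that are on."""
    return [text for bit, text in SCHDOPT_BITS.items() if schdopt & bit]


def validate_schdopt(schdopt: int, ccaservr_in_memory: bool) -> tuple[int, bool]:
    """Reset X'80' if CCASERVR is not in memory; report whether message 2914 applies."""
    if schdopt & 0x80 and not ccaservr_in_memory:
        return schdopt & ~0x80, True
    return schdopt, False


print(describe_schdopt(0x88))
print(validate_schdopt(0x88, ccaservr_in_memory=False))  # (8, True)
```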

SCHDOPT1

The parameter SCHDOPT1 X'01' bit allows an MP subtask to handle server swapping if the subtask has nothing else to do.

SCHDOPT1 is useful when zIIP is handling server swapping and needs help from an MP subtask.

Note: As of version 7.6 of Model 204, the X'01' bit is disabled (has no effect) because the behavior it invoked is automatically on in those versions.

Limiting the number of work units for execution by the zIIP subtask

The ZQMAX parameter limits the number of work units that may be placed for execution by the zIIP subtask.

The default and maximum value for ZQMAX is 65535.

- If ZQMAX is set to a non-zero value:

If a work unit is pushed onto the zIIP queue and the current zIIP queue length exceeds the value of ZQMAX, then that work unit is placed on the MP stack instead. Effectively, this limits the amount of work that is done on the zIIP and prevents zIIP overload.

- If ZQMAX is set to zero:

No users will be added to the zIIP queue. However, if SCHDOPT X'80' is on and a zIIP subtask is active (AMPSUBZ > 0), then server swapping occurs on the zIIP subtask without the overhead of pushing zIIP work units onto the zIIP queue.
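The ZQMAX rules above amount to a small routing decision for each zIIP-eligible work unit. The sketch below expresses it; the function name and return strings are hypothetical, and the ZQMAX=0 server-swapping exception is not modeled. Note that ZQMAX=0 needs its own case: with a plain "queue length exceeds ZQMAX" test, an empty queue would still accept work.

```python
ZQMAX_LIMIT = 65535  # default and maximum value of ZQMAX


def route_work_unit(zqmax: int, ziip_queue_len: int) -> str:
    """Decide where a zIIP-eligible work unit goes under ZQMAX."""
    if not 0 <= zqmax <= ZQMAX_LIMIT:
        raise ValueError("ZQMAX must be between 0 and 65535")
    if zqmax == 0:
        return "MP stack"   # ZQMAX=0: nothing is added to the zIIP queue
    if ziip_queue_len > zqmax:
        return "MP stack"   # zIIP queue already exceeds ZQMAX
    return "zIIP queue"


print(route_work_unit(zqmax=5, ziip_queue_len=6))  # MP stack
```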

Model 204 zIIP infrastructure created during initialization

If NMPSUBZ is greater than zero, that number of zIIP subtasks is created at initialization. Each zIIP subtask is an enclave SRB.

AMPSUBZ specifies the number of active zIIP subtasks, and it can be reset during the run.

Tuning the zIIP subtask activation scheduler

The SCHDOFLZ parameter specifies the target number of threads on the zIIP offload queue per active zIIP subtask. Setting this parameter makes the Model 204 zIIP subtask scheduler more responsive to instantaneous increases in load.

The default value of 2 means that if there are more than twice as many units of zIIP offloadable work waiting to be processed in a zIIP subtask as there are active subtasks, and if fewer than AMPSUBZ subtasks are currently running, another zIIP offload subtask (not to exceed AMPSUBZ) is activated. Subtasks are deactivated, but remain available, when they have no work to do. This deactivation helps minimize zIIP overhead.

The default value is recommended in most cases:

- Setting the value to 1 potentially increases throughput, but it also increases overhead, which is not recommended unless your site has plenty of spare CPU capacity.

- A value of 0 is not recommended because it will likely result in the unnecessary activation of zIIP subtasks — by the time some of them are dispatched, other zIIP subtasks will have handled all pending units of work.

- A value greater than 2 might decrease CPU overhead, but it might also reduce throughput by reducing parallelism.
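The SCHDOFLZ activation test can be sketched as follows. This is an assumed, simplified model of the rule described above (the function name is hypothetical): "active subtasks" is taken to mean the zIIP subtasks currently running, and AMPSUBZ is the ceiling on how many may run.

```python
def activate_another_ziip_subtask(waiting_units: int, running: int,
                                  ampsubz: int, schdoflz: int = 2) -> bool:
    """Illustrative model of the SCHDOFLZ zIIP subtask activation rule."""
    if running >= ampsubz:
        # Never run more than AMPSUBZ zIIP subtasks.
        return False
    # Activate another subtask when waiting work exceeds
    # SCHDOFLZ units per currently running subtask.
    return waiting_units > schdoflz * running
```

With the default of 2 and two running subtasks, five waiting units trigger another activation but four do not. With SCHDOFLZ=0, any waiting work triggers an activation, which is why that value tends to wake subtasks that turn out to be unnecessary.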

If zIIPs get overloaded

In cases where there is insufficient zIIP capacity to handle the workload, non-zIIP MP subtasks can run (steal) some of the queued work to reduce the CPU load. The SCHDOFLS parameter added in version 7.7 of Model 204 lets you control the rate of such stealing from the zIIP queue.

SCHDOFLS specifies how large the zIIP offload queue can grow before an MP subtask is dispatched to perform work intended for a zIIP subtask. The higher the SCHDOFLS value, the slower the workload relief.

The SCHDOFLS default, 2, dispatches a helping MP subtask if all zIIP subtasks are active and the number of units of work on the zIIP offload queue is more than two times the number of active zIIP subtasks (AMPSUBZ).

If your system's response to zIIP overload spikes is too aggressive and you are experiencing very high task activation/deactivation costs, the version 7.7 parameters MPDELAY and MPDELAYZ may be useful. These parameters specify the number of milliseconds by which to delay the activation of each additional MP or zIIP subtask, respectively.

Note also that under Model 204 7.7 and later, if the SCHDOPT X'20' bit is off, the maintask also runs work queued for zIIPs if the zIIP subtasks appear totally saturated. The maintask does so only if all zIIP subtasks are active and the zIIP queue length is SCHDOFLZ*AMPSUBZ or higher.
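The two "help the zIIPs" conditions above can be compared side by side in a small Python model. This is a sketch under stated assumptions, not the Model 204 scheduler: the function names are hypothetical, and each stated condition is treated as the complete rule, which is a simplification.

```python
SCHDOPT_X20 = 0x20  # when on, the maintask may not take zIIP work

def mp_subtask_should_help(queue_len: int, running_ziip: int, ampsubz: int,
                           schdofls: int = 2) -> bool:
    """SCHDOFLS rule: help only when every zIIP subtask is already active
    and the queue holds more than SCHDOFLS * AMPSUBZ units of work."""
    all_active = running_ziip >= ampsubz
    return all_active and queue_len > schdofls * ampsubz

def maintask_may_help(queue_len: int, running_ziip: int, ampsubz: int,
                      schdoflz: int = 2, schdopt: int = 0) -> bool:
    """Version 7.7+ rule: the maintask may take zIIP work only if the X'20'
    bit is off, all zIIP subtasks are active, and the queue length is at
    least SCHDOFLZ * AMPSUBZ."""
    if schdopt & SCHDOPT_X20:
        return False
    return running_ziip >= ampsubz and queue_len >= schdoflz * ampsubz
```

Raising SCHDOFLS from 2 to 3 with AMPSUBZ=2 moves the help threshold from more than 4 queued units to more than 6, which is the "slower workload relief" described above.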

The MONITOR TASKS command indicates a zIIP subtask by displaying a lowercase letter z beside the subtask number. The following are zIIP system statistics: ZDEQ, SDEQ, and ZIPCPU. See zIIP statistics, below, for details.

zIIP utilization

zIIP utilization can be regulated with the IIPHONORPRIORITY parameter. The z/OS parameter IIPHONORPRIORITY, which defaults to YES, honors the existing task processing priority. This means that a zIIP engine executes workload only when all regular engines are busy.

If zIIP usage is more important to you than regular CPU usage, set IIPHONORPRIORITY=NO and include the X'20' and X'40' bits in the Model 204 SCHDOPT parameter setting.

zIIP-compatible functions

All Rocket Software-supplied SOUL $functions are zIIP compatible.

User-written $functions and zIIPs

Note: This section applies only to Model 204 version 7.6 and higher.

Instead of indicating which code should run on zIIP, you need only indicate which Assembler code cannot run on zIIP. Customers planning to use zIIP processing must do one of the following:

- Inspect any user-written $functions for non-tolerant code (code that uses an SVC instruction or system services that may use SVCs, such as BSAM, VTAM, and task-mode z/OS macros), and bracket that code with SRBMODE OFF and SRBMODE ON.

- Specify MP=NO (the default), or no MP setting at all, in the function table to cause $functions to run on the maintask. Any other value for MP enables $functions to run on zIIP subtasks.

It is not always obvious whether code can or cannot run on a zIIP subtask. Even if all explicit SVCs and non-task-mode system macros issued by Model 204 have been identified and bracketed, some may be issued implicitly by z/OS or other services, so user testing is important.

zIIP statistics

The MONITOR STAT command output displays the zIIP statistics described in the following table:

| Statistic | Total CPU time in milliseconds used by... |
|---|---|
| CPUTOTZE | Workload eligible for offloading to a zIIP engine |
| CPUONZIP | All zIIP engines |

| Statistic | Number of times that a unit of work was taken by... |
|---|---|
| MTDEQ | The MAINTASK from the MAINTASK queue |
| MTSDEQ | The MAINTASK from an MP subtask queue |
| MTZDEQ | The MAINTASK from a zIIP subtask queue |
| STDEQ | An MP subtask from an MP subtask queue |
| STZDEQ | An MP subtask from a zIIP subtask queue |
| ZTDEQ | A zIIP subtask from a zIIP subtask queue |

MTZDEQ and STZDEQ show the number of times that the maintask or an MP subtask took work that was allocated to a zIIP subtask.

The MAINTASK can take work from an MP subtask queue or a zIIP subtask queue. An MP subtask can take work from a zIIP subtask queue. A zIIP subtask can only take work from a zIIP subtask queue.

To see non-zero results for MTZDEQ or STZDEQ, you must turn off the SCHDOPT X'20' and SCHDOPT X'40' bits. If SCHDOPT X'20' is on, the maintask is not allowed to take zIIP work. Likewise, if SCHDOPT X'40' is on, MP subtasks are not allowed to take work from the zIIP subtask queue. So, if either bit is on, the corresponding statistic is always zero.
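The queue-taking rules and their effect on the statistics can be captured in a small lookup model. The following Python sketch is illustrative only. The mapping comes from the table and paragraphs above, but the names `can_take` and `always_zero` and the string labels for tasks and queues are hypothetical.

```python
SCHDOPT_X20 = 0x20  # on: the maintask may not take zIIP work
SCHDOPT_X40 = 0x40  # on: MP subtasks may not take zIIP work

# (task that takes the work, queue it comes from) -> counting statistic
DEQ_STATISTICS = {
    ("maintask", "maintask"): "MTDEQ",
    ("maintask", "mp"): "MTSDEQ",
    ("maintask", "ziip"): "MTZDEQ",
    ("mp", "mp"): "STDEQ",
    ("mp", "ziip"): "STZDEQ",
    ("ziip", "ziip"): "ZTDEQ",
}

def can_take(taker: str, queue: str, schdopt: int = 0) -> bool:
    """True if `taker` may take work from `queue` under the given SCHDOPT."""
    if (taker, queue) not in DEQ_STATISTICS:
        return False  # for example, a zIIP subtask never takes MP work
    if (taker, queue) == ("maintask", "ziip") and schdopt & SCHDOPT_X20:
        return False
    if (taker, queue) == ("mp", "ziip") and schdopt & SCHDOPT_X40:
        return False
    return True

def always_zero(schdopt: int) -> list[str]:
    """Statistics that must stay at zero for this SCHDOPT setting."""
    return sorted(stat for (taker, queue), stat in DEQ_STATISTICS.items()
                  if not can_take(taker, queue, schdopt))
```

For instance, `always_zero(0x60)` names MTZDEQ and STZDEQ, matching the statement that either bit forces its corresponding statistic to zero.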

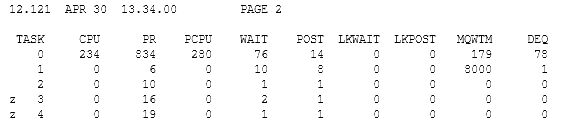

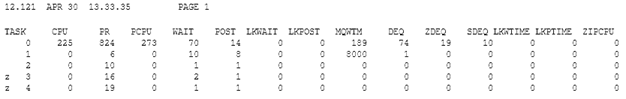

The MONITOR TASKS command displays three zIIP columns, which are visible on screens formatted for a 3270 Model 5 terminal.

The columns are:

- SDEQ: the number of units of work taken from an MP subtask queue by the maintask. This column has meaning only for the maintask; for other tasks it always contains a zero.

- ZDEQ: the number of units of work taken from a zIIP queue by either the maintask or an MP subtask. This column has meaning only for the maintask and MP subtasks; for zIIP subtasks it always contains a zero.

- ZIPCPU: the total CPU time (in milliseconds) consumed by this zIIP engine. It is zero for MP subtasks, and it is greater than zero when a "z" precedes the task number and that zIIP subtask has been active (AMPSUBZ>0) at some point during the run.

Examples

- This example shows the results of the MONITOR STAT command. The zIIP statistics in this output are CPUTOTZE, CPUONZIP, MTDEQ, MTZDEQ, STZDEQ, and ZTDEQ.

11.244 SEP 01 01.16.08 PAGE 2 AUDIT=16640 OUT=1225 IN=124 OUTXX=6836 INXX=182 DEV5=97 DEV6=581 DEV7=174 DEV8=30 WAIT=160665 DKRD=9078 DKWR=108152 SVRD=3766 SVWR=3775 CPU=4428 REQ=18 MOVE=117713 CNCT=556 SWT=19 RECADD=6362 RECDEL=10588 BADD=144347 BDEL=288 BCHG=67379 IXADD=16116 IXDEL=31854 FINDS=661 RECDS=78622 DKAR=35853 DKPR=2579487 TFMX=387 USMX=13 SVMX=6 SFTRSTRT=1 DKPRF=864643 SMPLS=30 USRS=12933 SVAC=6000 BLKI=6000 PCPU=562 DIRRCD=29040 STCPU=3186 STDEQ=23899 STWAIT=30743 STPOST=23952 LKWAIT=24 LKPOST=24 STIMERS=3831 SVPAGES=311303 COMMITS=3599 BACKOUTS=9 UBUFHWS=129448 TSMX=386 DKRDL=89 DKWRL=9611 CPUTOTZE=5 CPUONZIP=5 MTDEQ=116771 MTZDEQ=2082 STZDEQ=506 ZTDEQ=96 DKSRR=6792 DKSAWW=296 DKSRRFND=1921 DKSTBLF=3621 DKSTBLA=8 DKSTBLB=4874 DKSTBLC=3033 DKSTBLD=1725 DKSTBLX=48 DKSTBLE=13286

- This example shows output from a MONITOR TASKS command from the same run, using a Model 2 terminal:

- This example shows output from a MONITOR TASKS command from the same run, using a Model 5 terminal:

Balancing channel load

You can balance channel loads for z/OS, z/VM, and z/VSE systems in the following ways:

- Balance I/O traffic on channels and control units by splitting the server data sets so that they occupy portions of more than one disk unit. Server data set allocation is discussed in Allocation and job control.

- Reduce memory requirements for Model 204 by using a single server for many users through the use of swapping (see Requirements for server swapping).

Note: Server swapping substantially increases the amount of disk I/O traffic and increases CPU utilization for the run.

- If main memory is not a problem and I/O or CPU is, you can provide each user with a server (NSERVS=NUSERS). The server data set and all associated I/O are eliminated.

- In a z/OS environment, IFAM2 and remote User Language performance is improved if the four CRAM SVC load modules are made resident. If not all four modules can be made resident, performance is improved if the 1K module, IGC00xxx, is made resident and the number of SVC transient areas is increased.

For more information about the CRAM modules, see the Rocket Model 204 installation page for IBM z/OS.

Reducing storage requirements

You can save a considerable amount of space by excluding unnecessary modules and relinking the various configurations of Model 204. Certain object modules are optional and can be excluded during link-editing and generation of load modules.

Reduce the size of Model 204 load modules by eliminating object modules supporting unused features. To facilitate identification of unnecessary modules, a list of object modules for each operating environment is included on the Rocket Model 204 installation pages.

Dynamic storage allocation tracking

The Dynamic Storage Allocation Tracking facility in Model 204 provides information about virtual storage allocation during Model 204 execution. When specifying region size and spare core space, take into consideration the statistics provided by the Dynamic Storage Allocation Tracking facility.

VIEW statistics

To display the following statistics during execution, issue the VIEW command. To display an individual value, specify the statistic name in the VIEW command, such as VIEW STORMAX, or specify the ALL or SYSTEM option, such as VIEW ALL.

| Statistic | Meaning |
|---|---|
| STORCUR | The number of SPCORE storage bytes that are currently allocated. In z/OS, STORCUR includes storage above the line as well as below. More storage can be freed than is obtained after initialization, so STORCUR can be negative. |
| STORINIT | The amount of storage (in bytes) allocated during initialization. The STORINIT value does not include SPCORE space or the space for the Model 204 nucleus (ONLINE load module). |
| STORMAX | The highwater mark of STORCUR. |

User interfaces

The user interface to dynamic storage tracking includes a message written to the audit trail and the CCAPRINT data set. To display parameter values during execution, issue the VIEW command.

The following message is displayed in the audit trail and the CCAPRINT data set:

M204.0090 DYNAMIC STORAGE ACQUIRED DURING INITIALIZATION=nnnnnn, AFTER INITIALIZATION=nnnnnn

Where:

- The first value displayed is the amount of virtual storage acquired during system initialization. This value represents the minimum amount of storage needed for the parameters (except for SPCORE) specified in the CCAIN input.

- The second value is the highwater mark of storage acquired during operation after the initial allocation.

Additional storage can be acquired by some operating systems for control blocks and I/O areas (most notably z/VSE). Storage of this nature is not accounted for in Model 204 statistics.

Types of storage acquisition

Activities that can cause the operating system to get storage areas include:

- Using READ IMAGE from a VSAM data set (z/OS and z/VSE)

- Using sequential disk data sets through the USE command and SOUL READ IMAGE and WRITE IMAGE statements

- CMS interface storage acquisition

- Using FLOD exits

- Using the Cross-Region Access Method (see CRAM IFSTRT protocol IFAM2 (IODEV=23) requirements)

- Using external security interfaces (see Category:Security interfaces)

Cache fast write for CCATEMP and CCASERVR

Cache fast write can optionally be used for two heavily used data sets, CCATEMP and CCASERVR. The cache fast write feature, available on cached DASD controllers (such as IBM 3990 models 3 and 6), allows data to be written directly to a controller's cache. A write is considered successful as soon as it is accepted by the cache.

When the cache capacity is exceeded, parts of the data are written to disk. Upon cache or DASD failure, the disk image is not guaranteed; but since temporary and server files are rebuilt during initialization, cache fast write provides increased performance with no integrity exposure.

To enable cache fast write, set the system initialization parameter:

CACHE=X'nn'

where the valid values of nn are:

| Value | Use cache fast write for... |
|---|---|
| '00' | Do not use; the default. |
| '01' | CCASERVR access |
| '02' | CCATEMP access |
| '03' | Both CCATEMP and CCASERVR access |

Cache fast write options are selectable only at initialization and cannot be modified during the run.
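The CACHE values in the table behave as if X'01' and X'02' were independent bits, with X'03' combining them. The Python sketch below decodes a CACHE setting on that assumption. The bit interpretation is inferred from the table, and the helper name is hypothetical.

```python
CACHE_CCASERVR = 0x01  # X'01': cache fast write for CCASERVR
CACHE_CCATEMP = 0x02   # X'02': cache fast write for CCATEMP

def cache_fast_write_targets(cache: int) -> list[str]:
    """Data sets that use cache fast write for a given CACHE=X'nn' value."""
    if cache not in (0x00, 0x01, 0x02, 0x03):
        raise ValueError("valid CACHE values are X'00' through X'03'")
    targets = []
    if cache & CACHE_CCATEMP:
        targets.append("CCATEMP")
    if cache & CACHE_CCASERVR:
        targets.append("CCASERVR")
    return targets
```

Because the setting is fixed at initialization, a check like this belongs with CCAIN preparation rather than with any runtime RESET logic.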

Sequential processing

You can maximize sequential file processing performance by using file skewing (obsolete) or the Prefetch feature.

Prefetch feature

The Prefetch feature can improve performance of Model 204 for record-number order retrieval of a record set, particularly in a batch environment. Prefetch is for User Language applications only and applies only to Table B. It is not supported for Host Language Interface applications.

The Prefetch feature initiates a read of the next Table B page when a previous page is first declared to be current. The look-ahead reads are issued for the FOR EACH RECORD sequential record retrieval mode. Look-ahead read is suppressed if the FR statement contains an IN ORDER clause or if it references a sorted set.

Performance gains resulting from the Prefetch feature vary, depending on the environment in which a run occurs.

For complete information on Prefetch, see The prefetch (look-ahead read) feature.

How to use Prefetch

To use the Prefetch feature:

- Resize the MAXBUF parameter, based on the following formula:

MAXBUF = NUSERS * (4 + 2 * (Maximum FOR EACH RECORD loop nest level))

- Turn on the Prefetch feature by setting the SEQOPT parameter to 1 on the User 0 line.

The Prefetch feature can also be controlled with the RESET command issued by a user having system manager privileges.
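The MAXBUF sizing formula above translates directly into code. This Python helper is a sketch, and its name and argument names are hypothetical. It simply evaluates NUSERS * (4 + 2 * maximum FOR EACH RECORD nest level).

```python
def prefetch_maxbuf(nusers: int, max_fer_nest_level: int) -> int:
    """MAXBUF = NUSERS * (4 + 2 * maximum FOR EACH RECORD loop nest level)."""
    if nusers < 1 or max_fer_nest_level < 0:
        raise ValueError("NUSERS must be >= 1 and the nest level >= 0")
    return nusers * (4 + 2 * max_fer_nest_level)
```

For example, 100 users whose deepest FOR EACH RECORD nesting is 2 levels give MAXBUF = 100 * (4 + 4) = 800.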

Long requests

You can trap and evaluate unexpectedly long requests from Online users before the resources required to honor the request are used. Set the parameters listed in the following table to define the maximum amounts of resources a request can use. Default settings are extremely large. A user can reset the parameters if the request is valid and exceeds the settings provided.

| Parameter | Setting | Meaning |
|---|---|---|
| MCNCT | 300 | Maximum clock time in seconds |
| MCPU | 20000 | Maximum CPU time in milliseconds |
| MDKRD | 500 | Maximum number of disk reads |
| MDKWR | 250 | Maximum number of disk writes |
| MOUT | 1000 | Maximum number of lines printed on the user's terminal |
| MUDD | 1000 | Maximum number of lines written to a directed output (USE) data set |

If any maximum is exceeded, Model 204 pauses, displays the amount used, and issues messages that provide the option to continue or cancel the request. If the request is running under an application subsystem, it is canceled if any maximum is exceeded.
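The long-request check amounts to comparing each usage counter with its maximum and then choosing an action. The Python sketch below models that logic with the settings from the table. The usage dictionary, its keys, and the function names are assumptions made for illustration only.

```python
# Settings from the table above (illustrative values, not defaults).
LONG_REQUEST_LIMITS = {"MCNCT": 300, "MCPU": 20000, "MDKRD": 500,
                       "MDKWR": 250, "MOUT": 1000, "MUDD": 1000}

def exceeded_limits(usage: dict[str, int],
                    limits: dict[str, int] = LONG_REQUEST_LIMITS) -> list[str]:
    """Names of the parameters whose maximum the request has exceeded."""
    return [name for name, limit in limits.items() if usage.get(name, 0) > limit]

def long_request_action(usage: dict[str, int], in_subsystem: bool) -> str:
    """'continue' if within limits; otherwise prompt the user, or cancel
    when the request runs under an application subsystem."""
    if not exceeded_limits(usage):
        return "continue"
    return "cancel" if in_subsystem else "prompt"
```

A request that has used 25000 ms of CPU would be flagged for MCPU. It would be cancelled under an application subsystem and otherwise paused with a continue-or-cancel prompt.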

You can set the SCHDOPT parameter to X'10' to use the CSLICE parameter to verify that long request values are not exceeded.

IFAM2 control

Host Language Interface application programs in execution can dramatically affect system performance as seen by other IFAM programs or Online Model 204 User Language users. The amount of processing required for IFAM loops, hard-wait states, or noninteractive batch mode execution makes it difficult to detect when resource use is excessive and service to other users degrades. Long request parameters are not available to Host Language Interface jobs.

Use the following commands to monitor and control the IFAM2 threads (IODEV=23 and IODEV=43).

The following considerations apply:

- If possible, initialize every IFAM2 service program with support for at least one terminal. This allows use of the IFAM2 command facility.

- To avoid entering a line for infrequent monitoring purposes, specify User Language connections from host language programs to Model 204 in the IFAM/Model 204 service program for a teleprocessing monitor.

- If you issue one of the IFAM system control commands when IFAM2 is not active (no IODEV=43 statements in CMS, no IODEV=23 statements in z/OS or z/VSE), an error message is displayed.

- When using the IFAM control commands, if the CRAM argument is not specified and if any CRAM threads are defined, the CRAM channel is assumed.

If CRAM threads are not defined, the IUCV channel is assumed.

SQL system and user statistics

This section describes Model 204 statistics that are specific to Model 204 SQL processing. For information on SQL Server IODEV threads, see SQL server threads (IODEV=19).

SQL statement statistics

Model 204 SQL processing generates a since-last statistics line after each Prepare, Execute, Open Cursor, terminate, and log-off.

The PROC field in the statistics output contains the SQL statement name. The LAST label indicates the type of statement:

- LAST = PREP indicates an SQL Prepare statement (compilation of an SQL statement), or compilation phase of Execute Immediate.

- LAST = EXEC indicates one of the following:

  - SQL Execute (execution of a compiled statement)

  - Execution phase of Execute Immediate

  - Open Cursor statement (execution of the SELECT statement associated with a cursor)

  - SQL user log-off

HEAP and PDL highwater marks

The HEAP statistic indicates the highwater mark for dynamic memory allocation (malloc) for processing of C routines. The SQL compiler/code generator uses this space. The SQL evaluator also uses the C heap for its runtime stack. Because dynamic memory is allocated before the system calls the SQL evaluator, the HEAP highwater mark does not change during a unit of SQL statement execution.

For user subtype '01' entries, the offset of the HEAP statistic is X'58', immediately following the OUTPB statistic. This statistic is also dumped by the TIME REQUEST command.

The PDL statistic, the pushdown list highwater mark, is checked and updated more often for SQL processing.

Interpreting RECDS and STRECDS statistics

The RECDS statistic (also used to count records processed by Model 204 FOR and SORT statements) indicates the number of physical cursor advances in an SQL base table and the number of records input to sort. The STRECDS statistic indicates the number of records input to SQL sort.

Multiple fetches of the same physical record without intervening cursor movement count as one read. For example, a sequential fetch of several nested table rows within a parent row increments the RECDS statistic by one.

Output sort records from SQL sort are not counted in the RECDS and STRECDS statistics.

Interpreting the PBRSFLT statistic

The Model 204 SQL sort uses a DKBM private buffer feature to increase the number of concurrent open buffers a user can hold. Use of the private buffer requires prior reservation. The PBRSFLT statistic indicates how many times a user failed to get a private buffer reservation.

In Model 204 SQL, reservation of this buffer is conditional, and the PBRSFLT counter is incremented only for unconditional reservations. Therefore, PBRSFLT is always zero for current SQL users. Failure to obtain this buffer is not reflected in the statistic, but is instead indicated by a negative SQL code and message.

In the record layouts, PBRSFLT follows the SVPAGES statistic and is generated for system subtypes '00' and '01' and user subtypes '00' and '02'. For example, the offset of PBRSFLT for system type '00' entries is X'1E0'.

Interpreting the SQLI and SQLO statistics

The SQLI and SQLO since-last statistics record the highwater marks for the SQL logical input and output record lengths, respectively. They indicate the size of the largest SQL request to the Model 204 SQL Server and the size of the largest response from the SQL Server.

You can use SQLI and SQLO to size the buffers that receive and transfer Model 204 SQL requests and responses. These buffer sizes are set by the Model 204 CCAIN parameter SQLBUFSZ and the Model 204 DEFINE command parameters INBUFSIZE and DATALEN.

SQLBUFSZ must be greater than or equal to the maximum SQLI. The SQLI and SQLO highwater marks appear in since-last statistics and TIME REQUEST. They appear in the user since-last subtype X'01' statistics record at offset 92(X'5C') and 96(X'60'), respectively.

You can use both SQLI and SQLO to set INBUFSIZE and DATALEN to either minimize wasted buffer space or reduce traffic across the Model 204 SQL connection.
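The SQLBUFSZ rule, which requires SQLBUFSZ to be greater than or equal to the maximum SQLI, can be applied to a set of observed SQLI highwater marks. In the Python sketch below, collecting those values (for example, from since-last statistics) is assumed to happen elsewhere, and the helper names are hypothetical.

```python
def minimum_sqlbufsz(sqli_highwater_marks: list[int]) -> int:
    """Smallest SQLBUFSZ that is >= every observed SQLI highwater mark."""
    if not sqli_highwater_marks:
        raise ValueError("need at least one SQLI observation")
    return max(sqli_highwater_marks)

def sqlbufsz_is_sufficient(sqlbufsz: int, sqli_highwater_marks: list[int]) -> bool:
    """True if the configured SQLBUFSZ covers the largest SQL request seen."""
    return sqlbufsz >= minimum_sqlbufsz(sqli_highwater_marks)
```

Sizing INBUFSIZE and DATALEN from SQLI and SQLO involves a trade-off between wasted buffer space and connection traffic, so those values are a judgment call rather than a single formula.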

z/OS recovery

In z/OS, you can improve recovery performance by specifying the number of channel programs (NCP) and number of buffers (BUFNO) parameters in the DCB specifications for CCARF and RESTART streams.

- Multiple channel programs allow the initiation of multiple input requests at one time.

- Multiple input buffers allow input data to be processed with less I/O wait time.

Do not set BUFNO less than NCP:

- If BUFNO is less than NCP, Model 204 defaults BUFNO to the value of NCP.

- If BUFNO is set to a high value, SPCORE requirements are significantly increased.

- Rocket Software recommends that you set BUFNO to one more than the NCP value for more overlapped processing. For example:

//CCARF DD DSN=M204.JRNL1,DCB=(NCP=3,BUFNO=4),DISP=OLD

//RESTART DD DSN=M204.CHKP1,DCB=(NCP=7,BUFNO=8),DISP=OLD
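The BUFNO rules above can be expressed as two small helpers: one for the value Model 204 actually uses, and one that builds the recommended DCB subparameters. This Python sketch is a hypothetical convenience, not a Rocket utility. It generates only the DCB text shown in the JCL examples.

```python
def effective_bufno(ncp: int, bufno: int) -> int:
    """BUFNO that Model 204 uses: a value below NCP is raised to NCP."""
    return max(bufno, ncp)

def recommended_dcb(ncp: int) -> str:
    """DCB subparameters following the 'BUFNO = NCP + 1' recommendation."""
    if ncp < 1:
        raise ValueError("NCP must be at least 1")
    return f"DCB=(NCP={ncp},BUFNO={ncp + 1})"
```

`recommended_dcb(3)` and `recommended_dcb(7)` reproduce the CCARF and RESTART settings in the example. Keep in mind that larger BUFNO values also raise SPCORE requirements.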

Resident Request feature for precompiled procedures

QTBL (the internal statement table for Model 204 procedures) and NTBL (which contains statement labels, list names, and variables) can occupy more than 40% of a user's server. Users executing shared precompiled procedures can reduce CCASERVR and CCATEMP I/O by using shared versions of NTBL and QTBL in 31-bit or 64-bit virtual storage. If this storage is exhausted, Model 204 attempts to use storage below the line.

STBL, NTBL, and QTBL reside at the end of the server. When a precompiled procedure incurs a number of server writes exceeding the value of RESTHRSH, NTBL and QTBL are copied into virtual storage rather than into the user's server space. Then another user executing the same procedure can use the copies of NTBL and QTBL that are already in storage, which considerably reduces server I/O every time a new user runs the request.

The following system parameters allow system managers to control and monitor resident requests:

- RESPAGE is a resettable User 0 parameter that defines the maximum amount of 64-bit virtual storage (in 4K-byte pages) to allocate for resident requests. The default is 0.

- RESSIZE is a resettable User 0 parameter that defines the maximum amount of 31-bit virtual storage (in bytes) to allocate for resident requests. This storage is acquired dynamically on a procedure-by-procedure basis. The default is 0.

- RESTHRSH is a resettable User 0 parameter indicating the number of server writes that a request incurs before Model 204 saves its NTBL and QTBL in resident storage, assuming enough appropriate (64-bit or 31-bit) virtual storage is available. The default is 100.

- RESCURR is a view-only system parameter indicating the amount of virtual storage (in bytes) that is currently allocated to save resident NTBLs and QTBLs when running precompiled SOUL requests.

- RESHIGH is a view-only system parameter indicating the high-water amount of virtual storage (in bytes) allocated in this Model 204 run to save resident NTBLs and QTBLs when running precompiled SOUL requests.
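The interaction of RESPAGE, RESSIZE, and RESTHRSH can be approximated in a short Python model. This is a simplified sketch under stated assumptions. The function names are hypothetical, "exceeding RESTHRSH" is read as strictly greater than, and the fallback to storage below the line is not modeled.

```python
PAGE_BYTES = 4096  # RESPAGE is counted in 4K-byte pages

def resident_limits(respage: int, ressize: int) -> dict[str, int]:
    """Maximum resident-request storage in bytes, by storage type."""
    return {"64-bit": respage * PAGE_BYTES, "31-bit": ressize}

def becomes_resident(server_writes: int, tables_bytes: int,
                     free_64: int, free_31: int, resthrsh: int = 100) -> bool:
    """NTBL/QTBL are saved once server writes exceed RESTHRSH and enough
    64-bit or 31-bit resident storage remains to hold them."""
    if server_writes <= resthrsh:
        return False
    return tables_bytes <= free_64 or tables_bytes <= free_31
```

With the defaults (RESPAGE=0, RESSIZE=0), both limits are zero, so no request can become resident until at least one of the parameters is raised.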

Performance considerations

The implementation of the Resident Request feature includes a mechanism (PGRLSE) for releasing unused storage associated with QTBL. This storage-release strategy can reduce Model 204 address space requirements for large Onlines, even if there is no server swapping.

Once a request is resident, it remains resident until the application subsystem is stopped. However, switching into nonresident mode (which disables the page release mechanism) can occur when:

- The next request in the program flow (determined by a global variable) is not resident.

- Users are swapped, and an inbound user is in nonresident mode.

- A request executes a Table B search or evaluates a Find statement using a NUMERIC RANGE index. In this case, the request is loaded into the user's server to complete evaluation, but the resident copy remains.

The Resident Request feature is most effective when there is very little switching back and forth between resident and nonresident mode. Because frequent switching to nonresident mode decreases the overall effectiveness of the page release strategy, Model 204 automatically turns off the page release mechanism if the number of switches within a given subsystem exceeds 25% of the number of resident request executions. If the number of switches drops below 25%, then the page release mechanism is turned back on.
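The 25% switching rule can be checked with integer arithmetic. The Python sketch below is a stateless simplification: it ignores whatever history Model 204 keeps when it turns the mechanism off and back on, and the function name is hypothetical.

```python
def page_release_on(switches: int, resident_execs: int) -> bool:
    """Page release stays on while switches are at most 25% of resident
    request executions (switches * 4 <= executions avoids floating point)."""
    return switches * 4 <= resident_execs
```

Applied to the sample MONITOR SUBSYS output in this section (64 switches against 110 resident procedure evaluations, about 58%), this model predicts that page release would be turned off for that subsystem.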

Storage protection for z/OS

If you want resident NTBLs and QTBLs to be storage-protected under z/OS, you must install M204XSVC. For more information, refer to

- Labels, names, and variables table (NTBL)

- Internal statement table (QTBL)

- Activating IOS Branch Entry

SVPAGES statistic

To monitor the amount of data transferred as a result of server reads and writes, the SVPAGES statistic is available under the following journal entries:

You can also view these statistics Online by using the MONITOR STAT and MONITOR SL commands.

Analyzing MONITOR command output

The following sample output shows how you can analyze resident request performance:

MONITOR SUBSYS (PROCCT) subsysname

SUBSYSTEM: PDS

NUMBER OF PRECOMPILABLE PROCEDURES (SAVED): 15

NUMBER OF PRECOMPILABLE PROCEDURES (NOT SAVED): 34

NUMBER OF NON-PRECOMPILABLE PROCEDURES: 14

NUMBER OF REQUESTS MADE RESIDENT: 4

NUMBER OF ELIGIBLE REQUESTS NOT RESIDENT: 0

STORAGE USED FOR RESIDENT REQUESTS: 290816

NUMBER OF RESIDENT PROCEDURE EVALS: 110

NUMBER OF SWITCHES FROM RESIDENT MODE: 64

The "precompilable procedures not saved" have not been saved because they have not been compiled or evaluated yet or, if they have, have not yet incurred the number of server writes specified by the threshold, RESTHRSH.

If "eligible requests not resident" is nonzero, not enough storage (RESSIZE or RESPAGE) was available to make those requests resident.

"Number of switches from resident mode" indicates the number of times the NEXT global variable invoked a request that was not resident, or the number of times the procedure being evaluated encountered a direct Table B search or a NUMERIC RANGE field and had to be loaded into the user's server to complete evaluation.

Multiprocessing (MP/204)

MP/204, the Model 204 multiprocessing facility, makes full use of multi-processor configurations on IBM mainframes and compatibles running z/OS.

With MP, a single Online can access several processors simultaneously, allowing user requests to execute in parallel. Parallel processing increases throughput by giving the Model 204 address space additional CPU resources. An Online configured for MP can handle more volume in a fixed amount of time, or reduce response time for a fixed amount of work.

An MP Online performs parallel processing by distributing work between a maintask and multiple z/OS subtasks, which are attached during initialization. The subtasks (also called offload tasks) execute segments of evaluated code, including most SOUL constructs. Certain activities can be performed only by the maintask; for example, external I/O including CHKPOINT and CCAJRNL.

The Model 204 scheduler and evaluator control the movement of work between the maintask and subtasks. The evaluator identifies constructs that can be offloaded, and requests task switching accordingly. Users ready for task switching are then placed on the appropriate queue. For example, when a subtask has finished executing a User Language FIND statement, the user is placed on a queue of users waiting to return to the maintask.

The amount of throughput gained by using the MP feature depends on several factors, including CPU resources available, the amount of work that can be offloaded, and the benefit of offloading compared to the cost of task switching. This section explains how to activate the MP feature, analyze performance statistics, and set MP tuning parameters.

CPU accounting

If your installation uses Timer PC, disable it before running an MP Online. This facility is incompatible with Model 204 MP implementation, which uses the standard z/OS task timer for CPU accounting.

Activating MP

Once MP/204 is installed, the actual use of offload subtasks is optional. To activate multiprocessing, set the following system parameters on User 0's parameter line:

- The NMPSUBS parameter determines the number of offload subtasks attached during initialization. This is normally set to a number less than the number of available processors. The maximum value allowed is 32.

For example, the normal setting of NMPSUBS for a three-processor hardware configuration is 2.

- The AMPSUBS parameter determines the number of active subtasks (subtasks that actually perform offloaded work). The AMPSUBS setting must be less than or equal to the value of NMPSUBS.

Unlike NMPSUBS, AMPSUBS can be reset by the system manager at any time by issuing the command RESET AMPSUBS n, where n is the desired number of active subtasks. This is useful for adjusting to fluctuating system usage, or for determining the optimum number of active subtasks by experiment.

It is also possible to turn off MP (in effect) by resetting AMPSUBS to the value 0. When AMPSUBS is 0, no work is offloaded (all work is performed by the maintask).
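The constraints on the two MP parameters can be expressed as a small validation helper. This Python sketch is illustrative only, and the function names are hypothetical. It reads the "one fewer than the number of processors" rule from the three-processor example as a starting heuristic, not a requirement.

```python
MAX_NMPSUBS = 32

def typical_nmpsubs(processors: int) -> int:
    """Starting point: one fewer subtask than processors, capped at 32."""
    return max(0, min(processors - 1, MAX_NMPSUBS))

def check_mp_settings(nmpsubs: int, ampsubs: int) -> bool:
    """Validate NMPSUBS/AMPSUBS; return True if any work can be offloaded."""
    if not 0 <= nmpsubs <= MAX_NMPSUBS:
        raise ValueError("NMPSUBS must be between 0 and 32")
    if not 0 <= ampsubs <= nmpsubs:
        raise ValueError("AMPSUBS must be between 0 and NMPSUBS")
    return ampsubs > 0
```

A three-processor configuration yields NMPSUBS=2. Resetting AMPSUBS to 0 passes validation but returns False, which corresponds to MP being turned off in effect.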

If you are using MP/204, consider Using unformatted system dumps (z/OS).

In addition to the basic MP parameters, the SCHDOPT and MPOPTS parameters are useful for system tuning. For details, see Scheduler operation and accounting (SCHDOPT) and Optimization using MPOPTS and MP OPTIONS.

The SCHDOFL parameter can also be used for system tuning, as needed, although the default value is recommended in most cases. For details, see Scheduler tuning (SCHDOFL).

MP performance and tuning

MP statistics

The following system final and partial statistics measure various aspects of MP performance. STCPU and STDEQ are also maintained as user final, partial, and since-last statistics.

- CPU

- DKPR

- DKPRF

- LKPOST

- LKPTIME

- LKWAIT

- MPLKPREM

- MPLKWTIM

- MQWTM

- PCPU

- PR

- STCPU

- STDEQ

- STPOST

- STWAIT

These statistics are all written to the journal data set (CCAJRNL).

- For a description of each statistic see the "Statistics with descriptions" table in the Using system statistics section.

- For the journal record layout of MP statistics see the "Layout of user subtype X'81' entries (CFRJRNL=1)" table in the Conflict statistics section.

Using MP statistics

- The CPU, PCPU, STCPU, and STDEQ statistics are important indicators of CPU utilization and task-switching overhead. These statistics are discussed separately in the next section.

- LKPOST and LKWAIT measure overhead associated with MP locks, that is, MP-specific mechanisms to regulate multiple users' access to facilities such as the disk buffer monitor.

You can change the number of spins that can be taken when MPLOCK is not available before going into wait by adjusting the setting of the MAXSPINS parameter.

Rocket Software recommends that you test the setting of the MAXSPINS parameter at your site. Increase the value of MAXSPINS, watching for a drop in the values of the LKWAIT and LKPOST statistics. When the values of those statistics stop dropping, that's an indication that you've found a good setting for the MAXSPINS parameter.

- STWAIT and STPOST measure the number of WAIT and POST operations associated with MP subtask switching, and are, therefore, indicators of one component of MP overhead.
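The MAXSPINS experiment described above is essentially a stopping rule: keep increasing the setting while LKWAIT keeps dropping. The Python sketch below applies that rule to a list of test results. The trial data in the usage note are invented for illustration, and LKPOST can be tracked in the same way.

```python
def choose_maxspins(trials: list[tuple[int, int]]) -> int:
    """trials: (MAXSPINS, LKWAIT) pairs in increasing MAXSPINS order.
    Returns the last setting before LKWAIT stopped dropping."""
    if not trials:
        raise ValueError("need at least one trial")
    best_setting, best_lkwait = trials[0]
    for setting, lkwait in trials[1:]:
        if lkwait >= best_lkwait:
            break  # no further drop: keep the previous setting
        best_setting, best_lkwait = setting, lkwait
    return best_setting
```

With hypothetical results of (100, 5000), (200, 3000), (400, 2900), and (800, 2900), the rule settles on 400, because going to 800 gives no further drop.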

Fast logical page reads

A logical page read (DKPR) is a moderately expensive operation, especially in an MP/204 environment. Often a page is logically read repeatedly; each logical read incurs the same overhead as the previous logical read.

Logical page reads are optimized; a popular page is kept pending or in deferred release after use. If a page is open when a logical page read is done for it, the logical page read can be done very quickly. This type of logical page read is called a fast read, because of the shorter path length.

A fast read is tracked with the DKPRF statistic. A fast page read does not increment the DKPR statistic.

The ratio of fast reads to standard page reads might be improved by increasing the value of the MAXOBUF parameter and by setting the SCHDOPT X'04' bit.
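A fast read increments DKPRF but not DKPR, so the total number of logical page reads is DKPRF + DKPR. The sketch below uses that fact to compute the measures you would watch while adjusting MAXOBUF or SCHDOPT. The function names and the sample statistics are hypothetical.

```python
def fast_read_share(dkprf: int, dkpr: int) -> float:
    """Fraction of all logical page reads (DKPRF + DKPR) that were fast reads."""
    total = dkprf + dkpr
    return dkprf / total if total else 0.0


def fast_to_standard_ratio(dkprf: int, dkpr: int) -> float:
    """Ratio of fast reads (DKPRF) to standard logical page reads (DKPR)."""
    if dkpr == 0:
        return float("inf") if dkprf else 0.0
    return dkprf / dkpr


# Hypothetical statistics before and after raising MAXOBUF.
print(fast_read_share(30000, 70000), fast_to_standard_ratio(30000, 70000))
print(fast_read_share(60000, 40000), fast_to_standard_ratio(60000, 40000))
```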

Viewing MP statistics

In addition to analyzing the journal statistics described above, the system manager can view task-specific MP statistics directly by issuing the MONITOR TASKS command.

Analyzing CPU utilization (CPU, STCPU, and STDEQ)

A logical first step in evaluating MP performance is to process a fixed amount of work with MP turned on and MP turned off, and then to compare results.

Normally the MP run consumes more total CPU than the non-MP run due to MP overhead, that is, extra CPU resources required for MP-specific activities such as task switching and MP resource locking.

If MP is working effectively, MP overhead is outweighed by the amount of processing that is performed in parallel by offload subtasks. Therefore, to analyze and tune MP, STCPU and related statistics must be considered in relation to the system and user CPU statistics.

Suppose, for example, that your comparison runs generate the following statistical data:

| MP turned off | MP turned on |
|---|---|
| CPU = 10000 | CPU = 11000 |
| | STCPU = 7000 |

These statistics show that your Model 204 application is a good candidate for multiprocessing. You can estimate total MP overhead by comparing CPU statistics: here, it is 11000 - 10000 = 1000 milliseconds. As the STCPU statistic shows, 7000 milliseconds of Online processing is actually performed by offload subtasks; therefore, the actual CPU usage by the maintask is 11000 - 7000 = 4000 milliseconds.

In the example above, the Model 204 application requires 10000 CPU milliseconds for processing (not counting MP overhead). The MP maintask does 4/10 of the total processing required. Therefore, MP throughput might improve by a factor of 10/4 = 2.5.

Analyzing throughput

Use the following formula as an approximate measure of the throughput potential of a Model 204 MP application:

NonMP CPU / (MP CPU - STCPU)
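The following minimal sketch reproduces the earlier comparison-run arithmetic and the throughput-potential formula above. The class and property names are illustrative only. All values are CPU milliseconds taken from the CPU and STCPU statistics.

```python
from dataclasses import dataclass


@dataclass
class MPComparison:
    nonmp_cpu: float  # CPU (ms) for the fixed workload with MP turned off
    mp_cpu: float     # CPU (ms) for the same workload with MP turned on
    stcpu: float      # STCPU (ms): CPU used by offload subtasks in the MP run

    @property
    def overhead(self) -> float:
        # Estimated MP overhead: extra CPU consumed by the MP run.
        return self.mp_cpu - self.nonmp_cpu

    @property
    def maintask_cpu(self) -> float:
        # CPU actually used by the maintask in the MP run.
        return self.mp_cpu - self.stcpu

    @property
    def throughput_potential(self) -> float:
        # NonMP CPU / (MP CPU - STCPU)
        return self.nonmp_cpu / self.maintask_cpu


run = MPComparison(nonmp_cpu=10000, mp_cpu=11000, stcpu=7000)
print(run.overhead, run.maintask_cpu, run.throughput_potential)
```

With the example figures, this yields an overhead of 1000 ms, maintask CPU of 4000 ms, and a throughput potential of 2.5, matching the hand calculation.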

The throughput improvement actually achieved might differ from the throughput potential if any of the following constraints are present:

- Limited processor availability

If, for example, the throughput potential of an application is 2.5, at least three processors are needed to achieve it. If only one offload subtask is doing 60% of the work, then throughput is no better than it would be if the maintask were doing 60% of the work.

- CPU contention with other jobs

- Resource and locking bottlenecks in Model 204

- I/O bottlenecks (many I/O activities must be performed by the maintask)
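The processor-availability constraint can be illustrated with a simplified model. It ignores MP overhead and the other constraints listed above, and assumes each task runs on its own processor. Under that model, the busiest single task limits the whole Online, so the improvement is at most 1 divided by the largest share of work on any one task. The function names are hypothetical.

```python
import math
from typing import Sequence


def min_processors(potential: float) -> int:
    """Smallest whole number of processors that could deliver `potential`."""
    return math.ceil(potential)


def throughput_bound(shares: Sequence[float]) -> float:
    """Upper bound on throughput improvement when each task (maintask or
    a single offload subtask) has its own processor. `shares` are the
    fractions of total work done by each task; the busiest one limits
    the whole Online."""
    return 1.0 / max(shares)


print(min_processors(2.5))               # potential 2.5 needs three processors
print(throughput_bound([0.4, 0.6]))      # one subtask doing 60% of the work
print(throughput_bound([0.6, 0.4]))      # same bound if the maintask does 60%
print(throughput_bound([0.4, 0.3, 0.3])) # work spread over two subtasks
```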

STDEQ and STCPU

Another statistic useful for gauging subtask utilization is STDEQ, which counts the number of times that an MP subtask took a unit of work from the MP subtask queue and processed it. The ratio STCPU/STDEQ is proportional to the number of offloaded instructions executed per unit of work dequeued, so in tuning an MP application, it is a good sign if STCPU/STDEQ increases.
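Comparing STCPU/STDEQ across two runs of the same workload is simple arithmetic. The sketch below is illustrative, and the statistic values are hypothetical. A higher value means that each dequeued unit of work carries more offloaded processing, which spreads the task-switching cost over more instructions.

```python
def cpu_per_dequeue(stcpu_ms: float, stdeq: int) -> float:
    """Average subtask CPU (ms) per unit of offloaded work (STCPU/STDEQ)."""
    if stdeq == 0:
        raise ValueError("STDEQ is zero: no offloaded work was processed")
    return stcpu_ms / stdeq


# Hypothetical statistics from two runs, before and after a tuning change.
before = cpu_per_dequeue(stcpu_ms=7000, stdeq=350000)
after = cpu_per_dequeue(stcpu_ms=7200, stdeq=240000)
print(f"before={before:.3f} ms, after={after:.3f} ms, improved={after > before}")
```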

MP overhead

MP overhead (estimated by subtracting CPU without MP from CPU with MP) can be a performance concern for two reasons. First, overhead increases CPU demand for the processor configuration, which might be required to perform work other than Model 204 Online processing. Second, MP overhead can have a negative effect on response time.

The two main sources of MP overhead are MP locking and task switching:

- Locking overhead increases with CPU utilization. Most MP lock contention is due to buffer pool activity (measured by the DKPR, DKRD, and DKWR statistics, described in Disk buffer monitor statistics and parameters). Disk reads and writes (DKRD/DKWR) can be reduced by increasing the size of the buffer pool. The relative proportion of contention due to page requests (DKPR) vs. disk reads and writes depends upon the particular application and configuration.

- Task switching can be affected by a variety of strategies open to the system manager and applications programmer. These options are discussed in the next two sections.

Spinning on an MP lock

The MPSYS parameter keeps a thread spinning on an MP lock even if it appears that the task holding the lock cannot be dispatched. MPSYS=X'01' causes Model 204 to spin, that is, to loop repeatedly attempting to get an MP lock, such as the lock for the:

- Buffer pool

- Record locking table

- LRU queue, and so on

This loop tries to acquire the lock up to MAXSPINS times before issuing an operating system wait. Without MPSYS=X'01', Model 204 immediately issues an operating system wait if the lock is unavailable.

The overhead of issuing an operating system wait is significant; it is usually much higher than the overhead of spinning even 200 times. In most cases, the lock becomes available before Model 204 has spun 200 times, so spinning saves significant overhead compared to issuing an operating system wait.
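The spin-then-wait strategy can be illustrated with a general-purpose sketch. This is not Model 204 code. Python's `threading.Lock` stands in for an MP lock, a non-blocking `acquire(blocking=False)` stands in for one spin, and a blocking `acquire()` stands in for the expensive operating system wait. The class name and its counters are hypothetical.

```python
import threading


class SpinThenWaitLock:
    """Illustrative spin-then-wait lock; not Model 204 code.

    acquire() tries a non-blocking acquire up to `max_spins` times
    (playing the role of MAXSPINS) before falling back to a blocking
    acquire, which stands in for the operating system wait.
    """

    def __init__(self, max_spins: int = 200) -> None:
        self._lock = threading.Lock()
        self.max_spins = max_spins
        self.spins = 0  # failed spin attempts (updated while holding the lock)
        self.waits = 0  # times spinning did not succeed and we blocked instead

    def acquire(self) -> None:
        spun = 0
        got_it = False
        while spun < self.max_spins:
            if self._lock.acquire(blocking=False):
                got_it = True
                break
            spun += 1
        if not got_it:
            self._lock.acquire()  # the "operating system wait"
            self.waits += 1
        self.spins += spun  # safe: we hold the lock here

    def release(self) -> None:
        self._lock.release()

    def __enter__(self) -> "SpinThenWaitLock":
        self.acquire()
        return self

    def __exit__(self, *exc_info) -> None:
        self.release()


# Usage: four threads contend for the lock to update a shared counter.
lock = SpinThenWaitLock(max_spins=200)
counter = 0


def worker() -> None:
    global counter
    for _ in range(10_000):
        with lock:
            counter += 1


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter, lock.spins, lock.waits)
```

Setting `max_spins=0` in this sketch makes every acquisition go straight to the blocking wait. This is loosely analogous to the behavior without MPSYS=X'01'.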

Waiting on an MP lock

You can track MP lock waits with the MPLKPREM and MPLKWTIM statistics.

- MPLKPREM: Total elapsed time, in milliseconds, that the Online spent waiting due to operating system preemption, across the maintask and all subtasks.

This is the elapsed time between the moment an MP lock becomes available (lock post), making a task ready to run, and the moment the task actually gets the CPU. This preemption delay is caused by the operating system dispatching other tasks ahead of this task.

- MPLKWTIM: Total elapsed time, in milliseconds, that the Online spent waiting for MP locks, across the maintask and all subtasks.

Waiting on the operating system

LKPTIME (lock preemption time): Total elapsed time, in milliseconds, that this task spent waiting due to operating system preemption.

This is the elapsed time between the moment an MP lock becomes available (lock post), making the task ready to run, and the moment the task actually gets the CPU.

This preemption delay is caused by the operating system dispatching other tasks ahead of this task.
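To make the definitions concrete, the sketch below models what the preemption statistics measure. It does not show how Model 204 actually computes them. Each lock post contributes the gap between the post and the moment the task gets the CPU. Summing per task gives an LKPTIME-like value, and summing across the maintask and all subtasks gives an MPLKPREM-like total. The event record format and the task names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event record: (task, ms when the MP lock was posted,
# ms when the task actually got the CPU).
PostEvent = Tuple[str, float, float]


def preemption_times(events: Iterable[PostEvent]) -> Tuple[Dict[str, float], float]:
    """Return per-task preemption delay (like LKPTIME) and the total
    across the maintask and all subtasks (like MPLKPREM)."""
    per_task: Dict[str, float] = defaultdict(float)
    for task, posted_ms, dispatched_ms in events:
        # Time the ready task waited while the OS dispatched other work.
        per_task[task] += dispatched_ms - posted_ms
    return dict(per_task), sum(per_task.values())


events = [("MAIN", 100.0, 100.5), ("SUB1", 200.0, 201.5), ("MAIN", 300.0, 300.25)]
per_task, total = preemption_times(events)
print(per_task, total)
```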

SOUL considerations

When tuning an MP Online, it is useful to measure throughput potential both for frequently executed User Language requests and for the application as a whole. Programs can vary considerably in their ability to benefit from MP. Read-intensive applications can have very high throughput potential. Update-intensive applications tend to have lower potential, because most update operations cannot be offloaded. Also, specific rules govern offloading of structures such as loops, subroutines, and $functions.

The MPOPTS parameter and the MP OPTIONS SOUL statement allow the programmer or system manager to specify which program structures can be offloaded.

These options can have a direct bearing on task switching overhead, because excessive overhead is generally caused by offload subtasks executing too few instructions to justify the cost of task switching. If certain offload strategies are not working efficiently, they can be disabled to reduce overhead.

Rules governing offloading, MPOPTS, and MP OPTIONS are discussed in Optimization using MPOPTS and MP OPTIONS.

Scheduler operation and accounting (SCHDOPT)

The Model 204 scheduler is responsible for task switching. The cost of each task switch tends to go up when subtask utilization goes down: when the subtasks have little work, z/OS WAIT and POST services are needed to complete the task switch. This can increase the cost per transaction, but that is usually unimportant when the system is lightly loaded.

The SCHDOPT parameter is available under z/OS and z/VM.

The default behavior of the Model 204 scheduler is to minimize overhead by switching users to offload subtasks only when other work is waiting for the maintask. This approach involves a trade-off between overhead and response time: with scheduler optimization, the maintask is often not available to process newly posted users immediately.

If your processor complex has some excess CPU capacity, then you can improve response time by turning off scheduler optimization. Do this by setting the X'02' bit of the User 0 parameter SCHDOPT. With this setting, all possible work is offloaded, regardless of the current load on the maintask.

When scheduler optimization is turned off, it might be desirable to increase the values of the NMPSUBS and AMPSUBS parameters. If a trial run with SCHDOPT='02' indicates that with n active subtasks, the maintask is consuming less than 1/n of the Online CPU, add 1 to the value of NMPSUBS and AMPSUBS.
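The subtask rule of thumb above can be expressed as a short check. This is a minimal sketch. It assumes, as in the earlier comparison example, that maintask CPU is estimated as CPU minus STCPU and that "Online CPU" is the CPU statistic. The function name and the sample figures are hypothetical.

```python
def suggest_subtask_increase(online_cpu: float, stcpu: float, active_subtasks: int) -> bool:
    """Apply the rule of thumb to a trial run with SCHDOPT X'02'.

    Returns True when, with n active subtasks, the maintask consumed less
    than 1/n of the Online CPU, suggesting that you add 1 to NMPSUBS and
    AMPSUBS. Maintask CPU is estimated as CPU - STCPU.
    """
    if active_subtasks <= 0:
        raise ValueError("active_subtasks must be at least 1")
    maintask_cpu = online_cpu - stcpu
    return maintask_cpu < online_cpu / active_subtasks


# Hypothetical trial run: CPU = 11000 ms, STCPU = 8500 ms.
for n in (3, 4, 5):
    print(n, suggest_subtask_increase(11000, 8500, n))
```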

Note: Optimization must be turned off (setting SCHDOPT='02') in order to perform meaningful throughput potential analysis.

SCHDOPT settings X'00' through X'08' are used to control maintask scheduler operation and accounting with MP/204.

| Setting | Purpose |
|---|---|
| X'00' | Default setting. Affects the way since-last statistics are computed: this setting can make it appear that users are using more CPU time than they actually are. To have Model 204 compute user since-last statistics without scheduler overhead, set SCHDOPT to X'01'. |
| X'01' | Model 204 tracks the maintask scheduler overhead and generates the SCHDCPU statistic. Use this setting when the system manager does not want MP users to be charged for scheduler overhead. |
| X'02' | Model 204 forces all processing that can be performed in parallel to run in an offload subtask, even if the maintask is not busy. The X'02' bit can be set whether server swapping goes to disk or to memory. It is most useful when swapping to a dataspace, because moving large amounts of data to and from a dataspace can be somewhat CPU intensive, which justifies the cost of offloading the work to an MP subtask. Keep in mind that setting the X'02' bit can introduce a slight delay into server swapping requests, although this delay is probably negligible. |
| X'04' | For critical file resource waits and other short-lived waits, Model 204 defers closing disk pages until the thread is actually swapped. Usually, when a swappable wait is done, Model 204 closes all still-open disk pages for that thread as quickly as possible. However, critical file resource waits tend to be short-lived, which makes this prompt page closing less efficient. |
| X'08' | Depends on the setting of AMPSUBS and has no effect if AMPSUBS=0. If AMPSUBS is greater than 0, server swaps are performed in an MP subtask if the maintask has any other work to do. Note: As of version 7.6 of Model 204, this setting is disabled (has no effect). |

Because the difference between a fast read of a deferred release page and a regular logical page read is small, Rocket Software recommends that you adjust the SCHDOPT X'04' setting only if you work in an MP/204 environment. Adjusting it has no significant impact in other environments.
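The text refers to individual SCHDOPT bits, such as "the X'02' bit" and "the SCHDOPT X'04' bit". Assuming these settings combine as bit flags, the sketch below decodes a SCHDOPT value into the settings described in the table. The dictionary and the function name are hypothetical, and the one-line summaries condense the table rather than quoting it.

```python
from typing import List

# One-line summaries of the SCHDOPT bits in the table above.
SCHDOPT_BITS = {
    0x01: "track maintask scheduler overhead (SCHDCPU); do not charge it to users",
    0x02: "offload all parallelizable work, even when the maintask is not busy",
    0x04: "defer closing disk pages on short-lived waits until the thread is swapped",
    0x08: "perform server swaps in an MP subtask (no effect as of Model 204 7.6)",
}


def decode_schdopt(value: int) -> List[str]:
    """List the SCHDOPT bits that are set in `value`."""
    known = sum(SCHDOPT_BITS)  # sum of the dict keys: X'0F'
    if value < 0 or value & ~known:
        raise ValueError(f"unexpected SCHDOPT value X'{value:02X}'")
    if value == 0:
        return ["X'00': default setting"]
    return [f"X'{bit:02X}': {text}" for bit, text in SCHDOPT_BITS.items() if value & bit]


print(decode_schdopt(0x06))
```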

Scheduler tuning (SCHDOFL)

The SCHDOFL parameter indicates the target number of threads on the MP offload queue per active task. Setting SCHDOFL makes the Model 204 MP subtask scheduler more responsive to instantaneous increases in load.

SCHDOFL has a default value of 2, meaning that if there are more than twice as many units of MP offloadable work waiting to be processed in an MP subtask as there are active subtasks, and fewer than AMPSUBS subtasks are currently running, another MP offload subtask (not to exceed AMPSUBS) is activated.

Subtasks are deactivated, but remain available, when they have no work to do. This deactivation helps minimize MP overhead.

The default SCHDOFL value of 2 is recommended in most cases:

- Setting the value to 1 can increase throughput, but it also increases overhead, so it is not recommended unless your site has plenty of spare CPU capacity.

- A value of 0 is not recommended because it is likely to activate MP subtasks unnecessarily: by the time some of them are dispatched, other MP subtasks will have handled all pending units of work.

- A value greater than 2 might decrease CPU overhead, but it can also reduce throughput by reducing parallelism.
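The activation rule described for SCHDOFL can be modeled as a single condition. Another subtask is activated when more than SCHDOFL units of offloadable work are queued per active subtask and fewer than AMPSUBS subtasks are running. This is a simplified model of the documented rule, not the scheduler's code. The function name and the sample queue state are hypothetical.

```python
def should_activate_subtask(queued: int, active: int, ampsubs: int, schdofl: int = 2) -> bool:
    """Model of the SCHDOFL rule: activate another MP offload subtask when
    more than SCHDOFL units of offloadable work are queued per active
    subtask and fewer than AMPSUBS subtasks are running."""
    return active < ampsubs and queued > schdofl * active


# Hypothetical state: 3 queued units, 2 active subtasks, AMPSUBS = 4.
for schdofl in (0, 1, 2, 3):
    print(schdofl, should_activate_subtask(queued=3, active=2, ampsubs=4, schdofl=schdofl))
```

In this model, lower SCHDOFL values activate subtasks sooner. A value of 0 activates a subtask whenever any work at all is queued, which is why such activations can turn out to be unnecessary.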

MP SOUL statement processing

In an Online configured for MP, the SOUL evaluator and Model 204 scheduler make decisions about what work to execute on the maintask and what work to offload to the subtasks.

The SOUL programmer does not need to code special statements in order to make use of MP/204. However, different program designs do have different MP-related consequences. Therefore, measure and tune the MP performance of frequently executed requests at your Model 204 installation.